The new features in Blackboard’s November upgrade will be available from the morning of Friday 3rd November. This month’s upgrade includes the following new features to Ultra courses:

- Ability to change ‘Mark using’ option without updating the Turnitin assignment due date

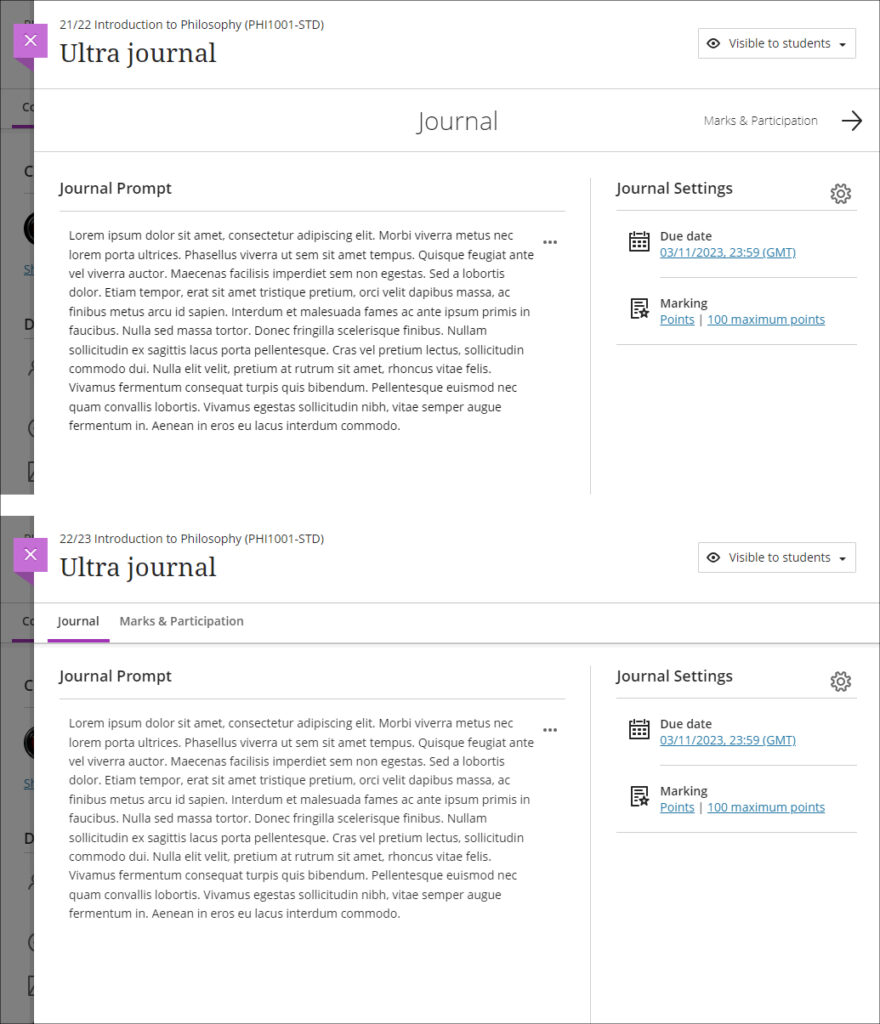

- Improved image insertion tool

- Improvements to matching questions in tests

- Improvements to journals navigation

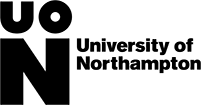

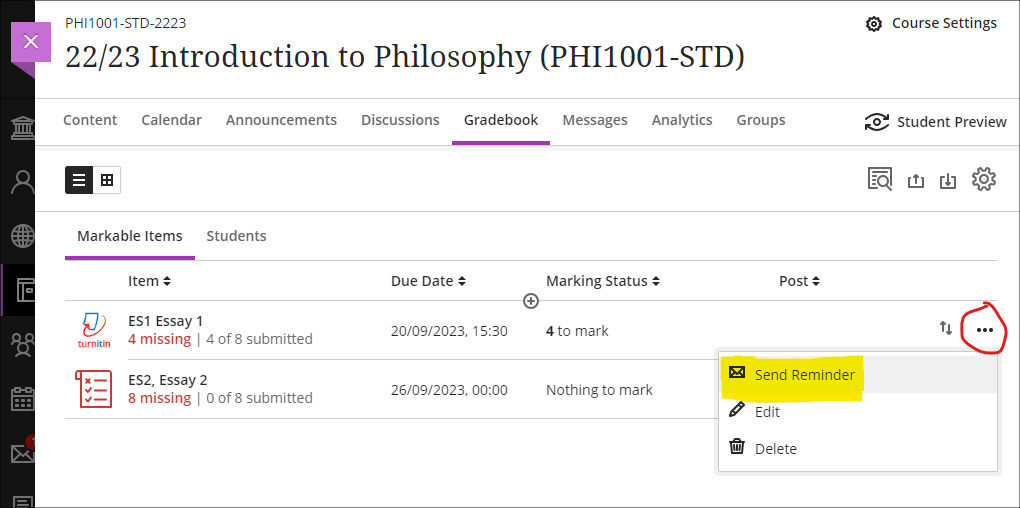

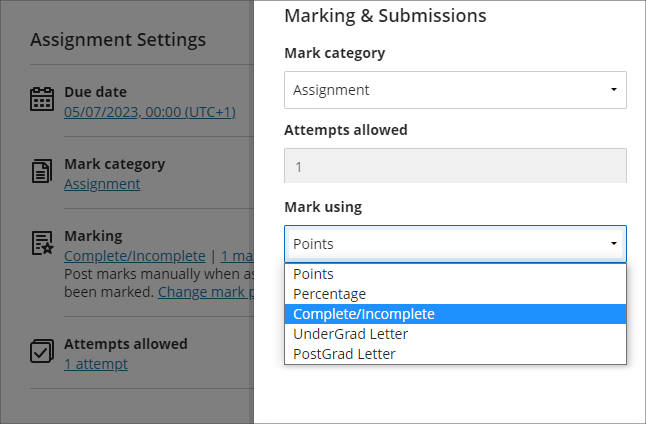

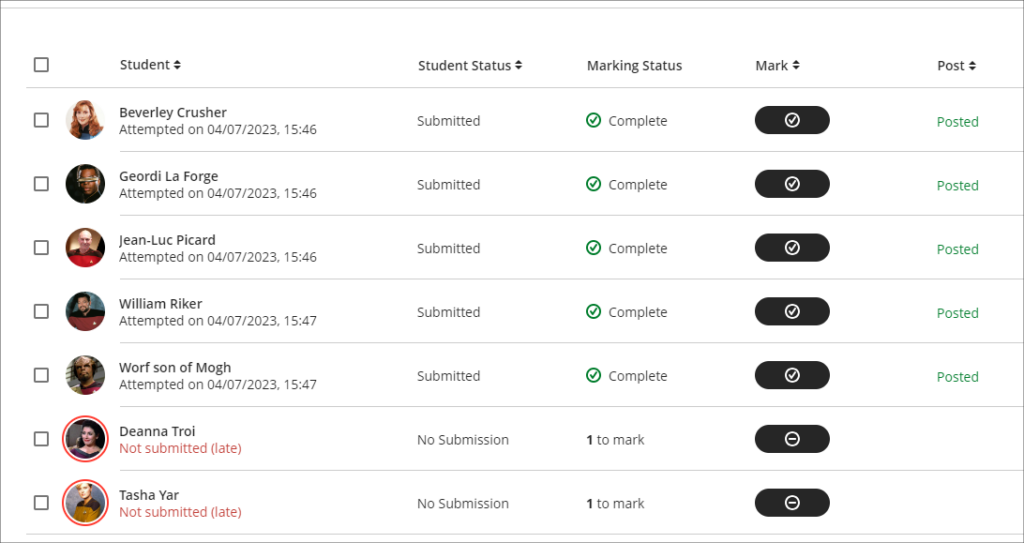

Ability to change ‘Mark using’ option without updating the Turnitin assignment due date

A source of frustration with Turnitin and Ultra courses has been that, once the assignment due date has passed, it is not possible to change the ‘Mark using’ option. This means that if the UnderGrad Letter or PostGrad Letter schema was not selected when the assignment was set up, it cannot be selected during marking without moving the due date into the future, which in most cases is not advisable. As a result, where the correct marking schema has not been selected, a mapped Gradebook item has had to be created to show the numeric grades as letters. This restriction has now been removed, and ‘Mark using’ can be changed without changing the due date.

Staff can update the ‘Mark using’ option in the gradebook by selecting the assignment in the column header and choosing ‘Edit’.

Please note that once the desired ‘Mark using’ option has been selected and saved, the gradebook will continue to show the original mark until the page is refreshed.

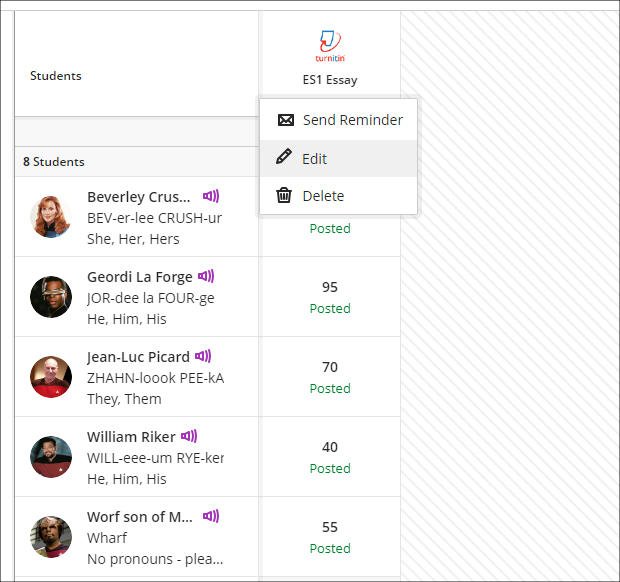

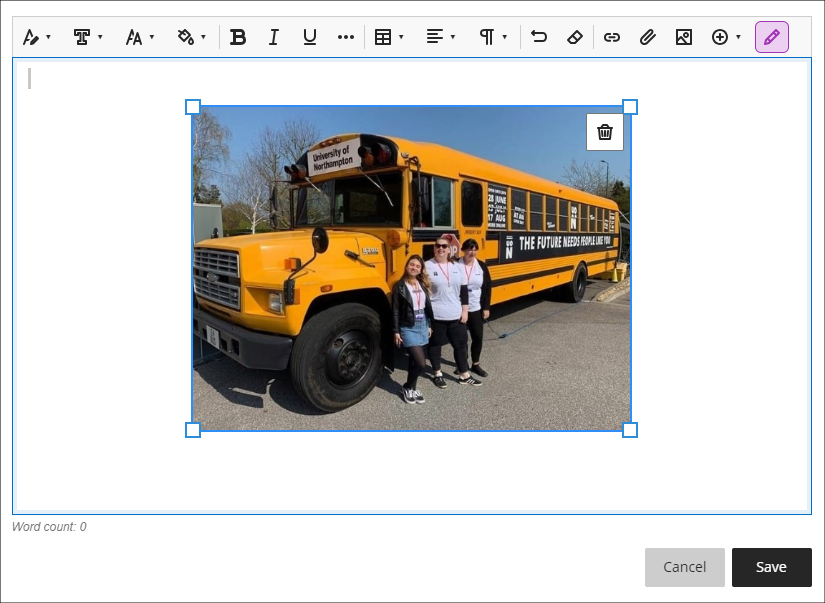

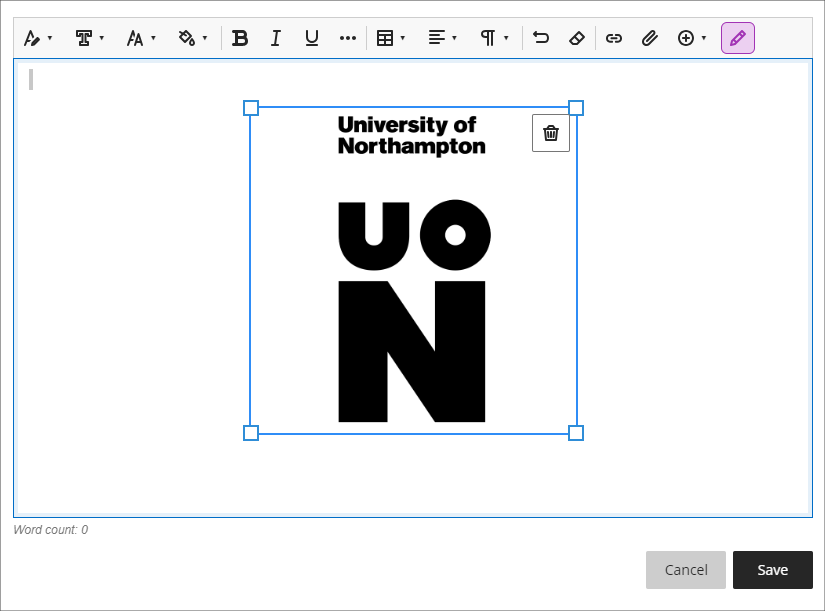

Improved image insertion tool

Following November’s upgrade, the Ultra rich text editor will have a dedicated button for image insertion. Previously, images were inserted using the attachment button. As well as being more intuitive, the new image insertion tool will allow staff to zoom into images and adjust their aspect ratio before insertion. Once inserted, images can be resized using the grab handles on the image.

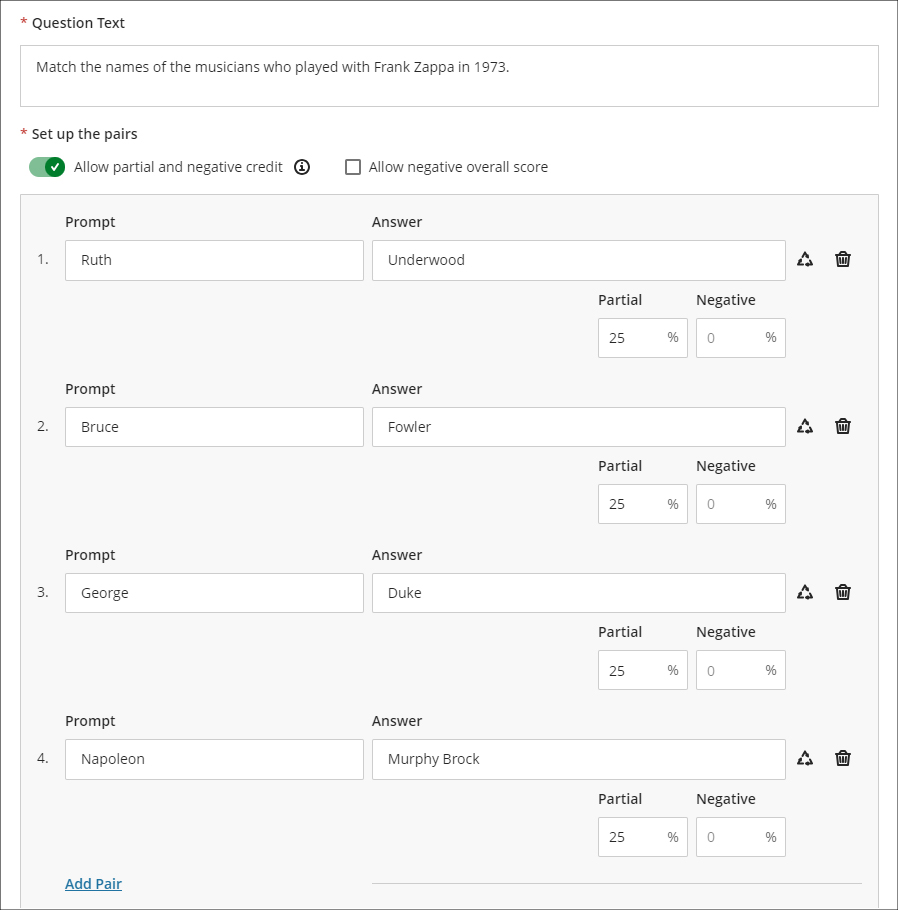

Improvements to matching questions in tests

Building on last month’s upgrade, which improved multiple choice questions in Ultra tests, the November upgrade improves matching questions in tests.

When using matching questions, the options to allow partial and negative credit, and to allow a negative overall score, are now easier to select. Partial and negative credit are on by default, and credit is automatically distributed equally across the answers.

‘Additional answers’ (i.e., answers for which there is no corresponding prompt) have been renamed ‘Distractors’ to more accurately reflect their function in matching questions.

In the above example, students would have seven possible answers to match to four prompts, and would score 25% of the total value of the question for each correct match.
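The arithmetic in that example can be sketched as follows. This is a minimal Python illustration of equal credit distribution across prompts, not Blackboard's implementation; the function name and the chemistry prompts are hypothetical, and, as a simplifying assumption, negative credit for incorrect matches is not modelled (a wrong match or a distractor simply earns nothing).

```python
def matching_score(question_points, correct_pairs, student_pairs):
    """Score a matching question whose credit is split equally across prompts.

    correct_pairs: prompt -> correct answer.
    student_pairs: prompt -> answer the student chose.
    Hypothetical helper; negative credit for wrong matches is not modelled.
    """
    # Each prompt is worth an equal share: 25% each when there are 4 prompts.
    per_prompt = question_points / len(correct_pairs)
    # Distractors never appear as a correct answer, so choosing one earns nothing.
    hits = sum(1 for prompt, answer in correct_pairs.items()
               if student_pairs.get(prompt) == answer)
    return per_prompt * hits


# Hypothetical question: 4 prompts, plus 3 distractors = 7 possible answers.
correct = {"H2O": "water", "NaCl": "salt", "CO2": "carbon dioxide", "O2": "oxygen"}
distractors = ["hydrogen", "nitrogen", "helium"]
options = list(correct.values()) + distractors

student = {"H2O": "water", "NaCl": "salt", "CO2": "helium", "O2": "oxygen"}
print(matching_score(10, correct, student))  # 7.5 (three correct matches at 25% each)
```

With four prompts, each correct match is worth a quarter of the question's value regardless of how many distractors are added.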

Please note that images, video, and mathematical formulae can also be used in matching questions, as well as in most other types of question in Ultra tests.

More information about using matching questions is available at: Blackboard Help – Matching Questions

More information about setting up tests in Ultra courses is available at: Blackboard Help – Create Tests

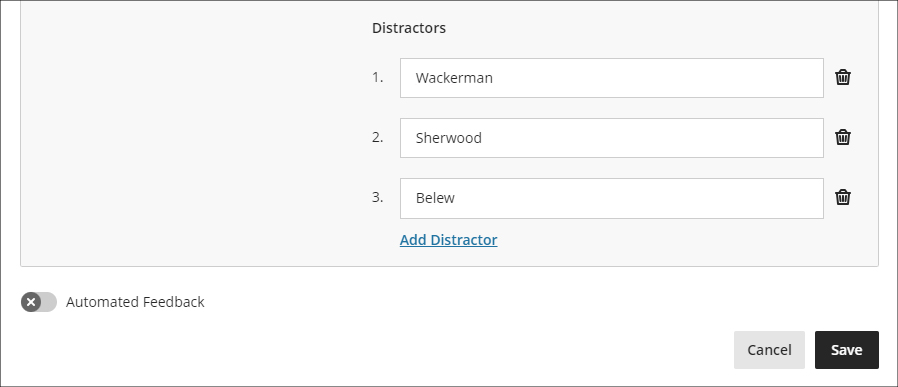

Improvements to journals navigation

After the November upgrade, the ‘Marks and Participation’ option in Ultra journals will be available via the tab navigation on the left-hand side of the screen, providing consistency of navigation with Ultra discussions and assignments.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

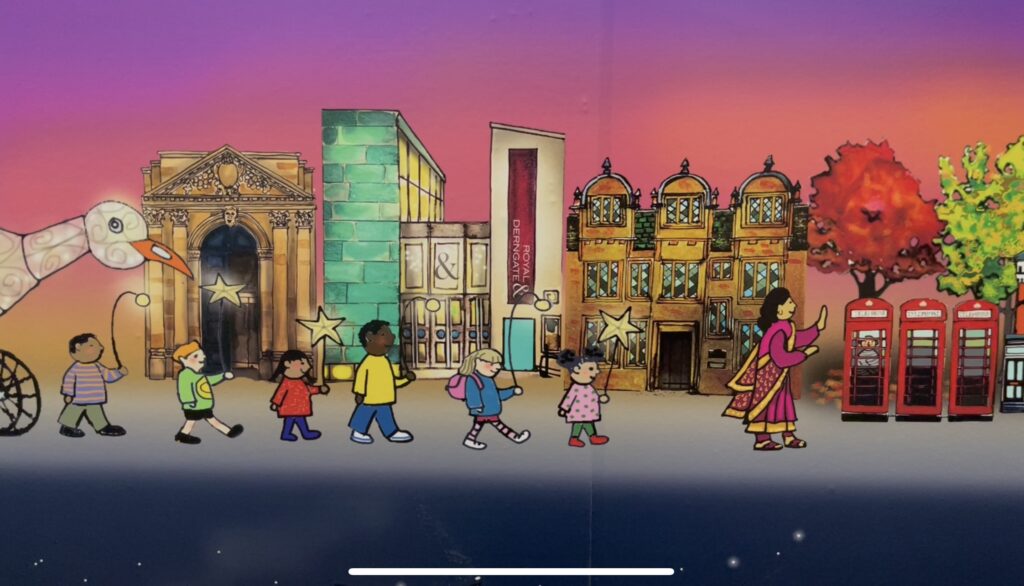

In the heart of the Waterside Campus, a new art installation by Senior Digital Marketing Lecturer and creative artist Kardi Somerfield is rewriting the rules of engagement, merging art and education to create a unique learning experience and visual identity for the newly refurbished Waterside bar. We recently had the opportunity to meet Kardi to discuss her work.

Kardi’s work is an extraordinary tribute to Northampton, stretching three metres in height and an impressive nine metres in length. With over 200 distinct locations and nearly 300 characters, this monumental piece captures the heart and soul of the town, and at its core it celebrates inclusivity in our local community.

Creating a work of these dimensions came with its own set of challenges. Moving from drawing on a digital screen to producing a huge-format vinyl meant creating a vast Photoshop file with over 1,000 layered elements, including buildings, characters, and wildlife.

One of the most intriguing aspects of Kardi’s creation is its interactive dimension. By integrating QR codes, she has created a bridge between the digital and the physical, allowing visitors to interact with the artwork in new ways. Blending digital and analogue technologies, the installation goes beyond visual spectacle to become a powerful pedagogical tool, particularly for storytelling in education.

Click here to watch the interview on Kaltura Player.

Learning Technologists Richard Byles and Kelly Lea, and Head of Learning Technology Rob Howe have published the outcomes of their research into student perspectives of Artificial Intelligence on the LTE (Learning Teaching Excellence) platform.

The insights presented in the report are derived from their student survey launched in May 2023, focusing on a range of topics including reasons and barriers for adopting AI tools, ethical considerations and thoughts on staff use to create new content.

The report provides a clear and concise presentation of their research results, discoveries, and conclusions, with input from Kate Coulson, Head of Learning and Teaching Enhancement, and Jane Mills, Senior Lecturer in Fashion. Its central theme is the importance of including student opinions when shaping AI guidance in education.

Richard and Kelly are currently looking for volunteers to take part in video interviews on uses of AI in the classroom. These interviews aim to shed light on how educators are introducing generative AI technologies to students, further enriching our understanding of AI’s role in education. Your voice could be an essential part of this ongoing research.

Report Link (PDF): Exploring Student Perspectives on Generative Artificial Intelligence Tools in Higher Education: A Survey-Based Study

R Byles, K Lea, R Howe

More Information

More information about the University’s position on AI is available from:

The new features in Blackboard’s October upgrade will be available from the morning of Friday 6th October. This month’s upgrade includes the following new features to Ultra courses:

- Send reminder from gradebook

- Partial credit auto-distribution for correct answers for Multiple Choice questions

- Delegated marking option for Ultra assignments

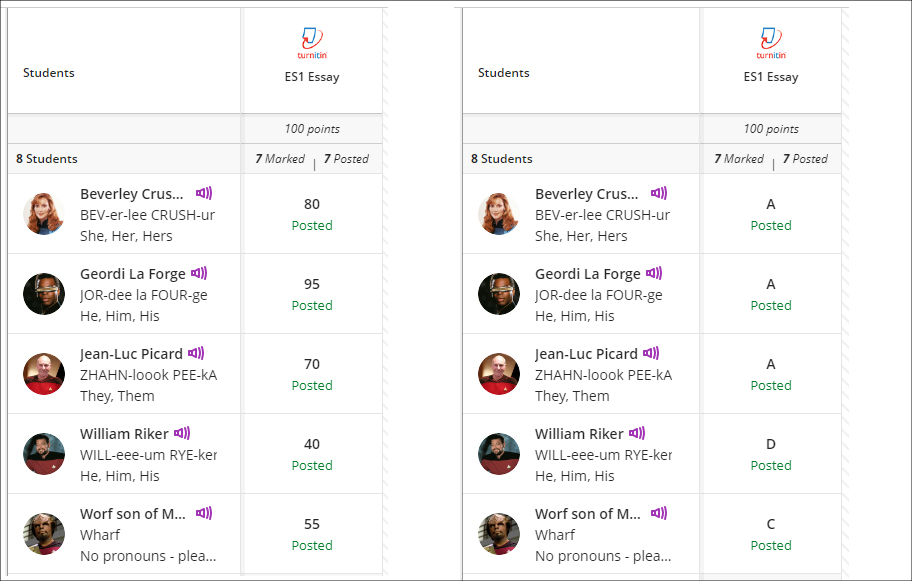

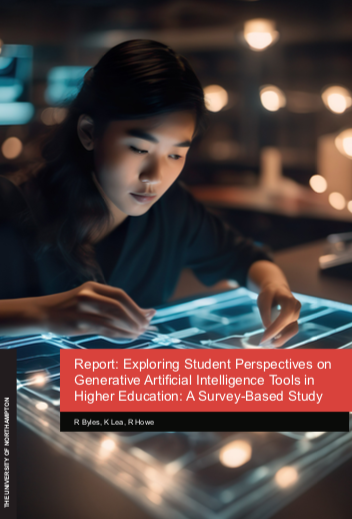

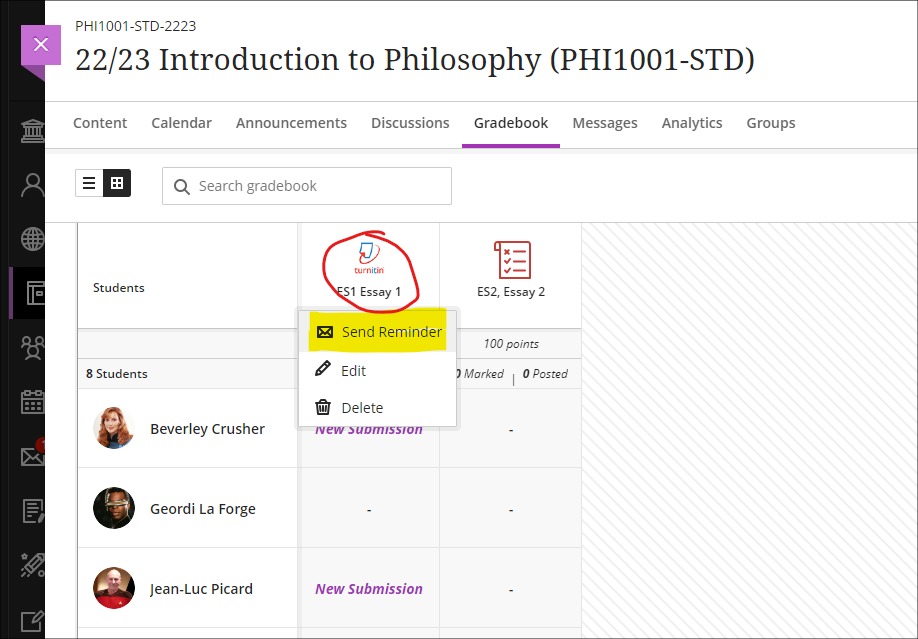

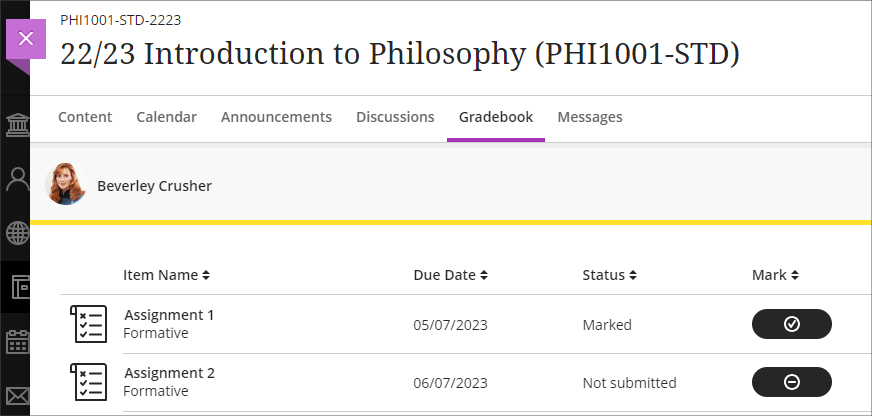

Send reminder from gradebook

New in the October upgrade is a ‘Send Reminder’ tool in the Ultra gradebook. However, please be aware that this tool is at a very early stage of development and, due to its limited functionality, staff are advised not to use it.

Instead of using the send reminder tool, staff are advised to continue contacting non-submitters via the student progress tool, as reminders sent this way are always sent as emails as well as Ultra messages:

- Identifying and contacting non-submitters (NILE assessment workflow guide)

- Identifying and contacting non-submitters (video guide – 1m 30sec)

In its current form, reminders sent via the new send reminder tool cannot be sent to students as emails; instead, students receive an Ultra message only. Additionally, reminders cannot be sent for assignments that have release conditions applied, and release conditions are applied automatically to all Turnitin assignments. Send reminder messages cannot be customised by staff, and students who receive a reminder will receive the following Ultra message:

Important Notification: Your assessment has not been submitted.

Your assessment named ‘[Name of Assessment]’ has not been submitted.

If you have already received mitigating circumstances or an extension for this assessment, you can ignore this message.

Important information about late submissions, extensions, and mitigating circumstances:

In accordance with University policy, you can submit assessments up to one week late, but your grade will be capped to a bare pass. Extensions are available through your module leader if you have unforeseen circumstances that prevent you from meeting an assessment deadline. The maximum extension period is two weeks, and grades for assessments which have an extension will not be capped. Please note that late submissions and extensions are only available at the first submission point.

If unforeseen circumstances mean that you will need longer than two weeks to submit your assessment, you may be able to apply for mitigating circumstances. If your application for mitigating circumstances is successful this will defer submission of your assessment to the resit submission point, so you can submit to this for an uncapped grade. If a mitigating circumstances application is approved at the resit submission point, it is recognition of extenuating circumstances at that time, but there is no further opportunity to resubmit the assessment.

More information, help and support:

More information about late submissions, extensions, and mitigating circumstances is available from: University of Northampton Guide to Mitigating Circumstances and Extensions.

If you need any other support regarding your assessment, please contact the module leader for help as soon as possible.

The deadline for the above-named assessment is shown below:

Due date: [Assessment due date]

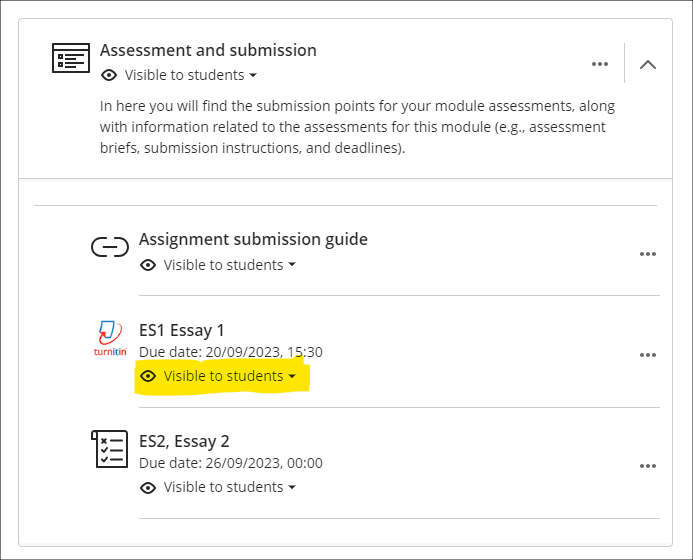

The send reminder tool can be accessed in the Ultra gradebook, from both list and grid view:

However, at present the assessment submission point must be set to ‘Visible to students’ for the message to be sent. If the assessment submission point is set to ‘Hidden from students’ or ‘Release conditions’, an error message is displayed when trying to send the message.

Once the send reminder tool reaches a sufficient level of functionality, we will update our guidance accordingly.

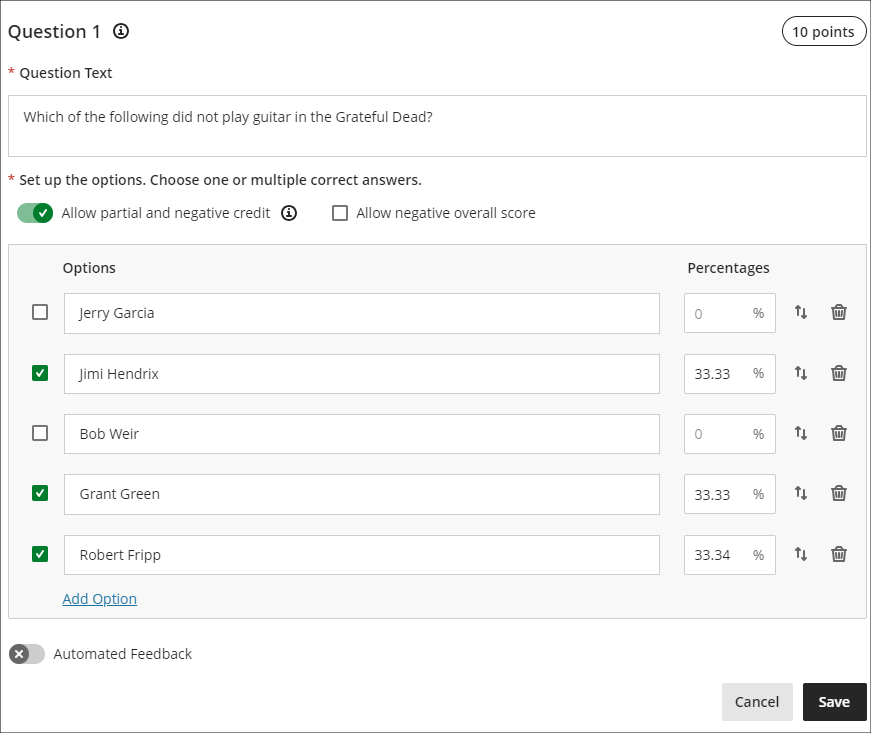

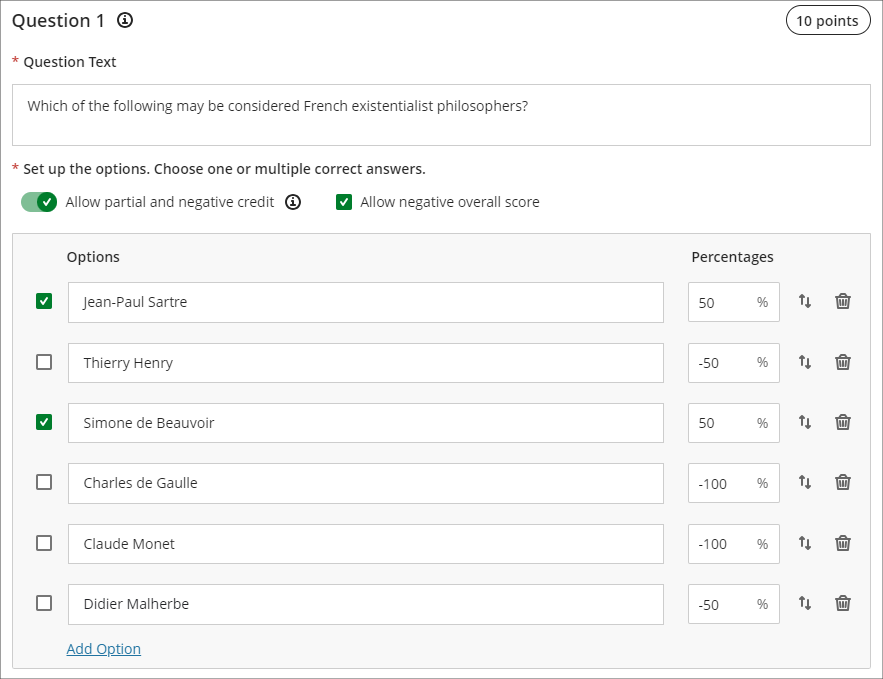

Partial credit auto-distribution for correct answers for Multiple Choice questions

Following the October upgrade, when setting up multiple option test questions with partial and negative credit allowed, percentages will be automatically allocated and equally distributed between the correct options. However, these can be overridden should staff prefer to weight the distribution unequally.
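The default allocation and a staff override can be pictured with the short Python sketch below. It is an illustration of the behaviour described above rather than Blackboard's own code: the function name and option labels are hypothetical, and the sketch assumes that only correct options receive an automatic percentage (incorrect options are left untouched).

```python
def distribute_partial_credit(correct_options, overrides=None):
    """Return the percentage of credit awarded for each correct option.

    Hypothetical helper: by default 100% is split equally across the
    correct options; staff may override the split to weight it unequally.
    """
    overrides = overrides or {}
    unknown = set(overrides) - set(correct_options)
    if unknown:
        raise ValueError(f"not a correct option: {sorted(unknown)}")
    # Default behaviour: equal shares for every correct option.
    share = 100 / len(correct_options)
    credit = {option: share for option in correct_options}
    # Staff-entered values replace the automatic ones.
    credit.update(overrides)
    return credit


print(distribute_partial_credit(["A", "C"]))                      # {'A': 50.0, 'C': 50.0}
print(distribute_partial_credit(["A", "C"], {"A": 70, "C": 30}))  # {'A': 70, 'C': 30}
```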

More information about setting up tests in Ultra courses is available from: Blackboard Help – Create Tests

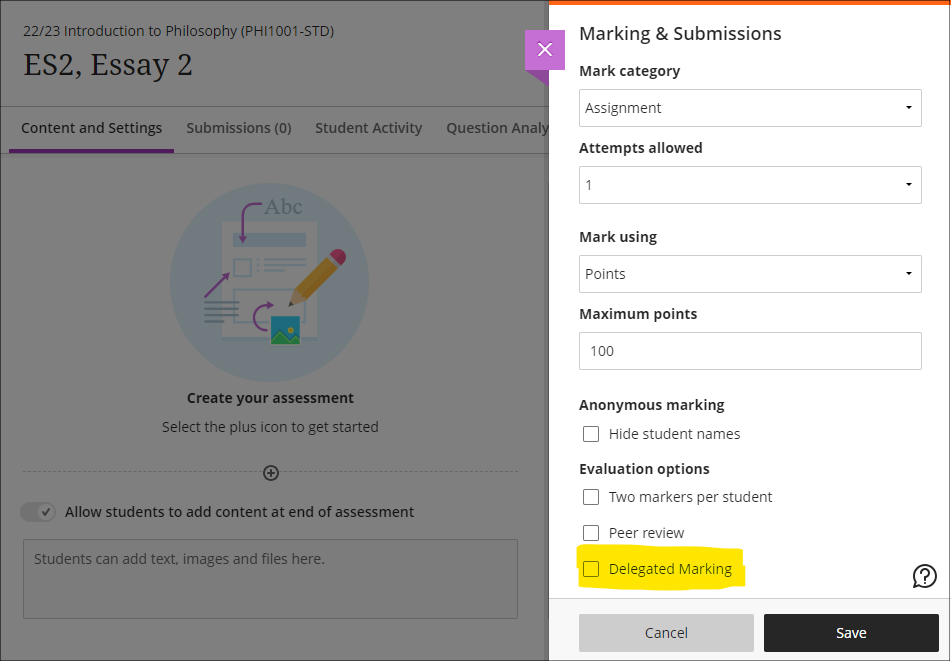

Delegated marking option for Ultra assignments

Delegated marking is already available with Turnitin assignments. After the October upgrade, staff marking Ultra assignments will also be able to create marking groups, so that each member of staff marking an assignment sees only the submissions they are marking.

Please note that this first version of delegated grading only supports assignment submissions from individual students. Tests, group assessments, and anonymous submissions are not supported at this time.

The option to allow delegated marking for Ultra assessments is available in the assignment settings.

After selecting the delegated grading option, select the appropriate group set. Staff can assign one or more members of staff to each group in the group set. If multiple markers are assigned to the same group, they will share the grading responsibility for the group members. Staff assigned to a group of students will only see submissions for those students on the assignment’s submission page, and they can only post grades for their assigned group members. Any unassigned staff enrolled in the course will see all student submissions on the assignment’s submission page, and will be able to post grades for all students.
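The visibility rules above can be summarised in a small Python sketch. It models the described behaviour only; the data layout (a group name mapped to a pair of marker and student sets) and the names used are hypothetical, not part of Blackboard.

```python
def visible_students(marker, all_students, marking_groups):
    """Return the students whose submissions `marker` can see and grade.

    marking_groups: group name -> (set of markers, set of students).
    Hypothetical structure used for illustration only.
    """
    assigned = [students for markers, students in marking_groups.values()
                if marker in markers]
    if not assigned:
        # Unassigned staff enrolled on the course see every submission.
        return set(all_students)
    # Markers sharing a group share its students; a marker in several
    # groups sees the students of all of them.
    return set().union(*assigned)


def can_post_grade(marker, student, all_students, marking_groups):
    # Grades can only be posted for students the marker can see.
    return student in visible_students(marker, all_students, marking_groups)


groups = {
    "Group 1": ({"Marker A", "Marker B"}, {"s1", "s2"}),
    "Group 2": ({"Marker C"}, {"s3", "s4"}),
}
students = {"s1", "s2", "s3", "s4"}
print(sorted(visible_students("Marker B", students, groups)))  # ['s1', 's2']
print(sorted(visible_students("Marker D", students, groups)))  # ['s1', 's2', 's3', 's4']
print(can_post_grade("Marker C", "s1", students, groups))      # False
```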

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

Robin Crockett (Academic Integrity Lead, University of Northampton) has run a small-scale study investigating two AI detectors with a range of AI-generated assignments, and has shared some of the initial results.

He used ChatGPT to generate 25 nominal 1000-word essays: five subjects, with five different versions of each subject. For each subject, he instructed ChatGPT to vary the sentence length as follows: ‘default’ (i.e., no instruction about sentence length), ‘use long sentences’, ‘use short sentences’, ‘use complex sentences’, ‘use simple sentences’.

The table below shows the proportion of each essay that was detected as AI-generated by two different products, Turnitin and Copyleaks:

| Sentence-length instruction | Essay 1 | Essay 2 | Essay 3 | Essay 4 | Essay 5 |
|---|---|---|---|---|---|
| Turnitin | | | | | |
| Default | 100% AI | 100% AI | 76% AI | 100% AI | 64% AI |
| Long | 0% AI | 26% AI | 59% AI | 67% AI | 51% AI |
| Short | 0% AI | X | 31% AI | 82% AI | 27% AI |
| Complex | 33% AI | 15% AI | 0% AI | 63% AI | 0% AI |
| Simple | 100% AI | 0% AI | 100% AI | 100% AI | 71% AI |
| Copyleaks | | | | | |
| Default | 100% AI at p=80.6% | 100% AI at p=83.5% | 100% AI at p=88.5% | 100% AI at p=81.3% | 100% AI at p=85.4% |
| Long | ~80% AI at p=65-75% | 100% AI at p=81.5% | ~95% AI at p=75-85% | 100% AI at p=79.1% | 100% AI at p=80.6% |
| Short | ~70% AI at p=66-72% | 100% AI at p=76.9% | 100% AI at p=87.3% | ~85% AI at p=77-79% | 100% AI at p=78.4% |
| Complex | 100% AI at p=72.9% | 100% AI at p=81.0% | ~90% AI at p=62-73% | 100% AI at p=77.7% | 0% AI |
| Simple | 100% AI at p=83.6% | ~90% AI at p=73-81% | 100% AI at p=95.2% | ~90% AI at p=76-82% | 100% AI at p=84.9% |

X = “Unavailable as submission failed to meet requirements”.

0% AI = a complete false negative.

Robin noted:

Turnitin highlights/returns a percentage of ‘qualifying’ text that it sees as AI-generated, but no probability of AI-ness.

Copyleaks highlights sections of text it sees as AI-generated, each section tagged with the probability of AI-ness, but doesn’t state the overall proportion of the text it sees as AI-generated (hence his estimates).

Additional reading: Jisc blog on AI detection

The new features in Blackboard’s September upgrade will be available from the morning of Friday 8th September. This month’s upgrade includes the following new features to Ultra courses:

- Email non-submitters – anonymous marking improvement to Turnitin in Ultra courses

- Progress tracking – automatically enabled in Ultra courses

- NILE Ultra Course Awards 22/23

- Online NILE induction for new students

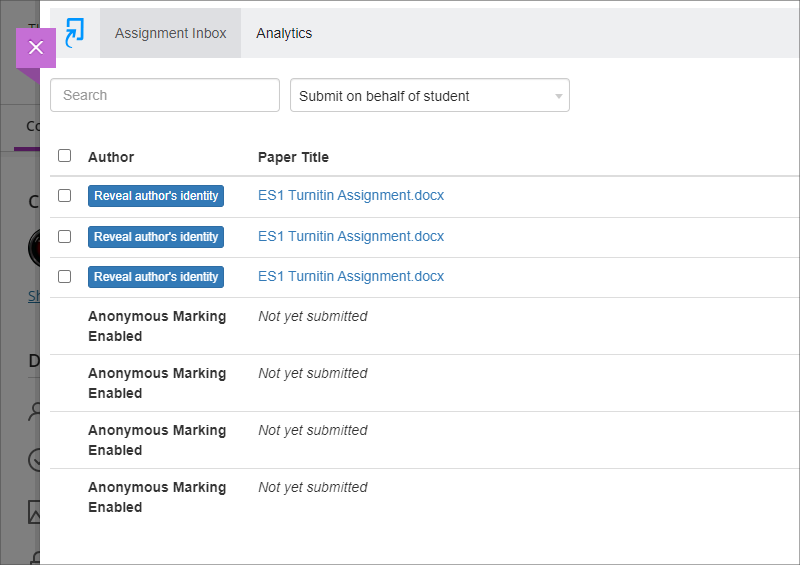

Email non-submitters: anonymous marking improvement to Turnitin in Ultra courses

Currently, when marking students’ anonymous Turnitin submissions, it is not possible to precisely determine who has and who has not submitted, which makes it difficult for staff to contact and support non-submitters.

With Turnitin assignments, the student progress indicator in Ultra courses displays one of three states, the current meaning of which is:

- Unopened (student has not submitted)

- Started (student has opened the assignment, and may or may not have submitted)

- Completed (student has opened the assignment, and may or may not have submitted)

At present, when a student opens a Turnitin assignment, the student progress indicator automatically changes from ‘Unopened’ to ‘Started’. (It is not possible for a student to manually change the student progress indicator from ‘Unopened’ to ‘Started’; this can only be done by opening the assignment.) However, in order to display ‘Completed’, each student who submitted the assignment has to manually change the progress tracking state from ‘Started’ to ‘Completed’. This means that while staff know that a student whose student progress indicator shows ‘Unopened’ has definitely not submitted, it is not possible to determine whether students whose student progress indicator shows ‘Started’ have submitted the assignment or only opened the submission but not submitted. Additionally, as a student can change the status from ‘Started’ to ‘Completed’ without submitting an assignment, the ‘Completed’ indicator does not provide a sufficient guarantee that a submission was actually made.

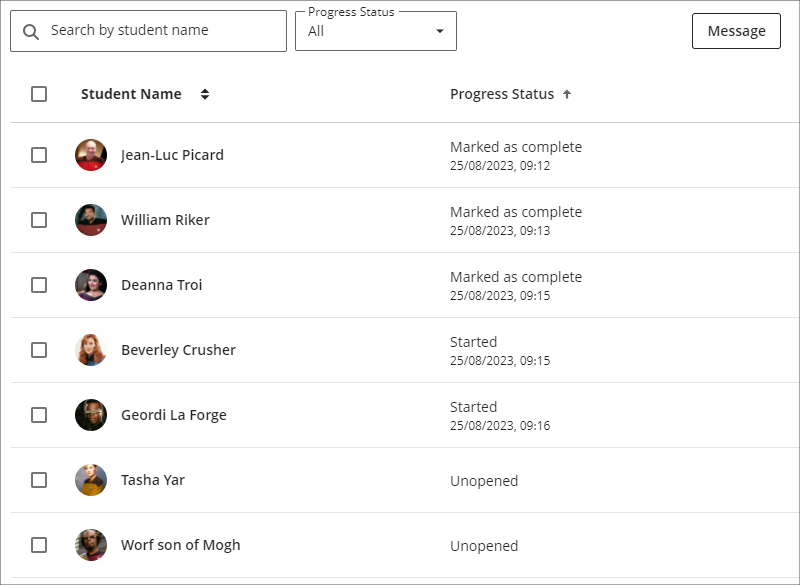

Following the September update, the way that the student progress indicator works will change, and will allow staff to know with certainty which students have and have not submitted. These progress states will effectively become locked to the submission status in Turnitin and will not be modifiable by students. Therefore, after the September update the meaning of the statuses will be:

- Unopened (student has not submitted)

- Started (student has opened the assignment, but has not submitted)

- Completed (student has submitted)
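Because each state now maps to exactly one submission status, picking out non-submitters becomes a simple filter. The Python sketch below is illustrative only: the enum, function name and student labels are hypothetical, and it assumes the post-upgrade meanings listed above.

```python
from enum import Enum


class Progress(Enum):
    # Meanings after the September upgrade, when the state is locked to the
    # Turnitin submission status and cannot be changed by students.
    UNOPENED = "Unopened"    # has not opened the assignment, not submitted
    STARTED = "Started"      # opened the assignment, but not submitted
    COMPLETED = "Completed"  # submitted


def non_submitters(progress_by_student):
    # Anyone not at COMPLETED has not submitted, so both UNOPENED and
    # STARTED students belong on the reminder list.
    return sorted(student for student, state in progress_by_student.items()
                  if state is not Progress.COMPLETED)


# Hypothetical progress summary for one anonymised Turnitin assignment.
summary = {
    "Student A": Progress.COMPLETED,
    "Student B": Progress.STARTED,
    "Student C": Progress.UNOPENED,
    "Student D": Progress.COMPLETED,
}
print(non_submitters(summary))  # ['Student B', 'Student C']
```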

For staff, this is a considerable improvement over Turnitin’s ‘Email non-submitters’ feature (which was withdrawn by Turnitin for assignment submission points set up after February 2022), as staff will now be able to see which students have submitted and which have not, and will be able to filter and sort the Gradebook and quickly send a message to all non-submitters. And, crucially, this will all be possible to do while not disclosing the identities of the individual authors when marking their work in Turnitin.

Following the September upgrade, staff will continue to see anonymised Turnitin submissions as normal in the Turnitin assignment inbox:

However, when looking at the progress summary for the assignment in the Ultra course, staff will be able to see the submission status for each student, and can use filtering and sorting to quickly select and message non-submitters. When using this feature to select and simultaneously message multiple students, each student will receive a private message which will not disclose the identities of the other recipients. As well as being able to read the message in NILE, students are also automatically emailed a copy of the message. Following the changes to University policy regarding student email addresses, from the 13th of September onward, copies of announcements and messages emailed from NILE courses will go to students’ UON email addresses (i.e., those ending @my.northampton.ac.uk) and not to their personal email addresses, e.g., addresses ending @gmail.com, @yahoo.com, @qq.com, etc.

You can find out more about setting up and marking Turnitin assignments in Ultra courses, and about messaging non-submitters at: NILE Assessment Workflows – Ultra Workflow 1: Turnitin

Progress tracking – automatically enabled in Ultra courses

Progress tracking is a helpful feature for students, as it lets them view their progress in Ultra courses. Progress tracking also provides useful insights for staff about how content in their Ultra courses is being used, and how students are getting on in their courses. When considered alongside other information, progress tracking can provide clues to staff about which students might be struggling and might benefit from additional support.

At present, progress tracking is off by default in Ultra courses and needs to be switched on manually in each course. In the days following the September upgrade, progress tracking will be automatically and permanently enabled in all new and existing Ultra courses.

Progress tracking in Ultra courses is a considerable improvement over what was available in Original courses, both in its scope and ease of access. Plus, unlike Original courses, which only provided data about students who had accessed the course using a laptop or desktop computer, the data provided about student progress in Ultra courses takes account of access via laptops/desktops, mobile devices using a mobile browser, and mobile devices using the Blackboard Learn app.

You can find out more about progress tracking at: Blackboard Help – Progress Tracking

NILE Ultra Course Awards 22/23 & 23/24

Did you put together a great NILE Ultra course in 2022/23? Do you know someone who did? Or, have you recently put together a great NILE Ultra course for 2023/24? If so, please consider making a nomination for the next round of Ultra Course Awards. Nominations are open until the 31st of December, 2023, and winners of Ultra Course Awards for their 2022/2023 and 2023/2024 courses will be formally announced at the University of Northampton Learning and Teaching Awards 2024:

Ultra Course Awards 22/23 & 23/24 – Make a nomination

Online NILE induction for new students

If your students are looking for some information about logging in to NILE, finding their way around, and understanding a bit more about how NILE works, you’ll be pleased to know that we’ve refreshed the student section of the Learning Technology Team website, which now includes an online induction to NILE for students.

The online NILE induction covers the following:

- What is NILE?

- Logging in to NILE

- Finding your way around NILE

- Personalising your NILE profile

- Accessing your NILE courses

- Understanding how a NILE course works

- Accessing content in NILE in alternative formats

- Submitting your assignments on NILE

- Improving your digital skills

- More information, help and support with NILE

You can view the online NILE induction pages at: NILE Introduction, Help & Support

And, of course, do feel free to add a link to this page in your NILE courses.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in Blackboard’s August upgrade will be available from the morning of Friday 4th August. This month’s upgrade includes the following new features to Ultra courses:

- Ability for staff to add images to learning modules

- New location for Ultra groups

- Improvements to messages tool

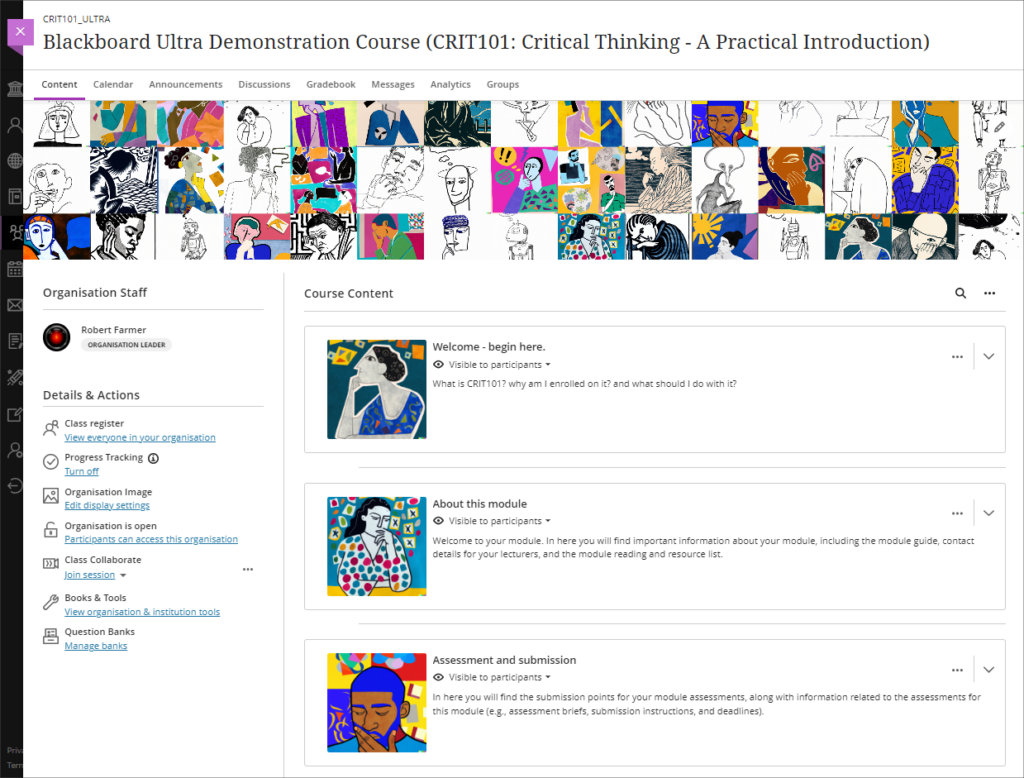

Ability for staff to add images to learning modules

Following feedback from staff about the need to make Ultra courses more visually engaging, the August upgrade will allow staff to add images to learning modules.

• Blackboard Ultra course with images added to learning modules.

For more information about customising the appearance of learning modules, please see: Blackboard Help – Create Learning Modules, Customise your learning module’s appearance

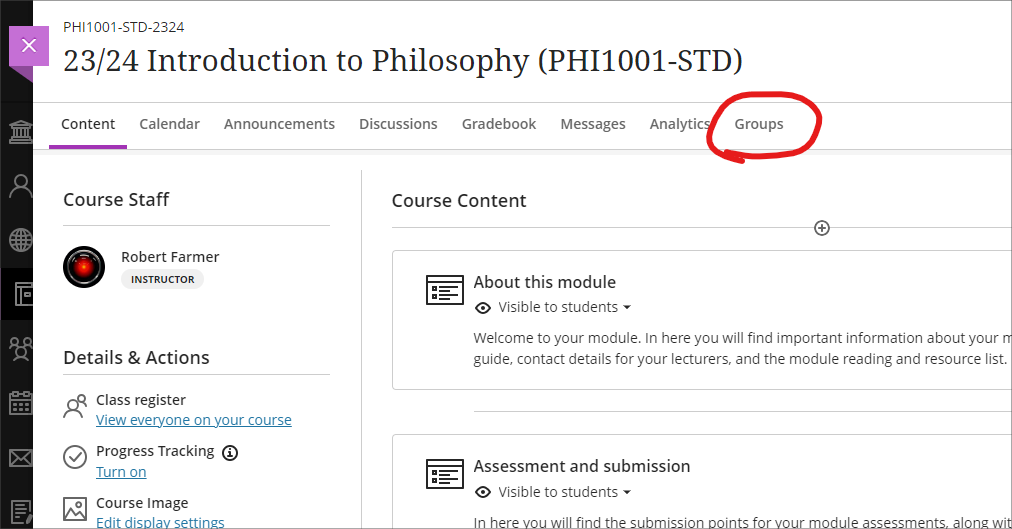

New location for Ultra groups

After the August upgrade, the groups tool in Ultra courses will move from the Details & Actions menu to the top menu.

• Ultra courses with new Ultra groups location highlighted

For more information about creating and managing groups, and about group spaces in Ultra courses, please see: Blackboard Help – Groups

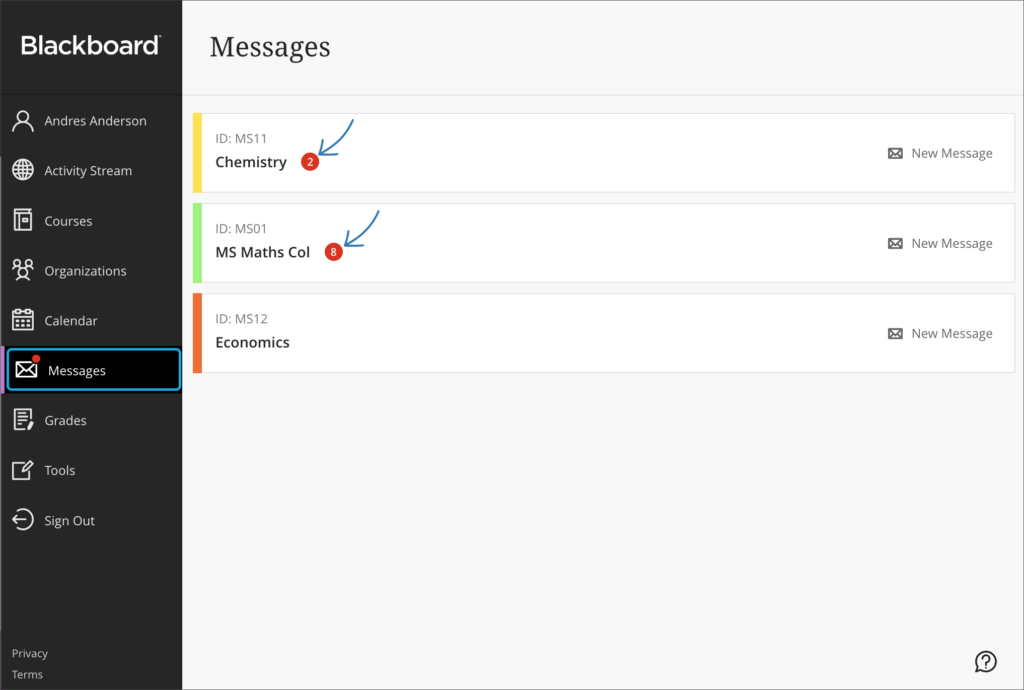

Improvements to messages tool

Prior to the August upgrade, although courses which contained unread messages were flagged, they were not sorted to the top of the list in the messages page. Following feedback from staff and students, courses with unread messages will now be automatically placed at the top of the list.

• Messages page with courses with unread messages sorted to the top of the list.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: https://libguides.northampton.ac.uk/learntech/staff/nile-help/who-is-my-learning-technologist

The Learning Technology Team would like to say a very big thank you and many congratulations to the four members of academic staff who received a NILE Ultra Course Award at the University’s Learning and Teaching Awards ceremony on Thursday 6th July.

This year’s winners were:

- Helen Caldwell & Joanne Barrow – EDUM074: International Perspectives on Education

- Alison Power – MID1028: Scholarly Practice in Midwifery

- Jodie Score – SLS1019: Introduction to Microbiology

The Ultra Course Awards recognise staff who have created excellent Ultra courses for their students, and each course submitted for an award was reviewed by a panel to ensure that it met the award criteria, which are that the course:

- Follows the NILE Design Standards (https://libguides.northampton.ac.uk/learntech/staff/nile-design/nile-design-standards)

- Is clearly laid out and structured via the use of learning modules or folders at the top level, and uses, where appropriate, sub-folders to organise content within the top-level learning modules or folders

- Contains a variety of content, including activities for students to take part in

Nominations for the next round of Ultra Course Awards will open in August 2023, and the winners will be announced at the 2024 UON Learning and Teaching Conference.

The last twelve months have seen a lot of changes to NILE, and particularly to Ultra courses.

Between August 2022 and July 2023 we’ve blogged about 65 upgrades to Blackboard, almost all of which have been upgrades to Ultra courses:

- Advanced scoring options in multiple choice and multiple option test questions (July 2023)

- Complete/incomplete grade schema and formative assessment option (July 2023)

- Resizing media option in Ultra documents (July 2023)

- Announcements moved to the top navigation bar (June 2023)

- Ultra tests: upload questions from a file (June 2023)

- Journals – submission status filters available when using assessed journals (June 2023)

- Drag and drop content into Ultra courses (May 2023)

- Email non-submitters of Blackboard Ultra assignments (May 2023)

- Improvements to hotspot questions in Ultra tests (May 2023)

- Ability to prevent backtracking in Ultra tests with page breaks (May 2023)

- Ability to reuse questions in Ultra question banks (May 2023)

- Improvements to Ultra rubrics (May 2023)

- Grading attempt selector improvements (May 2023)

- Ultra course content search (April 2023)

- Hotspot question improvements (April 2023)

- Multiple grading schemas (April 2023)

- Improved submission page sorting controls (April 2023)

- Discussion navigation improvements (April 2023)

- Quick access to student overview from multiple locations (April 2023)

- Ultra analytics improvement – deactivated students no longer show in the student progress reports (April 2023)

- Prevent editing or deletion of discussion posts (March 2023)

- Improved data and analytics in Ultra courses (March 2023)

- Improved attempt switching when grading student submissions with multiple attempts (March 2023)

- Polygon shape tool available when creating hotspot questions in Ultra tests (February 2023)

- Sort items by grading status in the Ultra gradebook (February 2023)

- Students can see other members of their group in Ultra courses (February 2023)

- Ally alternative format views count towards progress in progress tracking (February 2023)

- Prevent students from editing or deleting Ultra discussion posts after the due date (January 2023)

- Model answer question type supported in Ultra tests (January 2023)

- Improvements to Ultra test randomisation options (January 2023)

- Drag-and-drop content re-ordering improvements (December 2022)

- Course links (December 2022)

- Single student progress report (December 2022)

- Improvements for copying content (December 2022)

- Question banks descriptions and search (December 2022)

- Simpler exit actions for student preview (December 2022)

- Improvements to ‘needs grading’ count for Blackboard Ultra assignments (December 2022)

- Hotspot questions in Ultra tests (November 2022)

- Course activity report under the analytics tab in Ultra courses (November 2022)

- Student course access data available in student grades overview (November 2022)

- Improved grade history report available in the Ultra gradebook (November 2022)

- ‘Sticky’ Ultra gradebook view (November 2022)

- Turnitin icon is displayed in Turnitin assignments in Ultra and Original courses (November 2022)

- All content marker icons appear alongside the content item in Ultra courses (November 2022)

- Improvements to unread messages indicator (November 2022)

- Student engagement reports for course content in Ultra courses (October 2022)

- Improved access to student grades overview in the gradebook in Ultra courses (October 2022)

- Enhanced auto save capability in the content editor in Ultra tests & assignments (October 2022)

- Maths formulas accessible with screen readers in Ultra courses (October 2022)

- Course name appears in browser tab in Ultra courses (October 2022)

- Add your preferred pronouns and name pronunciation to your NILE profile (September 2022)

- Copy rubrics into Ultra courses (September 2022)

- Ultra courses cannot be accidentally copied into themselves (September 2022)

- Improvements to question banks sorting controls (September 2022)

- Discussion replies in Ultra courses are available from the grading page (September 2022)

- Improvements to the Ultra test timer for students (September 2022)

- Microsoft Immersive Reader included as one of Ally’s alternative formats (September 2022)

- Turnitin problem in Original courses fixed (August 2022)

- Ability to manage question banks in Ultra courses (August 2022)

- Additional exceptions available for Blackboard assignments and tests in Ultra courses (August 2022)

- Improved Gradebook filters in Ultra courses (August 2022)

- Improved auto-save functionality for students when using the content editor in Ultra tests and assignments (August 2022)

- Improvements to LaTeX equation support in Ultra courses (August 2022)

- Import/Export group sets and members in Ultra courses (August 2022)

- Improvement to the Messages tool in Ultra courses (August 2022)

More information about all of the upgrades to Blackboard is available here: https://blogs.northampton.ac.uk/learntech/category/nile-update/

The new features in Blackboard’s July upgrade will be available from the morning of Friday 7th July. This month’s upgrade includes the following new features to Ultra courses:

- Advanced scoring options in multiple choice and multiple option test questions

- Complete/incomplete grade schema and formative assessment option

- Resizing media option in Ultra documents

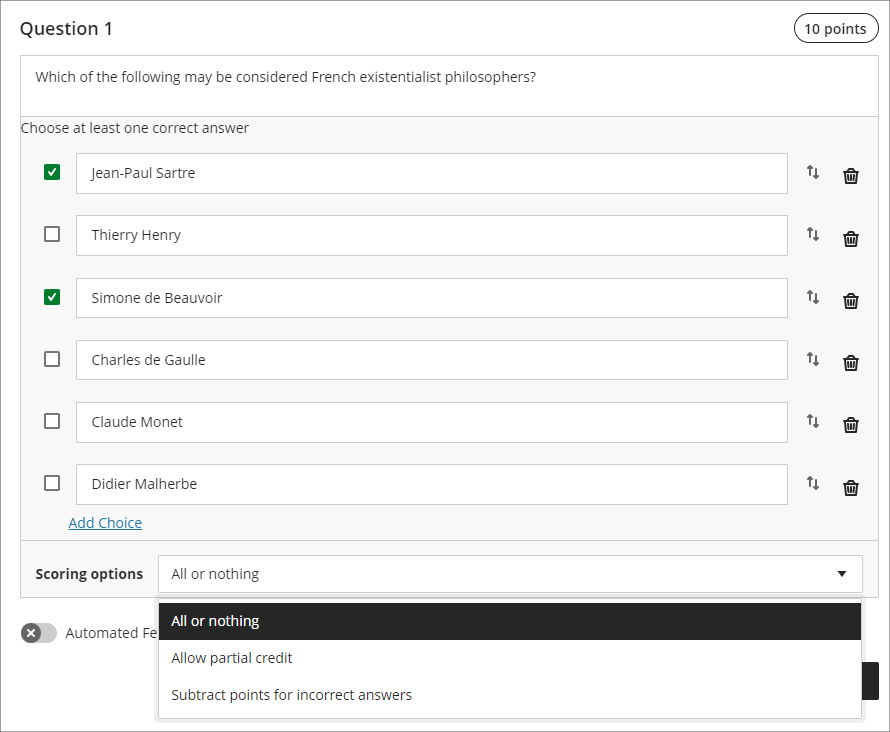

Advanced scoring options in multiple choice and multiple option test questions

Currently, when setting up multiple choice and multiple option test questions staff can select from the following options:

- All or nothing – students must select all the correct answer choices to receive credit. If a student selects one or more incorrect answer choices, they earn no points;

- Allow partial credit – students receive partial credit if they correctly answer part of the question;

- Subtract points for incorrect answers – students will have points subtracted for incorrect answer choices, although the overall question score will not be less than zero.

Following the July upgrade, staff will have more control over the way that scores for each question are compiled, and will be able to:

- Define a positive percentage value to award credit for a correct answer;

- Enter a negative percentage value (up to a maximum of -100%) to subtract credit for an incorrect answer;

- Allow a negative overall score for the question.

In the following example, it is possible to score from a maximum of 10 points (by selecting only the first and third answers) to a minimum of -30 points (by selecting only the second, fourth, fifth, and sixth options). A student selecting all six options would score -20 points.
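The screenshot's exact percentages are not reproduced here, so the Python sketch below uses one hypothetical set of values that is consistent with the totals described: a 10-point question where options 1 and 3 are worth +50% each and options 2, 4, 5 and 6 are worth -75% each. The function is an illustration of the scoring rules listed above, not Blackboard's implementation.

```python
def score_question(points, option_credit, selected, allow_negative=True):
    """Score one question from per-option percentages.

    option_credit: option number -> percentage of the question's points
    (positive for correct answers, down to -100 for incorrect ones).
    Hypothetical helper for illustration.
    """
    if any(pct < -100 for pct in option_credit.values()):
        raise ValueError("negative credit cannot exceed -100%")
    total = sum(points * option_credit[option] / 100 for option in selected)
    # Without 'Allow a negative overall score', the question floors at zero.
    return total if allow_negative else max(total, 0)


# Hypothetical values that reproduce the totals in the example above.
credit = {1: 50, 2: -75, 3: 50, 4: -75, 5: -75, 6: -75}
print(score_question(10, credit, {1, 3}))              # 10.0 (maximum)
print(score_question(10, credit, {2, 4, 5, 6}))        # -30.0 (minimum)
print(score_question(10, credit, {1, 2, 3, 4, 5, 6}))  # -20.0 (all six options)
```

Setting `allow_negative=False` shows the effect of leaving the negative overall score option unticked: the minimum score becomes zero.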

More information about using tests in Ultra courses can be found at: Blackboard Help – Tests, Pools, and Surveys

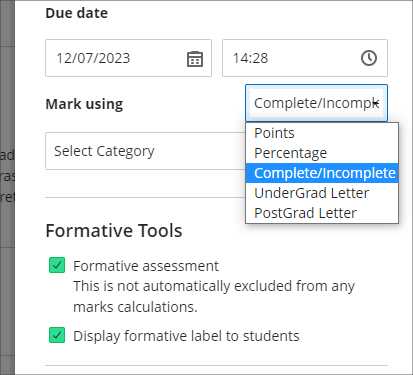

Complete/incomplete grade schema and formative assessment option

Following the July upgrade, staff will be able to use a complete/incomplete schema when assessing student work that does not require a grade to be given. The complete/incomplete schema still requires an assessment to be given a point value, but any amount of points given, including zero, will result in the assessment grade awarded being ‘complete’.

When a value is provided, the mark is displayed as a tick to show that the assessment has been completed.

The July upgrade also includes the option to mark assessments as formative. When this option is selected, the word ‘Formative’ is displayed alongside the assignment in the gradebook. Staff have the option to display ‘Formative’ to students also.

The complete/incomplete schema and the ability to note assessments as formative can be used independently or together, and can be used with both Turnitin and Blackboard assessments.

The following screenshot shows the student view of the gradebook, where two assignments have been marked as formative and the complete/incomplete schema has been used.

Resizing media option in Ultra documents

After the July upgrade, when adding an image to an Ultra document staff will be able to resize the media using the corner handles that appear when the document is being edited and the image is selected.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: https://libguides.northampton.ac.uk/learntech/staff/nile-help/who-is-my-learning-technologist
