Blackboard’s AI Design Assistant, launched last December, has quickly proven to be a helpful tool for developing content within NILE courses. Our early data shows that, although most users still create tests without it, test and quiz creation is where the AI Design Assistant is used most. Senior Lecturer in Nursing Julie Holloway shares her positive experience of using AI to support her pharmacology students on a prescribing programme.

“We are using AI to help generate new exam questions, particularly in relation to pharmacology content,” Julie explains. Due to the parameters of professional regulation within independent prescribing, she’s unable to provide students with past exam papers for their revision. Instead, AI has allowed her to create supplemental revision questions directly linked to each pharmacology lecture, providing students with valuable practice material aligned with their coursework.

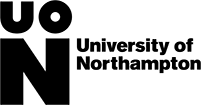

One notable feature in the AI design assistant enables users to select existing course materials for the AI to draw from when generating content. Julie has leveraged this by selecting specific pharmacology materials, allowing her to create questions that closely reflect the lectures and give students an efficient tool for self-assessment. “The process was easier than I originally thought,” she adds.

Beyond efficiency, Julie has also found that the AI can inspire new ways of phrasing questions. “Exam questions can become repetitive,” she says, and the AI’s suggestions help to counter this while enhancing the student experience by supplementing their revision.

On the limitations of using AI to create test questions, Julie points out that the AI occasionally generates questions that aren’t entirely relevant. However, she is optimistic: “I think this will improve as we get more experienced with working with AI and search terms, etc.”

Would she recommend this approach to other educators? “Absolutely,” she says, encouraging colleagues to explore the tool themselves. “Just give it a try—it’s not as scary as you think!”

If you would like support using any of the AI Design Assistant tools in NILE, please contact your Learning Technologist. If you are unsure who this is, please select this link: https://libguides.northampton.ac.uk/learntech/staff/nile-help/who-is-my-learning-technologist

For more information on the AI Design Assistant, please select this link: https://libguides.northampton.ac.uk/learntech/staff/nile-guides/ai-design-assistant

Breaking Boundaries: Engaging Students with Clear and Structured Course Design

This summer, Kiran Kaur, a lecturer in the Faculty of Business and Law, was recognised with a NILE Ultra Course Award for her exceptional work on BUS2900: Research, Trends, and Professional Directions, which she developed during the 2023-24 academic year. The NILE Ultra Awards celebrate excellence in course design, recognising modules that demonstrate high standards of structure, accessibility, and student engagement.

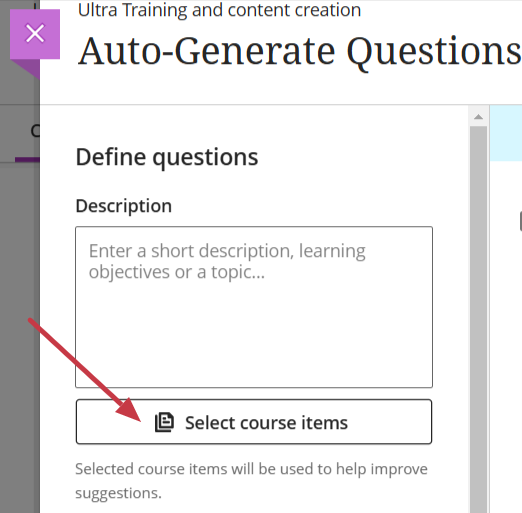

Kiran’s success with the 2023-24 module laid the foundation for her work this year when she took on a new module at short notice. She applied the same principles she used in her award-winning course—prioritising structure, clarity, and accessibility—and incorporated innovative tools like Padlet and emojis. These changes have had a powerful impact on student engagement, with the students in this new 2024-25 module providing glowing feedback. One student even described it as “the best module ever on NILE,” noting how easy it was to navigate and access resources.

Innovative Use of Emojis and Padlet for Engagement

A standout feature of Kiran’s teaching approach is her creative use of emojis to enhance course content. “I used emojis to help break up the text and make the material feel a little more fun and approachable for students. It’s a simple touch, but it got great feedback,” Kiran explained. This added a layer of visual clarity that students enjoyed.

In addition, Kiran made extensive use of Padlet, a collaborative tool that students used throughout the module to share ideas and engage with each other. “Padlet really worked to get students engaging with each other. It’s not just a tool for posting comments; it’s a space where students can collaborate in real time, share their thoughts, and build a sense of belonging,” Kiran noted. Padlet’s role in the module went beyond that of a traditional discussion board, helping students feel more connected despite studying in an online environment, and this interactivity was a key factor in building student engagement.

Sharing Best Practice and Building Consistency

Kiran plans to share her approach at an upcoming subject staff development day. She is eager to promote consistency across her programme, ensuring that students enjoy a seamless and cohesive learning experience throughout their three years of study. “It’s about fostering a culture where we share what’s working well, so that students benefit across the board,” Kiran explained.

Reflecting on her own experience, she encourages her colleagues to consider nominating themselves or each other for future NILE Ultra Course Awards. She credits her own nomination to a colleague’s encouragement and is now keen to inspire others to recognise the impact of their own teaching practices. “I wouldn’t have even thought to put myself forward if a colleague hadn’t mentioned the award. Now, I want to help others see the value in recognising their own achievements,” Kiran added.

Looking Forward

Kiran continues to apply her proven strategies to her new modules, maintaining her commitment to clear, structured design and student engagement. Her success demonstrates how thoughtful course development and the use of innovative digital tools can greatly enhance the student experience. “For me, it’s about making sure that the students have the best possible experience. If they’re engaged and able to access the materials easily, they’ll get more out of the course,” she said.

Kiran’s story serves as an inspiration to her colleagues at the University of Northampton, illustrating how collaboration, innovation, and sharing best practices can lead to great results. Congratulations again to Kiran Kaur for her NILE Ultra Course Award, and we look forward to seeing her continued success!

NILE Ultra Course Awards 2025

Keep an eye out in the new year for the 2025 NILE Ultra Course Awards.

Assignment submission time is always stressful for students. They face well-known challenges: decoding assignment briefs, managing multiple assignments, doing all the work that goes into completing them, getting the quotes and references right, and then the anxious wait for marks and feedback.

However, one potentially stressful stage that sometimes gets overlooked is the process of actually submitting the assignment. While this might seem like a minor step, it is a very important one, and some students do struggle with it, especially if it is their first assignment or if it uses a new or unfamiliar submission process, e.g., a video assessment. Additionally, contrary to the popular myth, young people are not ‘digital natives.’ Many students come to university with low levels of digital ability and confidence; for many of our students NILE will be the first VLE they have ever encountered, and electronic assignment submission will be entirely new to them.

An excellent way to pre-emptively de-stress the assignment submission process is to adopt the view that it’s best to teach your students how to do all the things that you want them to do, including how to submit an assignment, and that’s exactly what the ITT (Initial Teacher Training) team do. In this guest post, Helen Tiplady, Senior Lecturer in Education (ITT Science), shares her approach to supporting students with the assignment submission process.

Here’s Helen:

Supporting students to submit their digital assessments correctly.

It may be due to our Primary school training backgrounds, but tutors in the Initial Teacher Training (ITT) team often share ‘What A Good One Looks Like’ with our students – otherwise fondly known as a ‘WAGOLL’.

One example I’d like to share with you was from a Level 4 science module (ITT1042) where students needed to complete a digital assessment piece. The premise was that they were planning a talk to a group of governors or sharing ideas at a staff INSET training day. The students needed to create a PowerPoint presentation along with their ‘speech’ written in the notes section. They then converted this to a PDF and uploaded this to the Turnitin submission point.

Although we provide detailed, ‘step-by-step’ notes accompanied by screenshots for the students to follow as part of our assignment guidance, we have found that the most effective way for our students to learn to upload their digital assessments correctly is through practice.

We set aside dedicated time during one of our learning events when students can watch the tutors demonstrate the steps to a successful submission (see Figure 1 below). We then ask the students to make an individual draft submission while the tutors are available to support them and help with any issues. Finally, we ask the students to ‘teach each other’ how to upload their assessment correctly to Turnitin.

This final step is crucial, as it allows the students to recall the steps more successfully at a later date. After all, as the saying commonly attributed to Confucius goes, “I hear, I forget. I see, I remember. I do and I understand.”

| Step | Description |
| --- | --- |
| Step 1 – Model | Show the students the stages to submit their digital assessment correctly. |
| Step 2 – Practice | Let the students submit a draft submission. |
| Step 3 – Tell | Ask the students to tell someone the stages they have learnt. |

Figure 1: How to support students to upload digital assessments successfully

So, in summary, try to find some ring-fenced time in one of your classes for the students to do a trial run of submitting their digital assessments. Choose a time when the stakes are low and there is no looming deadline. And remember: the more prepared the students feel, the easier they will find it to submit their digital assessments correctly the first time.

Interviews by Learning Technologist Richard Byles with presenters and exhibitors at Merged Futures 6, the 6th annual innovation showcase event, held in the UON Learning Hub on Friday 14th June 2024.

In this short film, Dr Mu Mu, Programme Leader for the AI and Data Science course, and students discuss a new project that aims to improve student access to information through the development of a new AI chatbot.

Dr Mu Mu explains that students often have common questions about their schedules, deadlines, and accommodation. To address these needs, second-year students are tasked with creating an AI chatbot. Three BSc Artificial Intelligence & Data Science students share insights into the development process.

Dr Mu Mu emphasises the broader learning outcomes: “It’s not only about the technical challenges, but also thinking about ethics, legal issues, and how to make the chatbot more personalised.”

The practical experience gained from this project has helped students secure placements and internships with prestigious UK organisations. This project exemplifies how our programme not only equips students with technical expertise but also prepares them to navigate and address real-world challenges.

In this short video, UON Learning Development tutor Anne-Marie Langford discusses her work using generative AI to produce sample passages of academic writing for analysis and refinement in development workshops.

Anne-Marie notes that using AI-generated text can prompt students to critique academic writing, encouraging them to develop higher-order thinking skills. This proves particularly valuable when scrutinising the shortcomings of AI-generated text: generative AI tools can be useful for identifying and presenting knowledge but are less adept at applying, analysing and evaluating it.

While recognising the time-saving potential of chatbots such as ChatGPT and their uses in enhancing student learning, she underscores the limitations of generative AI in academic writing and referencing. Anne-Marie emphasises the importance of students adopting a critical, ethical and well-informed approach to using generative AI, urging them to cultivate their own critical voices and refine their skills.

By incorporating text from generative tools into her sessions, Anne-Marie exemplifies the advantages of modelling critical use of generative AI with students.

In 2023 the University appointed its first student Digital Skills Ambassador (DSA), a role designed to let students get digital skills support from other students. While it’s often assumed that most people, especially young people (the so-called ‘digital natives’), are now confident and competent users of digital systems, the reality is that some students come to university without the basic digital skills they need to flourish on their courses. The University of Northampton is rightly proud of the excellent digital facilities that support teaching and learning here but, mindful of the pernicious effects that the digital divide can have in education, it created the student DSA role so that no student is left in the digital darkness. To understand a little more about what it means to be a DSA, we interviewed the current incumbent, Faith Kiragu, and asked them to explain in their own words how the role works.

1. Can you tell me a little about you and your role? How does the support work?

“As the Digital Skills Ambassador, my role primarily revolves around providing support and guidance to fellow students on various aspects of digital skills, with a focus on Microsoft Office packages, NILE (Northampton Integrated Learning Environment), the student Hub, LinkedIn Learning, and related queries.

Students can seek my help by booking appointments through the Learning Technology platform. On the platform, they fill out a form briefly describing their query. After submitting it, they receive a confirmation email containing the details of their appointment. Additionally, to ensure they do not miss their session, students receive a reminder the day before their scheduled appointment. During the session, I address their queries, provide guidance, and offer practical assistance to help them navigate any challenges they may encounter with digital tools and platforms. My aim is to empower students with the digital skills they need to enhance their academic journey and future career prospects.”

2. What are the common support requests and how do you support these?

“The most common support requests I receive are related to navigating NILE, submitting assignments, accessing online classes on Collaborate, and Microsoft PowerPoint tasks like adding images and textboxes.

To support these requests, I provide personalized guidance during one-on-one appointments. I offer step-by-step demonstrations, share relevant resources such as LinkedIn Learning, and address specific queries to ensure students feel confident handling these tasks on their own. Additionally, I offer troubleshooting assistance and encourage students to practise these skills independently to improve their proficiency over time.”

3. Is the support used by students across all courses, or some areas more than others?

“Yes, I have noticed that more students from health-related courses seek digital skills support than students on other courses, with Public Health being the course from which I have seen the most students. Students from the Faculty of Business and Law come a close second.”

4. Do you have any (anonymous) examples of how you have helped students with their problems?

“A student asked for help with accessing their online classes on Collaborate via NILE. During our appointment, I guided them through the process of navigating to the correct module on NILE, locating the scheduled Collaborate session, and joining the virtual classroom. By the end of the session, the student could successfully participate in their online class without further difficulties.

Another student sought help creating a presentation in Microsoft PowerPoint, specifically needing guidance on how to add images and textboxes effectively. I provided a step-by-step demonstration of inserting images into slides, resizing and positioning them, and formatting textboxes for content and captions. Additionally, I shared tips on using PowerPoint’s features to enhance visual appeal and maintain a cohesive layout throughout the presentation. I also supported the student in accessing LinkedIn Learning, and the student left the session equipped with the skills and confidence to complete their assignment using PowerPoint effectively.”

5. What do you think are the main benefits to students who have received support?

“The support I offer to students entails providing guidance and assistance with various digital tools and platforms, including NILE, Microsoft PowerPoint, and Collaborate. Through personalized appointments, students receive practical help in navigating these systems. This support not only enhances their digital skills but also boosts their confidence in engaging with coursework effectively. As a result, students experience improved academic performance and save valuable time by overcoming challenges efficiently. Furthermore, the support empowers students to take ownership of their learning journey, fostering independence and lifelong learning skills. Overall, the support provided equips students with the necessary resources and confidence to succeed academically in today’s digital-centric educational landscape.”

6. What have you learnt from your time in the role?

“In my role as the Student Digital Skills Ambassador, I have learned invaluable lessons that have enriched both my technical and interpersonal skills. Effective communication has been paramount as I translate complex technical information into accessible guidance for students with varying levels of digital literacy. Adaptability has been key as I tailor support to accommodate diverse learning styles and preferences. Through addressing queries, I have honed my problem-solving abilities while cultivating patience and empathy for students’ individual challenges. Additionally, this role has emphasized the importance of continuous learning, prompting me to stay updated on emerging technologies and digital trends. Overall, my experience has deepened my understanding of digital tools and platforms while enhancing my ability to support others in their learning journey, fostering a collaborative and empowering environment for student success.”

This short film features three BA Fashion, Textiles, Footwear & Accessories students discussing their experiences using Generative AI (GAI) in their projects. The students demonstrate diverse applications of GAI, highlighting how they tailor the technology to their individual creative needs.

The film features Subject Head Jane Mills, who discusses the potential of AI to support students, and outlines the introduction of a new AI logbook – designed to provide a framework for students to confidently explore and utilize GAI for brainstorming and research purposes.

In this condensed talk from the Vulcan Sessions on 26/01/24, Senior Lecturer in Education David Meechan discusses the opportunities and considerations of using AI in education.

He introduces Generative Artificial Intelligence (GAI) as a diverse and constantly evolving field without a consistent definition among scholars, and shares personal examples of how GAI can support students by scaffolding their learning and reducing the initial cognitive load through the creation of basic first drafts.

David explains, ‘I’m a big believer in experiential learning, providing children, and now students, with experiences they can build on.’ He therefore advocates the use of GenAI tools, which offer ‘varied, specific, and potentially creative results, revolutionising education and supporting lifelong learning.’

Emphasising the importance of the ethical use of AI tools in education, he argues for engagement with a wide range of GenAI tools to prepare students for navigating future changes in the educational and technological landscape.

In this short film, Jane Mills explores text-to-image Generative AI models, experimenting with platforms such as Stable Diffusion and Midjourney. She initially encountered what she described as “odd and distorted” images, and she traces how Generative AI imagery evolved over this period.

“In 2023 the images started to look better,” Jane explains, noting a significant breakthrough as these AI models began capturing intricate details. Drawing on her expertise as a fashion specialist, she points in particular to facial features, colour palettes, fabric textures and embellishments.

By May 2023, AI had become part of Fashion teaching. Jane champions the fusion of human creativity with machine efficiency, enabling designers to conceptualise runway shots, intricate patterns, and expressive collages.

Highlighting the importance of writing detailed prompts, Jane illustrates how specifying techniques, mediums, and styles can lead to striking results, ranging from watercolour cityscapes to photorealistic textures.

Generative AI serves as a powerful tool that provides fresh perspectives, preparing students for the ever-evolving fashion industry. This approach facilitates faster design processes, hones skills, and meets industry demands.

“It’s an assistive tool, a collaborator that empowers human imagination. As students gain valuable experience using this transformative technology, they’re not just designing the future of fashion; they’re shaping the way we think about its creation,” she emphasised.
