In this condensed talk from the Vulcan Sessions on 26/01/24, Senior Lecturer in Education David Meechan discusses the opportunities and considerations of using AI in education.

He introduces the concept of Generative Artificial Intelligence (GAI) as a diverse and constantly evolving field without a consistent definition among scholars. He shares personal examples of how GAI can help support students by scaffolding their learning and reducing the initial cognitive load through the creation of basic first drafts.

David expresses, ‘I’m a big believer in experiential learning, providing children, and now students, with experiences they can build on.’ Therefore, he advocates for the use of GenAI tools, which offer ‘varied, specific, and potentially creative results, revolutionising education and supporting lifelong learning.’

Emphasising the importance of the ethical use of AI tools in education, he argues for engagement with a wide range of GenAI tools to prepare students for navigating future changes in the education and technological landscape.

In this short film Jane Mills delves into the realm of text-to-image Generative AI models, experimenting with platforms such as Stable Diffusion and Midjourney. Initially encountering what she described as “odd and distorted” images, she highlights the evolving landscape of Generative AI images during this period.

“In 2023 the images started to look better,” Jane explains, noting a significant breakthrough as these AI models began capturing intricate details, particularly in facial features, colour palettes, fabric textures and embellishments, an observation that draws on her expertise as a fashion specialist.

By May 2023, AI integration became a reality in the discipline of Fashion teaching. Jane champions the fusion of human creativity with machine efficiency, enabling designers to conceptualise runway shots, intricate patterns, and expressive collages.

Highlighting the importance of designing detailed prompts, Jane illustrates how specifying techniques, mediums, and styles could lead to incredible results, ranging from watercolour cityscapes to photorealistic textures.

Generative AI serves as a powerful tool that provides fresh perspectives, preparing students for the ever-evolving fashion industry. This approach facilitates faster design processes, hones skills, and meets industry demands.

“It’s an assistive tool, a collaborator that empowers human imagination. As students gain valuable experience using this transformative technology, they’re not just designing the future of fashion; they’re shaping the way we think about its creation,” she emphasised.

In this short film, Theatre Director Matt Bond delves into the intricacies of his pioneering theatre experiment, “PlayAI,” a collaborative venture with the AI tool ChatGPT.

Building on the success of his groundbreaking work at Riverside Studios in London in April 2023, this project challenges the traditional boundaries of playwriting by immersing itself in the realms of exploration and experimentation with Artificial Intelligence.

Over a transformative four-week period, Bond collaboratively engaged with UON BA Acting students to craft a new play that delves into profound themes. These themes encompass the nuanced emotions surrounding redundancy and belonging in the age of Artificial Intelligence, the complexities of forging relationships with digital avatars, and the conflicting dynamics between idealism and capitalism within a futuristic digital ‘metaverse’ society.

The film provides valuable insights as four BA acting students share their perspectives on how they have embraced AI technology as a powerful catalyst for innovation and exploration.

Moreover, the impact of the project transcends the realm of performance. It becomes evident that the students, in their exploration of key AI concepts, have not only expanded their digital literacies but have also delved into the ethical boundaries of AI. Their involvement reflects a meticulous and comprehensive approach to working with AI, showcasing a profound commitment to understanding and navigating the intricate facets of this transformative technology.

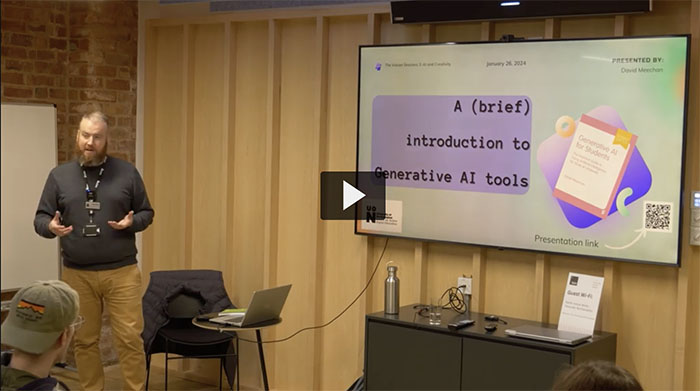

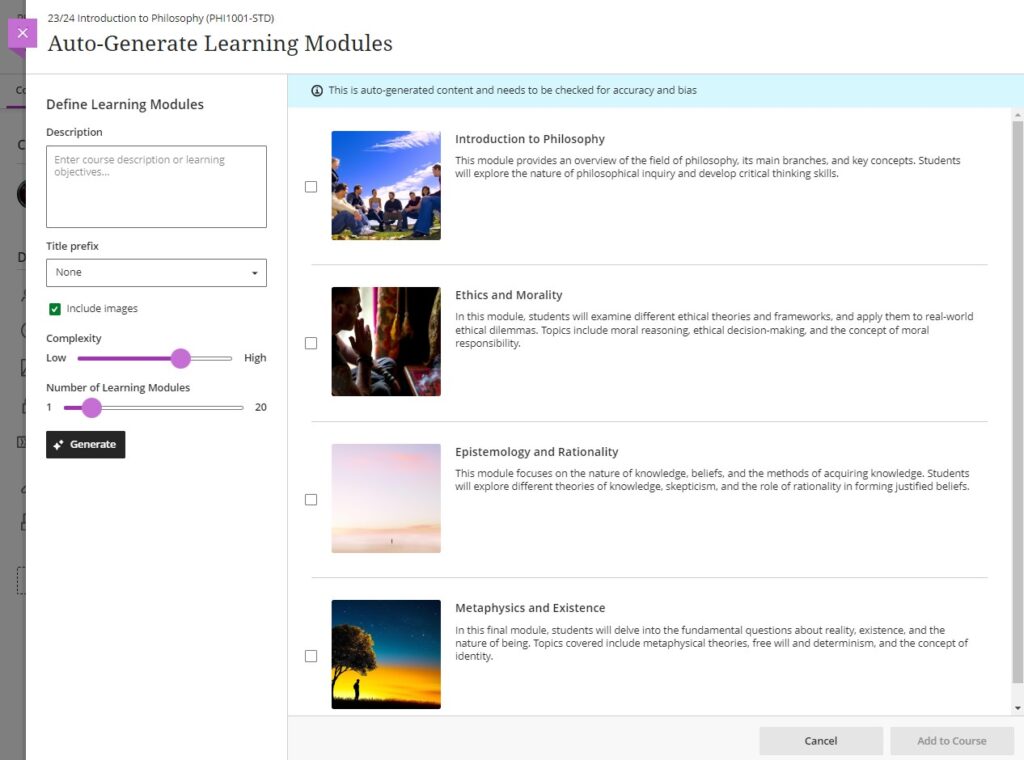

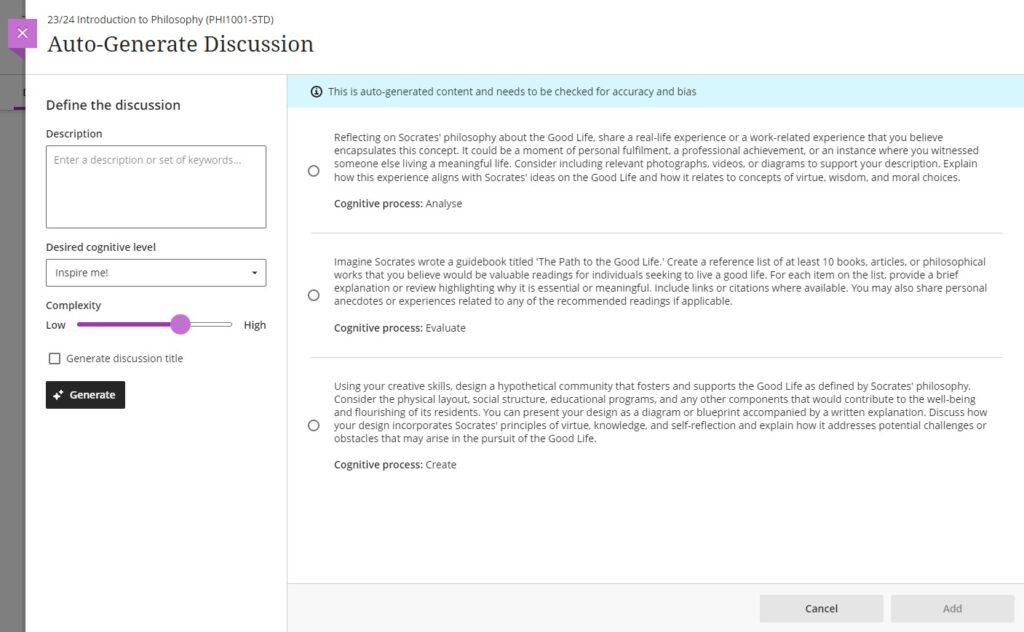

The new features in Blackboard’s January upgrade will be available between Friday 5th and Monday 8th January. The January upgrade includes the following new/improved features to Ultra courses:

- AI Design Assistant: Authentic assessment prompt generation

- AI Design Assistant: Generate rubric Improvements

- Total & weighted calculations in the Ultra gradebook

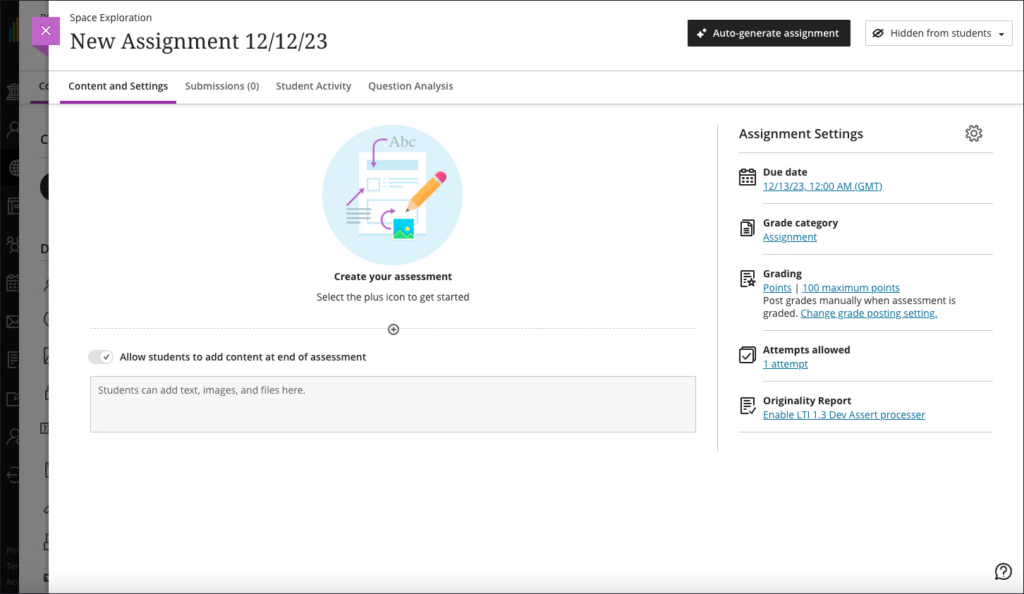

AI Design Assistant: Authentic assessment prompt generation

Currently, staff are able to auto-generate prompts for Ultra discussions and journals. The January upgrade will add the ability to auto-generate prompts for Ultra assignments too. Following the upgrade, when setting up an Ultra assignment, staff will see the ‘Auto-generate assignment’ option in the top right-hand corner of the screen.

Selecting ‘Auto-generate assignment’ will generate three prompts, which staff can refine by adding additional context in the description field, selecting the desired cognitive level and complexity, and then re-generating the prompts.

Once selected and added, the prompt can then be manually edited by staff prior to releasing the assignment to students.

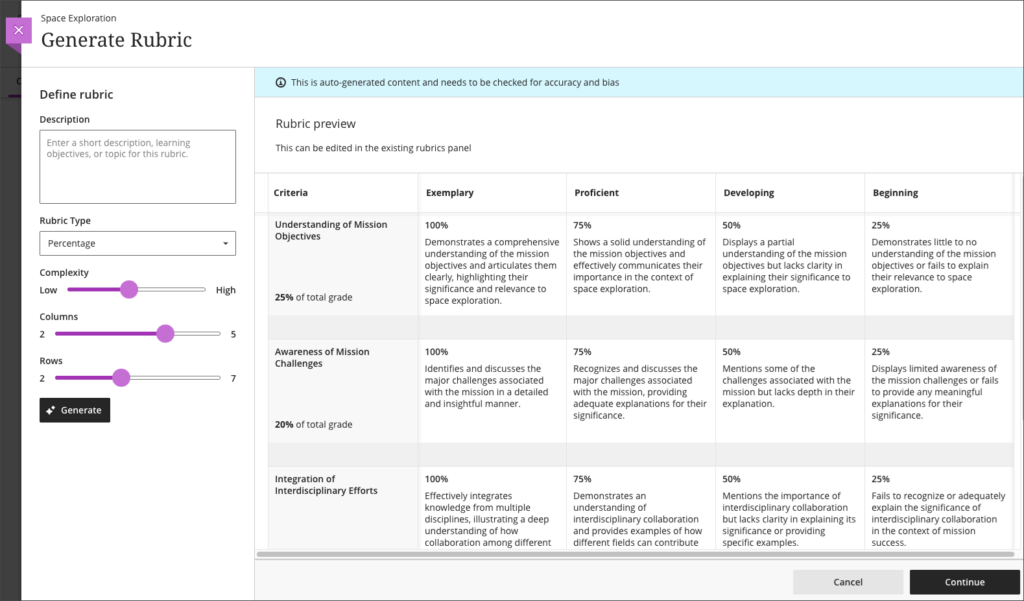

AI Design Assistant: Generate rubric Improvements

Following feedback from users, the January upgrade will improve auto-generated rubrics. The initial version of the AI Design Assistant’s auto-generated rubrics did not handle column and row labels properly, and this will be improved in the January upgrade. Also improved in the January upgrade will be the distribution of percentages/points across the criteria, which were inconsistently applied in the initial release.

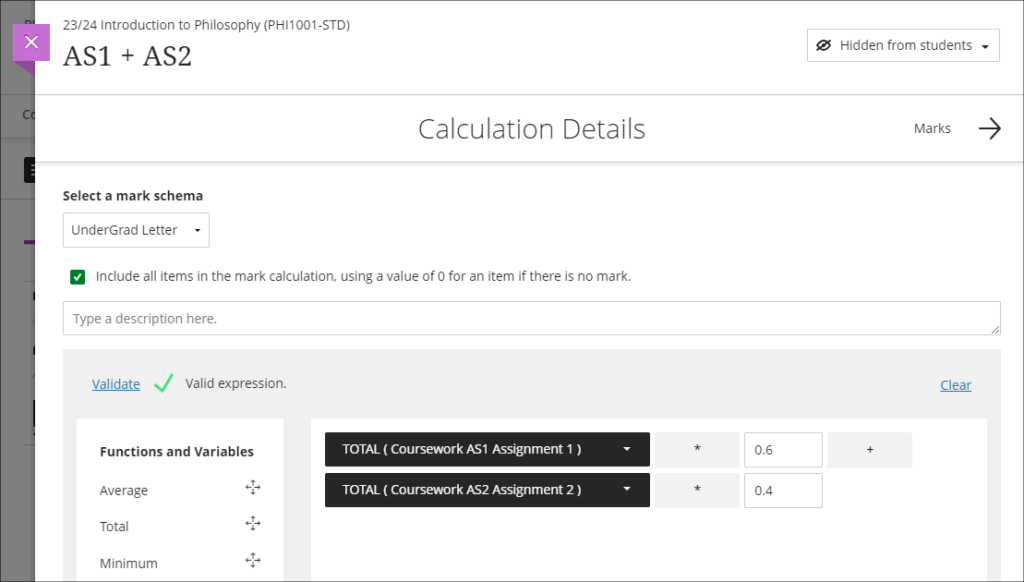

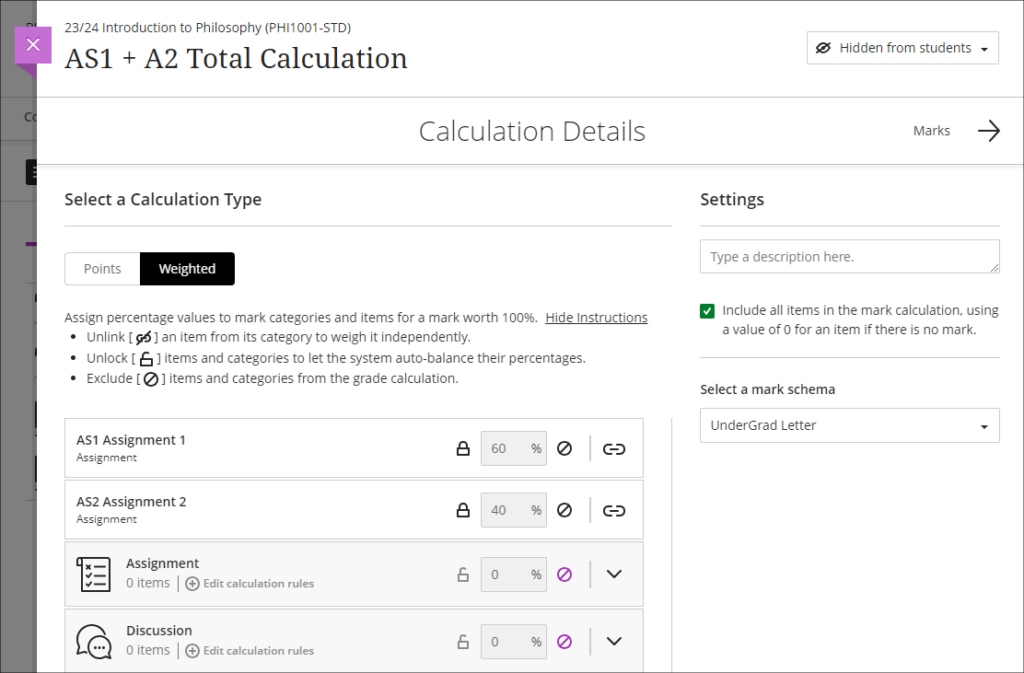

Total & weighted calculations in the Ultra gradebook

The Ultra gradebook currently allows for the creation of calculated columns using the ‘Add Calculation’ feature. However, the functionality of these calculated columns makes the creation of weighted calculations difficult, e.g., when generating the total score for two pieces of work where one is worth 60% of the mark and the other 40%. At present, this would have to be done in a calculated column by using the formula AS1 x 0.6 + AS2 x 0.4, like so:

However, weighting problems can be further compounded if the pieces of work are not all out of 100 points, which can often be the case when using computer-marked tests. Following feedback about this issue, the January upgrade will bring in an ‘Add Total Calculation’ option, which will allow staff to more easily generate an overall score for assessments with multiple sub-components. The new ‘Add Total Calculation’ column will simply require staff to choose the assessments which are to be used in the calculation, and specify how they are to be weighted. Using the same example as above, the calculation would look like so:
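The arithmetic behind a weighted total can also be sketched outside the gradebook. The following is a minimal Python illustration, not Blackboard's implementation: the function name `weighted_total` and the sample scores are hypothetical, and it assumes each piece of work is first converted to a percentage so that items marked out of different point totals can be combined.

```python
def weighted_total(scores, weights):
    """Combine (points_earned, points_possible) pairs into one weighted percentage.

    `scores` and `weights` are illustrative inputs; weights are fractions
    that are expected to sum to 1 (e.g. 0.6 and 0.4).
    """
    if len(scores) != len(weights):
        raise ValueError("each score needs exactly one weight")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")

    total = 0.0
    for (earned, possible), weight in zip(scores, weights):
        # Convert to a percentage first so different maxima are comparable.
        total += (earned / possible) * 100 * weight
    return total


# AS1 is marked out of 100 and worth 60%; AS2 is a test marked out of 40 and worth 40%.
overall = weighted_total([(70, 100), (30, 40)], [0.6, 0.4])
print(f"Overall mark: {overall:.1f}%")
```

Applying the formula AS1 x 0.6 + AS2 x 0.4 directly to the raw points in this example would undervalue the second piece of work, because 30 out of 40 is 75% rather than 30%; converting each score to a percentage first avoids that.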

More information

To find out more about all of the AI Design Assistant tools available in NILE, full guidance is available at: Learning Technology – AI Design Assistant

And as ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

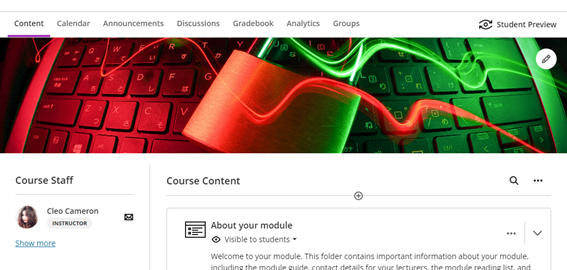

Dr Cleo Cameron (Senior Lecturer in Criminal Justice)

In this blog post, Dr Cleo Cameron reflects on the AI Design Assistant tool which was introduced into NILE Ultra courses in December 2023. More information about the tool is available here: Learning Technology Website – AI Design Assistant

Course structure tool

I used the guide prepared by the University’s Learning Technology Team (AI Design Assistant) to help me use this new AI functionality in Blackboard Ultra courses. The guide is easy to follow, with useful steps and images to help the user make sense of how to deploy the new tools. It also makes the important point that AI-generated content may not always be factual and will require assessment and evaluation by academic staff before the material is used.

The course structure tool on first use is impressive. I used the key word ‘cybercrime’ and chose four learning modules with ‘topic’ as the heading and selected a high level of complexity. The learning module topic headings and descriptions were indicative of exactly the material I would include for a short module.

I tried this again for fifteen learning modules (which would be the length of a semester course) and used the description, ‘Cybercrime what is it, how is it investigated, what are the challenges?’ This was less useful, and generated module topics that would not be included on the cybercrime module I deliver, such as ‘Cyber Insurance’ and a repeat of both ‘Cybercrime, laws and legislation’ and ‘Ethical and legal Implications of cybercrimes’. So, on a smaller scale, I found it useful to generate ideas, but on a larger semesterised modular scale, unless more description is entered, it does not seem to be quite as beneficial. The auto-generated learning module images for the topic areas are very good for the most part though.

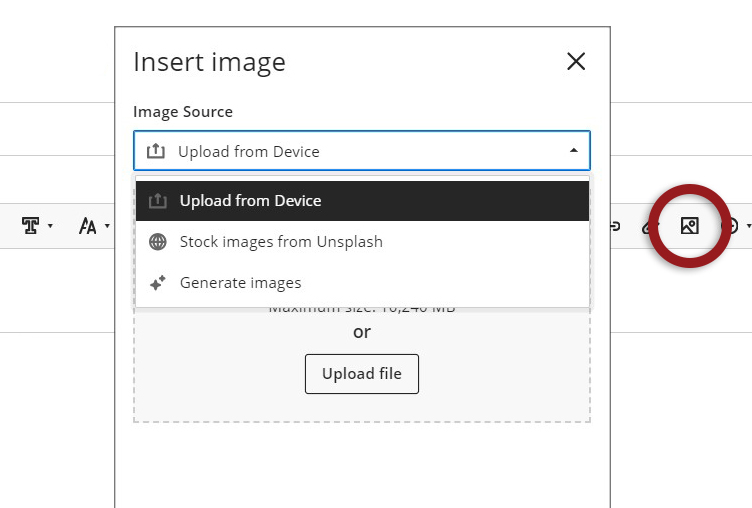

AI & Unsplash images

Once again, I used the very helpful LearnTech guide to use this functionality. To add a course banner, I selected Unsplash and used ‘cybercrime’ as a search term. The Unsplash images were excellent, but the scale was not always great for course banners. The first image I used did not convey the sense of a keyboard and padlock; however, the second image I tried was more successful, and it displayed well as the course tile and banner on the course. Again, the tool is easy to use, and has some great content.

I also tried the AI image generator, using ‘cybercrime’ as a search term/keyword. The first set of images generated were not great and did not seem to bear any relation to the keyword, so I tried generating a second time and this was better. I then used the specific terms ‘cyber fraud’ and ‘cyber-enabled fraud’, and the results were not very good at all – I tried generating three times. I tried the same with ‘romance fraud’, and again, the selection was not indicative of the keywords. The AI generated attempt at romance fraud was better, although the picture definition was not very good.

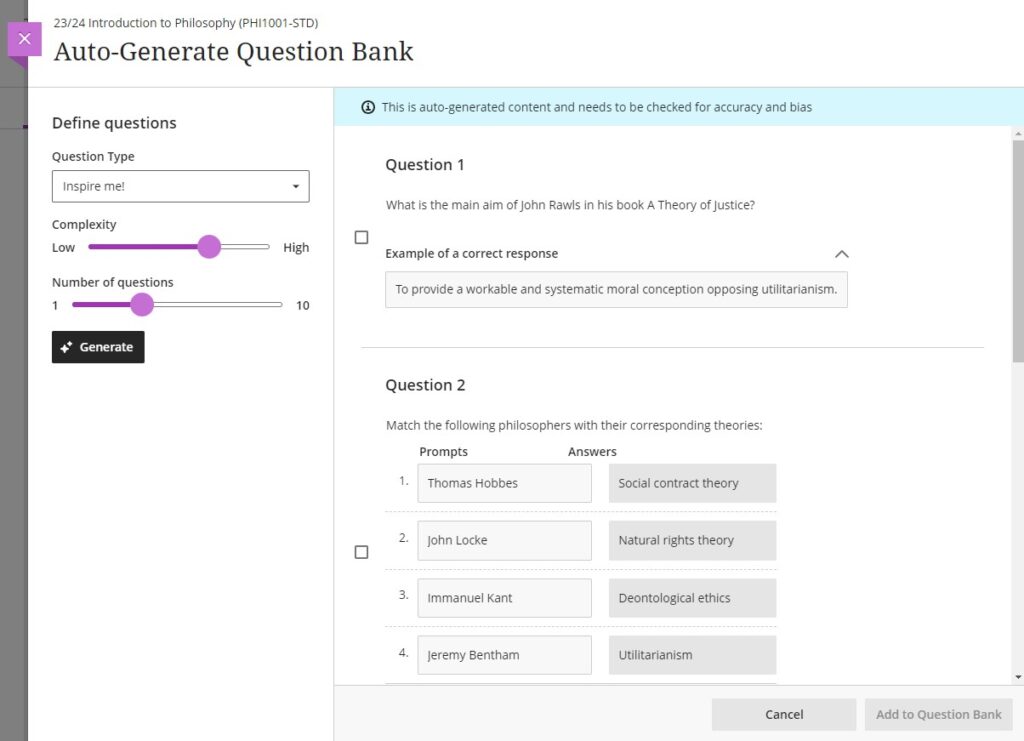

Test question generation

The LearnTech guide informed the process again, although having used the functionality on the other tools, this was similar. The test question generation tool was very good – I used the term ‘What is cybercrime?’ and selected ‘Inspire me’ for five questions, with the level of complexity set to around 75%. The test that was generated comprised three matching questions to describe/explain cybercrime terminologies, one multiple choice question and a short answer text-based question. Each question was factually correct, with no errors. Maybe simplifying some of the language would be helpful, and there were also a couple of matched questions/answers which haven’t been covered in the usual topic material I use. But this tool was extremely useful and could save a lot of time for staff users, providing an effective knowledge check for students.

Question bank generation from Ultra documents

By the time I tried out this tool I was familiar with the AI Design Assistant and I didn’t need to use the LearnTech guide for this one. I auto-generated four questions, set the complexity to 75%, and chose ‘Inspire me’ for question types. There were two fill-in-the-blanks, an essay question, and a true/false question which populated the question bank – all were useful and correct. What I didn’t know was how to use the questions that were saved to the Ultra question bank within a new or existing test, and this is where the LearnTech guide was invaluable with its ‘Reuse question’ in the test dropdown guidance. I tested this process and added two questions from the bank to an existing test.

Rubric generation

This tool was easily navigable, and I didn’t require the guide for this one, but the tool itself, on first use, is less effective than the others in that it took my description word for word without a different interpretation. I used the following description, with six rows and the rubric type set to ‘points range’:

‘Demonstrate knowledge and understanding of cybercrime, technologies used, methodologies employed by cybercriminals, investigations and investigative strategies, the social, ethical and legal implications of cybercrime and digital evidence collection. Harvard referencing and writing skills.’

I then changed the description to:

‘Demonstrate knowledge and understanding of cybercrime, technologies used, methodologies employed by cybercriminals, investigations and investigative strategies. Analyse and evaluate the social, ethical and legal implications of cybercrime and digital evidence collection. Demonstrate application of criminological theories. Demonstrate use of accurate UON Harvard referencing. Demonstrate effective written communication skills.’

At first generation, it only generated five of the six required rows. I tried again and it generated the same thing with only five rows, even though six was selected. It did not seem to want to separate out the knowledge and understanding of investigations and investigative strategies into its own row.

I definitely had to be much more specific with this tool than with the other AI tools I used. It saved time in that I did not have to manually fill in the points descriptions and point ranges myself, but I found that I did have to be very specific about what I wanted in the learning outcome rubric rows with the description.

Journal and discussion board prompts

This tool is very easy to deploy and actually generates some very useful results. I kept the description relatively simple and used some text from the course definition of hacking:

‘What is hacking? Hacking involves the break-in or intrusion into a networked system. Although hacking is a term that applies to cyber networks, networks have existed since the early 1900s. Individuals who attempted to break-in to the first electronic communication systems to make free long distance phonecalls were known as phreakers; those who were able to break-in to or compromise a network’s security were known as crackers. Today’s crackers are hackers who are able to “crack” into networked systems by cracking passwords (see Cross et al., 2008, p. 44).’

I used the ‘Inspire me’ cognitive level, set the complexity level to 75%, and checked the option to generate discussion titles. Three questions were generated that cover three cognitive processes:

The second question was the most relevant to this area of the existing course; the other two were slightly more advanced, and students would not have covered this material (nor have work-related experience in this area). I decided to lower the complexity level to see what would be generated on a second run:

Again, the second question – to analyse – seemed the most relevant to the more theory-based cybercrime course than the other two questions. I tried again and lowered the complexity level to 25%. This time two of the questions were more relevant to the students’ knowledge and ability for where this material appears in the course (i.e., in the first few weeks):

It was easy to add the selected question to the Ultra discussion.

I also tested the journal prompts and this was a more successful generation first time around. The text I used was:

‘“Government and industry hire white and gray hats who want to have their fun legally, which can defuse part of the threat”, Ruiu said, “…Many hackers are willing to help the government, particularly in fighting terrorism. Loveless said that after the 2001 terrorist attacks, several individuals approached him to offer their services in fighting Al Qaeda.” (in Arnone, 2005, 19(2)).’

I used the cognitive level ‘Inspire me’ once again and ‘generate journal title’ and this time placed complexity half-way. All three questions generated were relevant and usable.

My only issue with both the discussion and journal prompts is that I could not find a way to save all of the generated questions – it would only allow me to select one, so I could not save all the prompts for possible reuse at a later date. Other than this issue, the functionality, usability and relevance of the auto-generated discussion and journal prompts were very good.

On Friday 8th December, 2023, three new NILE tools will become available to staff: the AI Design Assistant, AI Image Generator, and the Unsplash Image Library.

AI Design Assistant

AI Design Assistant is a new feature of Ultra courses, and may be used by academic staff to generate ideas regarding:

- Course structure

- Images

- Tests/quizzes

- Discussion and journal prompts

- Rubrics

The AI Design Assistant only generates suggestions when it is asked to by members of academic staff, and cannot automatically add or change anything in NILE courses. Academic staff are always in control of the AI Design Assistant, and can quickly and easily reject any AI generated ideas before they are added to a NILE course. Anything generated by the AI Design Assistant can only be added to a NILE course once it has been approved by academic staff teaching on the module, and all AI generated suggestions can be edited by staff before being made available to students.

AI Image Generator & Unsplash Image Library

Wherever you can currently add an image in an Ultra course, following the upgrade on the 8th of December, as well as being able to upload images from your computer, you will also be able to search for and add images from the Unsplash image library. And, in many places in Ultra courses, you will be able to add AI-generated images too.

First Thoughts on AI Design Assistant

Find out more about what UON staff think about AI Design Assistant in our blog post from Dr Cleo Cameron (Senior Lecturer in Criminal Justice): First Thoughts on AI Design Assistant

Other items included in the December upgrade

The December upgrade will see the ‘Add image’ button in a number of new places in Ultra courses for staff, including announcements (upload from device or Unsplash), and Ultra tests and assignments (upload from device, Unsplash, and AI-generated images). However, please note that images embedded in announcements will not be included in the emailed copy of the announcement; they will only be visible to students when viewing the announcement in the Ultra course.

Ultra rubrics will be enhanced in the December upgrade. Currently these are limited to a maximum of 15 rows and columns, but following the upgrade there will be no limit on the number of rows and columns when using an Ultra rubric.

More information

To find out more about these new tools, full guidance is already available at: Learning Technology – AI Design Assistant

You can also find out more by coming along to the LearnTech Jingle Mingle on Tuesday 12th December, 2023, between 12:30 and 13:45 in T-Pod C (2nd Floor, Learning Hub).

As ever, please get in touch with your learning technologist if you would like any more information about these new NILE features: Who is my learning technologist?

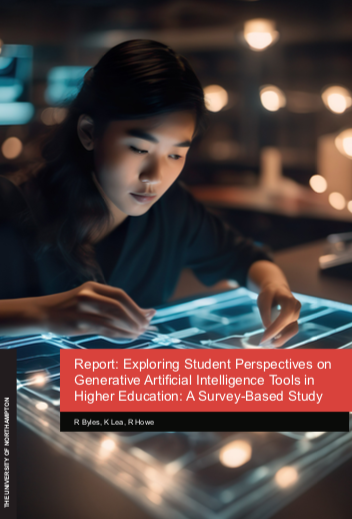

Learning Technologists Richard Byles and Kelly Lea, and Head of Learning Technology Rob Howe have published the outcomes of their research into student perspectives of Artificial Intelligence on the LTE (Learning Teaching Excellence) platform.

The insights presented in the report are derived from their student survey launched in May 2023, focusing on a range of topics including reasons and barriers for adopting AI tools, ethical considerations and thoughts on staff use to create new content.

The report provides a clear and concise presentation of their research results, discoveries, and conclusions, with input from Kate Coulson, Head of Learning and Teaching Enhancement, and Jane Mills, Senior Lecturer in Fashion. The central theme here revolves around the crucial dialogue surrounding the inclusion of student opinions in shaping AI guidance within the educational landscape.

Currently, Richard and Kelly are on the lookout for volunteers who can participate in video interviews on uses of AI in the classroom. These aim to shed light on how educators are introducing Generative AI Technologies to students, further enriching our understanding of AI’s role in education. Your voice could be an essential part of this ongoing research.

Report Link (PDF): Exploring Student Perspectives on Generative Artificial Intelligence Tools in Higher Education: A Survey-Based Study

R Byles, K Lea, R Howe

More Information

More information about the University’s position on AI is available from:

Robin Crockett (Academic Integrity Lead – University of Northampton) has run a small-scale study investigating two AI detectors with a range of AI-created assignments and has shared some of the initial results.

He used ChatGPT to generate 25 nominal 1000-word essays: five subjects, five different versions of each subject. For each subject, he instructed ChatGPT to vary the sentence length as follows: ‘default’ (i.e. no instruction was given regarding sentence length), ‘use long sentences’, ‘use short sentences’, ‘use complex sentences’, ‘use simple sentences’.

The table below shows the amount of each assignment which was detected as using AI in two different products: Turnitin and Copyleaks.

| Instruction | Essay 1 | Essay 2 | Essay 3 | Essay 4 | Essay 5 |
|---|---|---|---|---|---|
| Turnitin | | | | | |
| Default | 100% AI | 100% AI | 76% AI | 100% AI | 64% AI |
| Long | 0% AI | 26% AI | 59% AI | 67% AI | 51% AI |
| Short | 0% AI | X | 31% AI | 82% AI | 27% AI |
| Complex | 33% AI | 15% AI | 0% AI | 63% AI | 0% AI |
| Simple | 100% AI | 0% AI | 100% AI | 100% AI | 71% AI |
| Copyleaks | | | | | |
| Default | 100% AI at p=80.6% | 100% AI at p=83.5% | 100% AI at p=88.5% | 100% AI at p=81.3% | 100% AI at p=85.4% |
| Long | ~80% AI at p=65-75% | 100% AI at p=81.5% | ~95% AI at p=75-85% | 100% AI at p=79.1% | 100% AI at p=80.6% |
| Short | ~70% AI at p=66-72% | 100% AI at p=76.9% | 100% AI at p=87.3% | ~85% AI at p=77-79% | 100% AI at p=78.4% |
| Complex | 100% AI at p=72.9% | 100% AI at p=81.0% | ~90% AI at p=62-73% | 100% AI at p=77.7% | 0% AI |
| Simple | 100% AI at p=83.6% | ~90% AI at p=73-81% | 100% AI at p=95.2% | ~90% AI at p=76-82% | 100% AI at p=84.9% |

X = “Unavailable as submission failed to meet requirements”.

0% -> complete false negative.

Robin noted:

Turnitin highlights/returns a percentage of ‘qualifying’ text that it sees as AI-generated, but no probability of AI-ness.

Copyleaks highlights sections of text it sees as AI-generated, each section tagged with the probability of AI-ness, but doesn’t state the overall proportion of the text it sees as AI-generated (hence his estimates).

Additional reading: Jisc blog on AI detection

In this short video Learning Technologist Belinda Green speaks to Mark Thursby, a Senior Lecturer in Music, and two of his music students about their experimental sound installation “Of Sound Mind” presented at the University of Northampton on May 16th and 17th, 2023.

Mark explains how anything conductive can be converted into MIDI notes and music, allowing humans to interact with the installations by touching conductive elements such as leaves. The result was an AI insect hybrid garden that generated unique sounds and created an immersive experience. The installations also had the ability to daisy chain people, where participants could hold hands and the last person could touch a leaf to activate the system.

Belinda speaks to music students Ben Wyatt and Kai Downer, who discuss the effectiveness of background sounds, such as a café or a public space, in creating an immersive surround sound atmosphere, and how conductivity and human interaction can be used to create immersive sounds that are ‘in key’ using new technologies.

The interview highlights the captivating and interactive nature of the “Of Sound Mind” installations. The fusion of music, AI technology, and interaction created an immersive experience for participants. The enthusiasm expressed by the interviewees showcased the exciting possibilities that lie ahead, particularly for students and the advancement of music technology.

Case study produced by Richard Byles and Belinda Green.

The year 2023 is proving to be a fascinating one for generative AI tools, with ChatGPT, the latest chatbot from OpenAI, crossing the 100 million user line in January 2023, making it the fastest-growing consumer application to date (source: DemandSage). ChatGPT is a large language model that provides detailed answers to a wide range of questions. Ask it to summarise a report, or to structure a presentation or activities for your session, and you may well be pleased with the results. ChatGPT’s ease of use, speed of response, and detailed answers have seen it quickly dominate the AI generator market and gain both widespread acclaim and criticism.

While early media attention focused on the negative, playing on sci-fi tropes and the out-of-control desires of AI tools, scientists such as Stephen Wolfram have been exploring and explaining the capabilities and intricacies of ChatGPT, expertly raising awareness of its underlying Large Language Model architecture and its limitations as a tool.

In his recent talk on the Turnitin Webinar ‘AI: friend or foe?’, Robin Crockett, Academic Integrity Lead here at UON, discussed how a better understanding of the ability of ChatGPT to create content can be used to deter cheating with AI tools. However, concerns have also been raised about students using the tool to cheat, with claims that the minimal effort required to enter an essay question into ChatGPT may produce an essay of an adequate standard to, at least, pass an assessment (source: The Guardian).

Link to YouTube, Turnitin Session: AI friend or foe? 28/02/23 (CC Turnitin)

The next webinar in the Turnitin series entitled ‘Combating Contract Cheating on Campus’ with expert speakers Robin Crockett, Irene Glendinning and Sandie Dann, can be found here.

One thing the media stories seem to agree upon is that generative AI tools have the potential to change the way we do things and challenge the status quo, with some traditional skill sets at risk of being replaced by AI, and new opportunities for those who embrace these technologies.

In terms of how AI might be utilised by academic staff, Lee Machado, Professor of Molecular Medicine, describes his use of AI tools in cancer classification and how AI tools might be used to help answer questions in his field. Lee also discusses how he feels AI tools such as ChatGPT could improve student experiences by providing personalised feedback on essays and by simplifying complex information.

In the following interview, Jane Mills, Senior Lecturer in Fashion and Textiles, discusses how the fashion industry is embracing AI, emphasising that rather than AI replacing creativity, it can be used to enhance creative work. Students Amalia Samoila and Donald Mubangizi reflect on the collaborative nature of working with AI, using examples of their current work.

In his interview, Rob Howe, Head of Learning Technology, discusses the evolution of AI technology and its impact on the academic world. He explains that AI has come a long way since the original definitions by Minsky and Turing in the 1950s and that improvements in processing speed and access to data have made it a revolution in technology. Rob describes how AI technology has already been integrated into tools and academic systems in universities and how the rise of AI technology has led to a change in the way assignments are being considered, as students may now be using AI systems to assist in their studies. Although there is discussion around institutions wishing to ban the use of AI in academic work, Rob emphasises the importance of learning to live with such tools and using them in a way that supports educators and students. AI systems have the potential to be a valuable resource for tutors to generate learning outcomes and offer new ideas which can then be critically evaluated and modified.

Exploring AI through multiple platforms and apps is a great way for users to get started. However, it’s important to note that not all AI tools are free. While many offer free tokens or limited availability for new users, some require payment. Our team member, inspired by the use of AI-generated images by an art student at UON, tried the iOS app, Dawn AI, which offered a 3-day trial. They enjoyed generating 48 new versions of themselves and even created versions of themselves as a warrior and video game character.

However, it’s important to consider whether using AI in this way is simply a gimmick or if it has a more purposeful use. It’s easy to dismiss AI-generated images as mere novelties, but the potential applications of this technology are vast and varied. AI-generated images can be used in advertising, social media marketing, and even in the film industry. As AI continues to develop and evolve, we’re likely to see even more innovative and exciting uses for this technology. The possibilities are endless.

The full extent of how AI tools will fit into daily academic life is yet to be determined. While some believe that AI has the potential to revolutionise the way in which we teach and learn, others remain sceptical about its ethical implications and its potential to negatively impact student engagement.

One of the main concerns is whether AI tools will prove to be a positive tool to enhance creativity and support students or whether they will provide a shortcut to assessments that undermine the learning process. It is clear that there are significant implications for how educators use AI tools in the classroom.

To explore these issues and more, Rob Howe, Head of Learning Technology at the University of Northampton (supported by University staff, external colleagues and the National Centre for A.I.), will be running a series of debates and talks on campus and online. These discussions will aim to assess the potential of AI tools and examine their ethical implications. Participants will discuss the challenges and opportunities presented by AI, and debate the best ways to incorporate these tools into the classroom.

The first of these debates, titled ‘The computers are taking over…?’, is on March 15th. The full details can be found here: https://blogs.northampton.ac.uk/learntech/2023/01/30/the-computers-are-taking-over-debate/

Link to future Webinar from the series: https://www.turnitin.com/resources/webinars/turnitin-session-series-contract-cheating-2023

Authors: Richard Byles and Kelly Lea.
