The new features in this month’s Blackboard upgrade will be available from Friday 6th June. This month’s upgrade includes the following new/improved features to Ultra courses:

- Add dividers to Ultra documents

- Enhanced appearance of knowledge checks results

- Tests: new jumbled sentence question type

- Discussion activity indicator for staff

- Grades transfer: option to select more items per page

- End of life notification for LearnSci LTI 1.1

- Learning technology / NILE community group

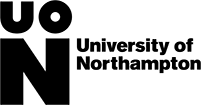

Add dividers to Ultra documents

Following June’s upgrade, staff will be able to use dividers in Ultra documents in order to enhance the look and the readability of these documents. In the following screenshot the divider tool is highlighted and a divider is in place between the text/image section and the knowledge check quiz.

More information about using Ultra documents is available from: Blackboard Help – Create Documents

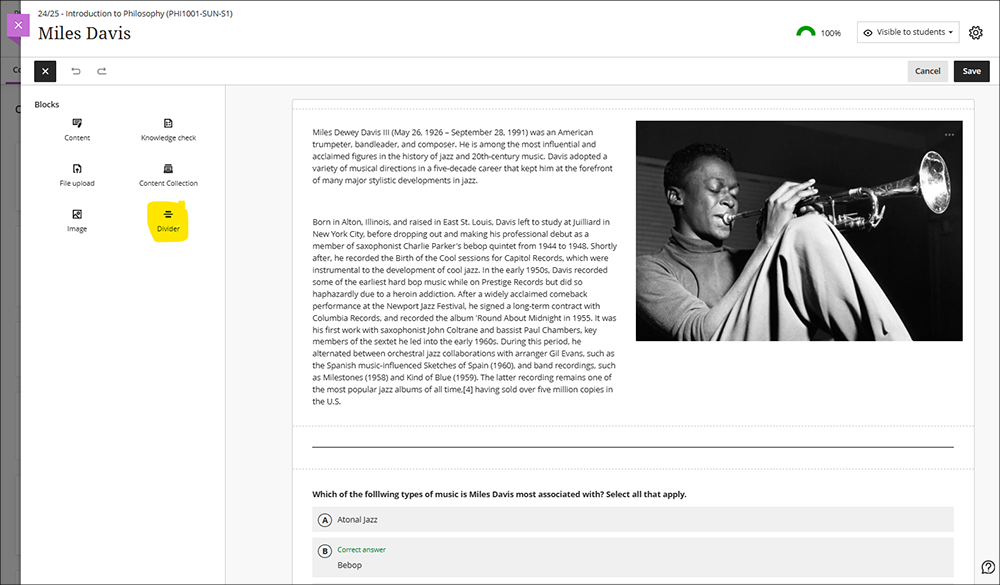

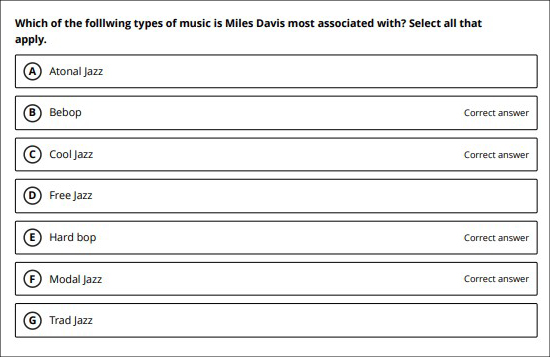

Enhanced appearance of knowledge checks results

After June’s upgrade, staff using knowledge check quizzes in Ultra documents will notice an improved display of the results of the quiz. In the screenshot below the top image shows the pre-upgrade view and the bottom image shows the post-upgrade view of how the results of knowledge checks are presented to staff in their Ultra documents.

More information about creating knowledge check quizzes in Ultra documents is available from: Blackboard Help – Knowledge Checks in Blackboard Documents

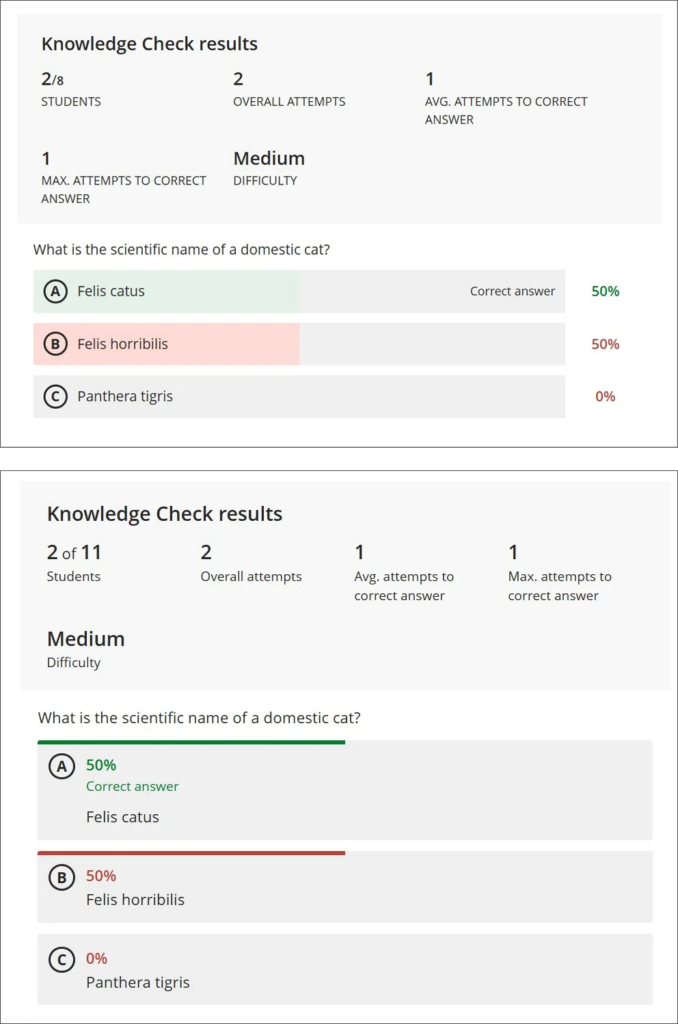

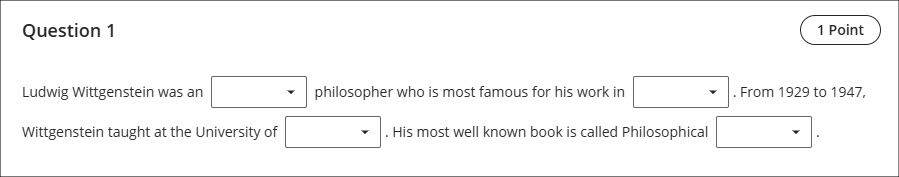

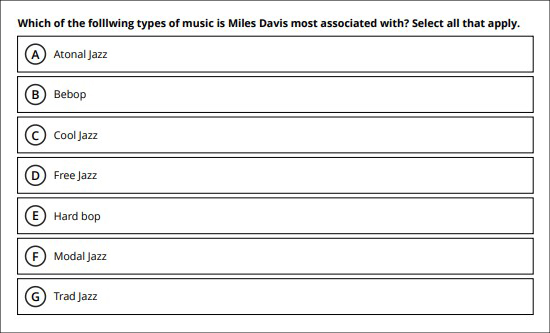

Tests: new jumbled sentence question type

June’s upgrade will introduce a new question type to tests: the jumbled sentence question. When creating a jumbled sentence question, staff provide a block of text, enclosing the variables in square brackets and adding distractors as necessary (see screenshot below).

Please note the need to ensure that each variable is unique and has only one possible correct answer. For example, the following text will not work: “Wittgenstein is most famous for his work in the philosophies of [language], [mind], and [logic].” This is because a student would have no way of knowing in which order to put the variables ‘language’, ‘mind’, and ‘logic’ in their response, and would, for example, unfairly score 0% for the response, “Wittgenstein is most famous for his work in the philosophies of logic, language, and mind”.

When completing a jumbled sentence question, students will be able to choose any of the correct answers and distractors from the drop-down lists.
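The Wittgenstein example can be illustrated with a minimal Python sketch. It assumes that each blank is marked by position against the bracketed variable in that position; this is an assumption made for illustration, not a description of how Blackboard marks the question internally. The function names are hypothetical.

```python
import re


def extract_variables(text):
    """Return the bracketed variables in the order they appear in the text."""
    return re.findall(r"\[([^\]]+)\]", text)


def duplicate_variables(text):
    """Return any variable that appears more than once (variables should be unique)."""
    seen, duplicates = set(), []
    for variable in extract_variables(text):
        if variable in seen and variable not in duplicates:
            duplicates.append(variable)
        seen.add(variable)
    return duplicates


def positional_score(text, response):
    """Percentage of blanks whose answer matches the variable in that position.

    Assumption: marking is per blank and strictly positional.
    """
    expected = extract_variables(text)
    correct = sum(1 for want, got in zip(expected, response) if want == got)
    return 100 * correct / len(expected)


question = (
    "Wittgenstein is most famous for his work in the philosophies of "
    "[language], [mind], and [logic]."
)

# A factually reasonable answer in a different order matches no position.
print(positional_score(question, ["logic", "language", "mind"]))
# Only the exact authored order scores full marks.
print(positional_score(question, ["language", "mind", "logic"]))
```

Under that assumption the reordered response matches none of the three positions, which is why questions with interchangeable variables are best avoided.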

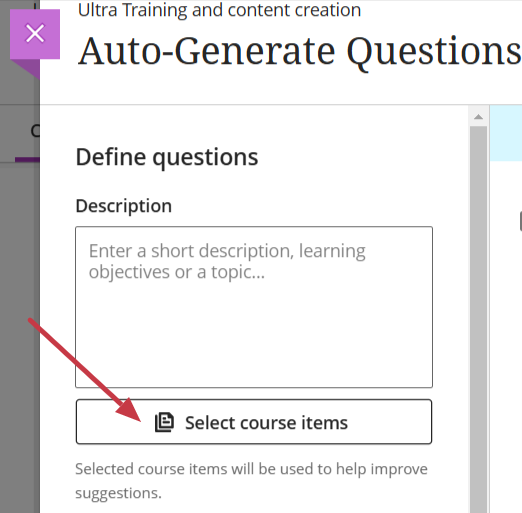

As well as being able to create jumbled sentence questions themselves, staff can also auto-generate jumbled sentence questions (and many other test question types) using the AI Design Assistant.

More information about creating tests is available from: Blackboard Help – Create Tests

More information about auto-generating test questions is available from: AI Question Generation in Blackboard

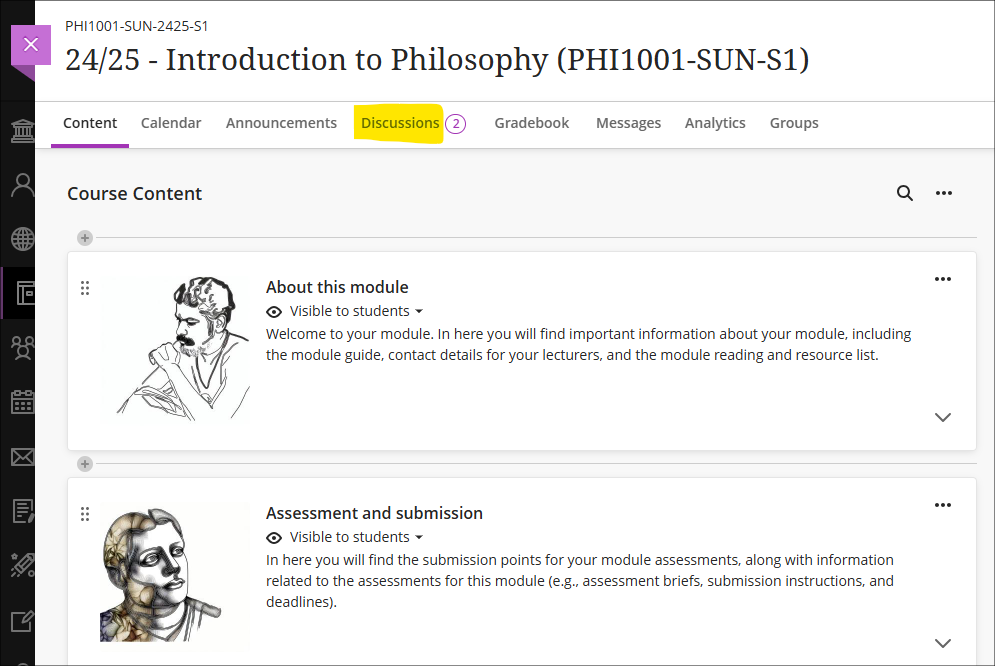

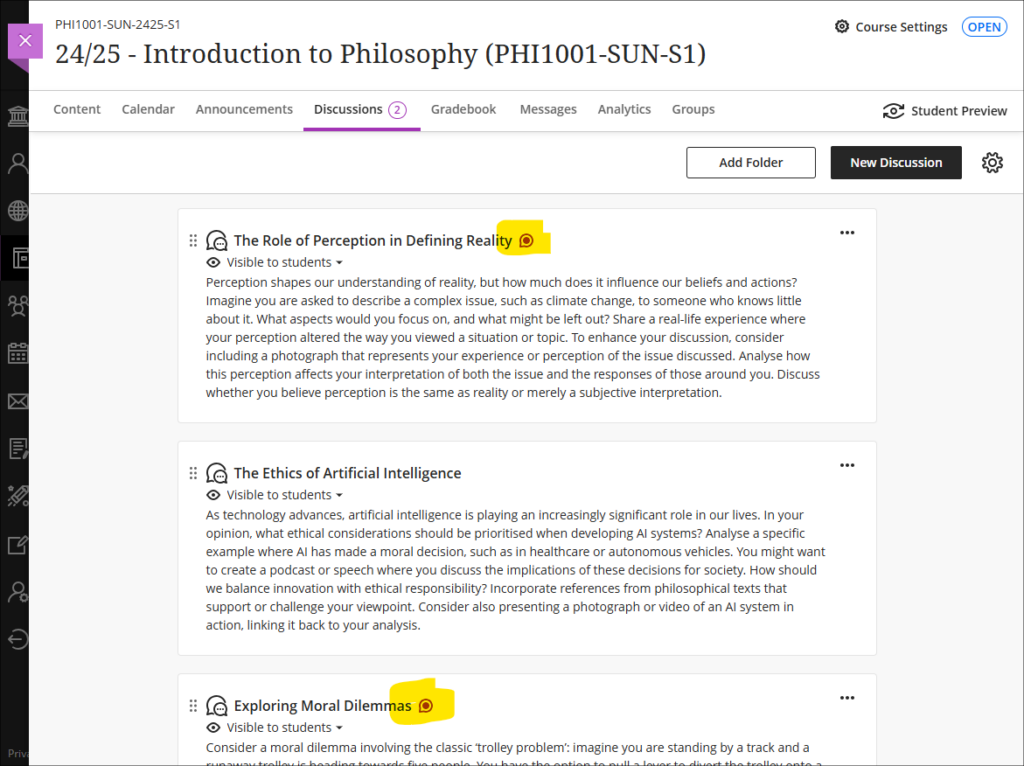

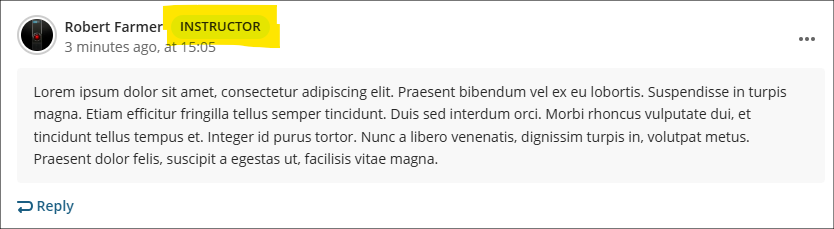

Discussion activity indicator for staff

Following the June upgrade, staff who use discussions in their courses will see an indicator in the course tools menu showing whether they have any discussions with unread posts. The indicator will display the number of discussions with new/unread posts, not the total number of new/unread posts in the discussions.
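The difference between the two counts can be shown with a short Python sketch. The discussion titles and unread figures are invented for illustration, and the function name is hypothetical.

```python
def discussion_indicator(unread_by_discussion):
    """Count the discussions that have at least one unread post."""
    return sum(1 for unread in unread_by_discussion.values() if unread > 0)


# Hypothetical unread post counts per discussion in one course.
unread = {
    "Week 1 introductions": 4,
    "Seminar questions": 0,
    "Assessment Q&A": 7,
}

indicator = discussion_indicator(unread)  # discussions with unread posts
total_unread = sum(unread.values())       # total unread posts (not what is shown)
print(indicator, total_unread)
```

Here the indicator would show 2 (two discussions have unread posts), even though there are 11 unread posts in total.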

Once in the discussions tab the discussions which have unread posts will be flagged with the unread posts indicator.

Staff (and students) can also keep track of discussions by accessing the discussion and using the ‘Follow’ option. This will send notifications via email of posts and replies to a discussion.

More information about discussions is available from: Blackboard Help – Create Discussions

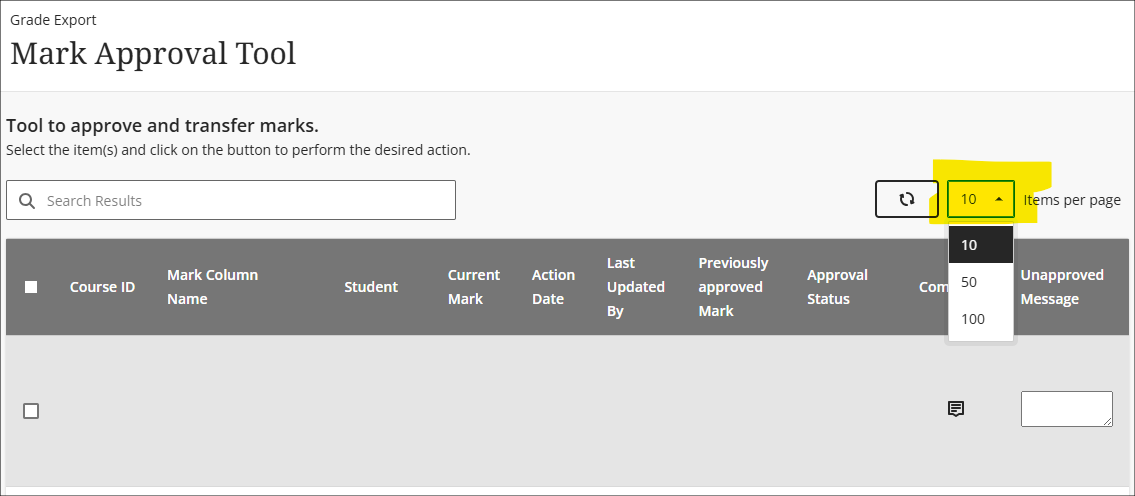

Grades transfer: option to select more items per page

When sending grades to SITS, staff can now choose to display 10, 50, or 100 items per page in the approval workflow. This option is available now.

More information about the grades transfer process is available at: Learning Technology Team – Transferring grades from NILE to SITS

End of life notification for LearnSci LTI 1.1

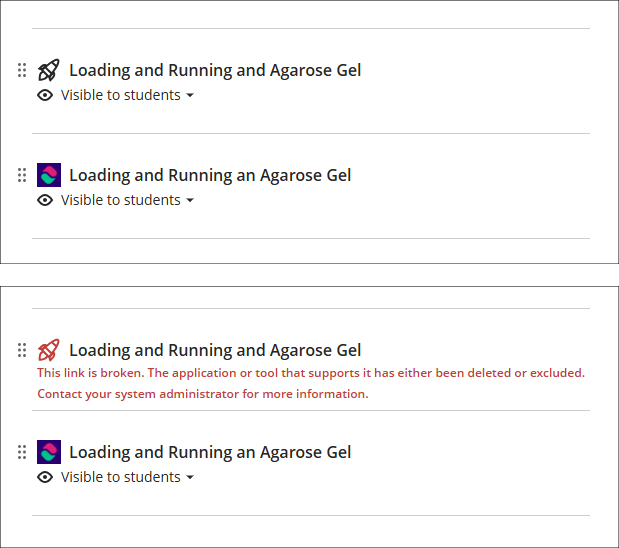

Following on from LearnSci’s implementation of the LTI 1.3 method of adding LearnSci resources to NILE Ultra courses, LearnSci have advised that resources added using the old LTI 1.1 method will stop working on 1 September 2025. LearnSci resources added to Ultra courses using the LTI 1.1 method will have been added via ‘+ Create > Teaching tools with LTI connection’, whereas resources added via the LTI 1.3 method will have been added using ‘+ Content Market > LearnSci’.

A LearnSci resource added using the LTI 1.1 will look like the first link in the screenshots below, with the rocket next to the title. A LearnSci resource added using the LTI 1.3 will look like the second link, with the LearnSci icon next to the title. Once the LTI 1.1 is switched off the old links will appear to staff with a ‘This link is broken’ message and will be automatically hidden from students. LTI 1.3 links will be unaffected.

All LearnSci resources added using the LTI 1.1 will be fully functional until the end of August 2025. Where staff are using LearnSci resources for the 25/26 academic year, they will need to ensure that, when new NILE courses are being set up, any old LTI 1.1 links that have been copied over are removed and replaced with new 1.3 links. If staff want LearnSci resources to be available to students in old NILE courses, any 1.1 links will need to be replaced with 1.3 links.

Learning technology / NILE community group

Staff who are interested in finding out more about learning technologies and NILE are invited to join the Learning Technology / NILE Community Group on the University’s Engage platform. The purpose of the community is to share information and good practice concerning the use of learning technologies at UON. When joining the community, if you are prompted to login please use your usual UON staff username and password. By joining the Learning Technology / NILE Community you will receive calendar invitations to our regular live community events:

Join the Learning Technology / NILE Community Group

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in this month’s Blackboard upgrade will be available from Friday 2nd May. This month’s upgrade includes the following new/improved features to Ultra courses:

- Qualitative ‘no points’ Blackboard rubric

- Updates to AI conversations – role-play and auto-generate options

- Blackboard tests – ‘view submission one time’ results setting

- Gradebook – option to create text-based columns

- Minor changes to discussions

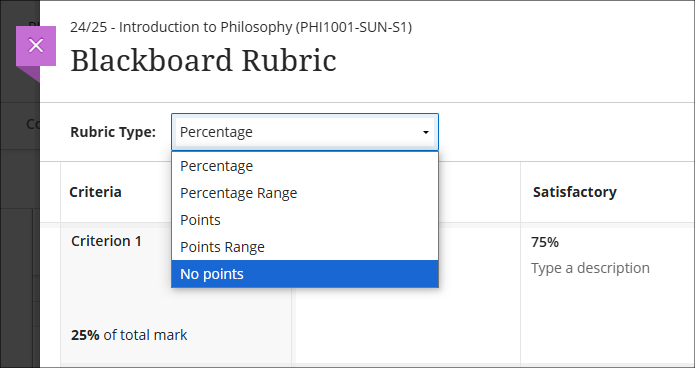

Qualitative ‘no points’ Blackboard rubric

May’s upgrade will include the option to create a ‘no points’ or qualitative rubric that can be used when marking Blackboard assignments, tests, journals, and discussions, or when marking items that have not been submitted electronically via the Blackboard ‘offline assessment’ option.

‘No points’ or qualitative rubrics allow staff to use a rubric for providing feedback to students without assigning specific points or percentage values to the rubric criteria. The rubric will thus not assign a grade – instead, staff can use the rubric purely for feedback purposes and can apply the grade manually and independently of the rubric.

You can find out more about creating and using Blackboard rubrics at: Blackboard Help – Create Rubrics

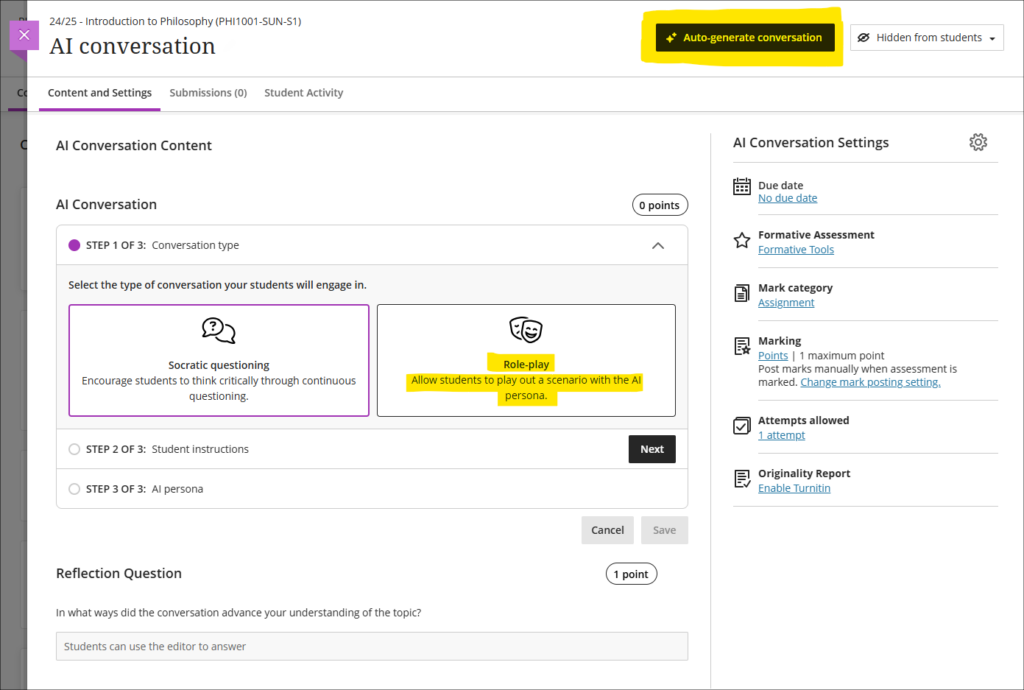

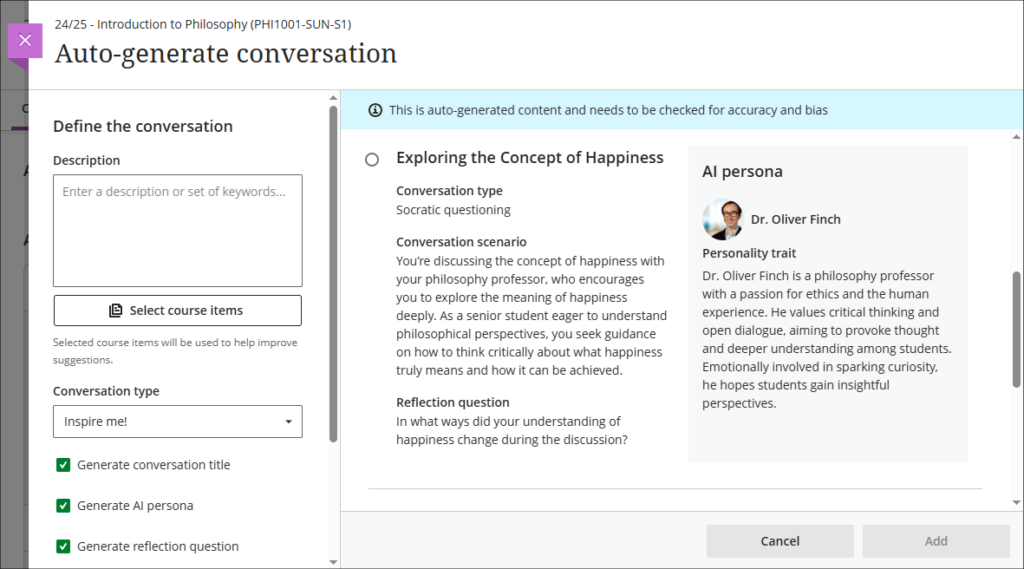

Updates to AI conversations – role-play and auto-generate options

The May upgrade will introduce two new features to the AI conversation tool. The first is the option to create AI-based role-play scenarios, meaning that staff will now be able to choose between two different types of AI conversation:

- Socratic questioning: Conversations that encourage students to think critically through continuous questioning.

- Role-play: Conversations that allow students to play out scenarios with the AI persona.

The second new feature in May’s upgrade is the option to auto-generate ideas for AI conversations. In order to provide ideas for conversations and speed up the process of creating personas and topics for an AI conversation, the AI Design Assistant will auto-generate three suggestions at once. Additionally, alongside the ideas for the conversation, staff can select whether or not to auto-generate the conversation title, the AI persona, and the reflection question. Further refinements to the auto-generation process can be made by adding information to the description field, selecting particular course items to define the scope of the auto-generation process, choosing the conversation type, and selecting the complexity level of the conversation.

More information about setting up and using the AI conversation tool is available from: Blackboard Help – AI Conversations

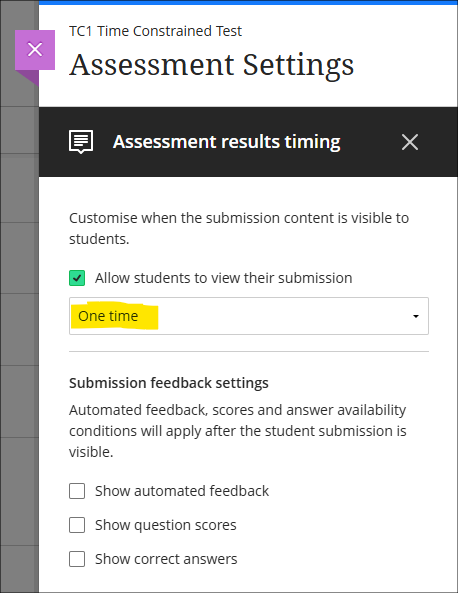

Blackboard tests – ‘view submission one time’ results setting

Following May’s upgrade staff will be able to make use of a ‘one time’ setting when choosing whether and how students can view their test submission, and, optionally, any automated feedback, individual question scores and which answers were correct (note that some of these settings only apply to computer-marked tests). Due to the wide variety of conditions under which tests take place, Blackboard tests offer a great deal of flexibility in their post-submission student visibility options, from allowing staff to withhold test submissions from students entirely and only releasing marks (thus replicating exam conditions when students hand in their papers, which they do not see again, and only receive a mark), to allowing students to view their test submissions, automated feedback, individual question scores and correct answers as often as they want.

Due to the complexity of setting up tests, staff are recommended to seek advice from their learning technologist when using Blackboard tests for the first time, especially for summative assessment.

More information about setting up and using Blackboard tests is available from:

- Learning Technology Team – Ultra Workflow 3: Blackboard Test

- Blackboard Help – Tests, Pools, and Surveys

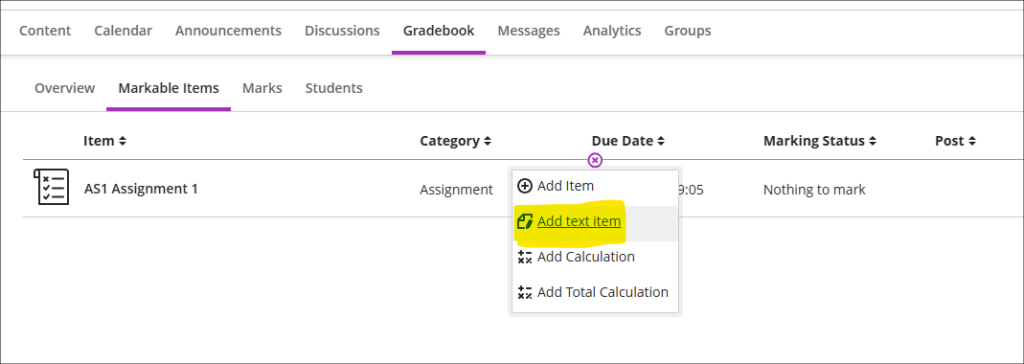

Gradebook – option to create text-based columns

Available in the gradebook after the May upgrade will be the option to create a text column. This feature allows staff to record up to 32 characters of text per student directly in a gradebook column, rather than a points or percentage value. Previously, recording text in the gradebook required a pre-defined mark schema to convert numerical values to text. However, please note that the new text columns cannot be used when sending grades from NILE to SITS as the grades transfer columns only work when mapping columns with a numerical value. Text in gradebook columns is exported when using the download marks option to download gradebook items as a spreadsheet in .xls or .csv format.
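As a rough illustration of the 32-character limit, the following Python sketch checks whether a piece of text would fit in a text column. The function and constant names are hypothetical, and counting characters with `len` is an assumption about how the limit is measured.

```python
MAX_TEXT_COLUMN_LENGTH = 32  # limit stated for gradebook text columns


def fits_text_column(value):
    """Return True if the text is within the 32-character limit."""
    return len(value) <= MAX_TEXT_COLUMN_LENGTH


print(fits_text_column("Resubmission required"))
print(fits_text_column("Resubmission required following academic integrity review"))
```

Short status notes fit comfortably, whereas longer comments are better placed in the feedback for the assessment itself.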

More information about the gradebook in Ultra courses can be found at: Blackboard Help – Ultra Gradebook

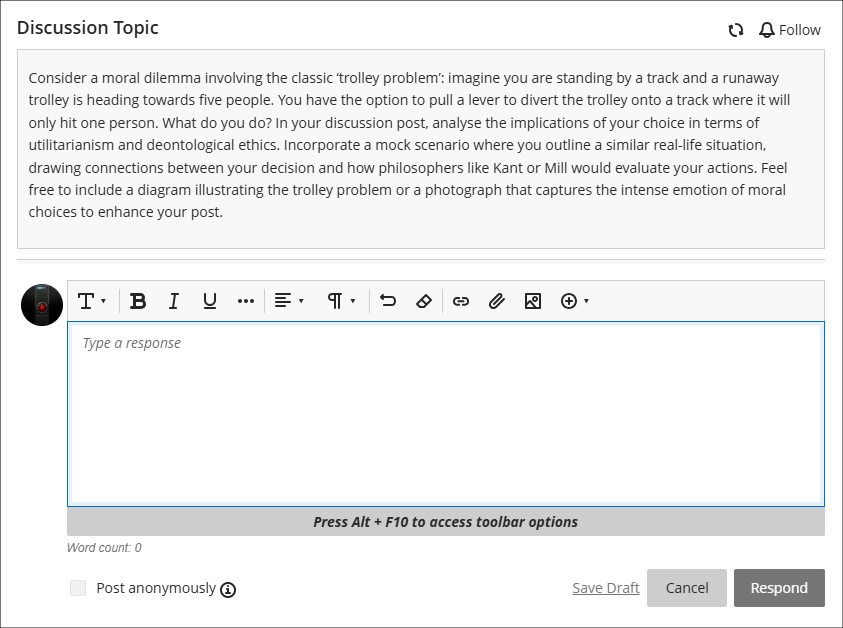

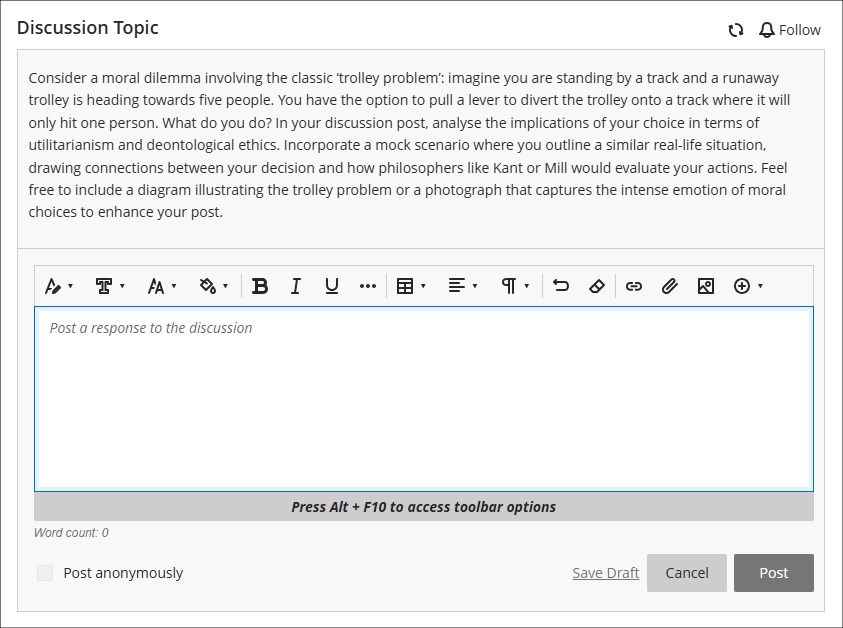

Minor changes to discussions

Following May’s upgrade, the following changes will be made to discussions:

- Updated wording: Discussion contributions are currently called ‘responses’ and ‘replies’. This will change to ‘posts’ and ‘replies’. ‘Posts’ (as was the case with ‘responses’) refers to a user’s direct contribution to the main discussion, whereas ‘replies’ refers to a reply that someone makes to an individual user’s post.

- Improved default text: Currently, the default text within the discussion response field is: ‘Type a response.’ The text within the input field will change to: ‘Post a response to the discussion.’ The default text within the reply field will remain as: ‘Type a reply’.

- Avatar update: Currently the user’s avatar is placed beside the text input box. This will be removed while the user is typing their post in order to increase the area for adding content to the discussion. The avatar will be visible once the post has been posted to the discussion (except where anonymous posts are enabled and the user has selected this option).

Additionally, when academic staff post responses to discussions, their contributions will be clearly flagged with ‘Instructor’.

For more information about setting up and using discussions, see: Blackboard Help – Discussions

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in this month’s Blackboard upgrade will be available from Friday 4th April. This month’s upgrade includes the following new/improved features to Ultra courses:

- Grades Transfer – New ‘select all’ option (available now)

- Option to print Ultra documents

- Blackboard assignments indicate if students have reviewed feedback

- Maths support for Microsoft Word documents available for alternative formats

- NILE Ultra Course Awards 2025 – Nominations open until 30th April

Grades Transfer: New ‘select all’ option (available now)

In order to avoid slow page loading times for courses containing high numbers of student enrolments, the ‘Send Grades to SITS’ tool now only lists 10 students per page on the final screen. However, it is still possible to select all students from all pages when sending grades from NILE to SITS.

Details about how to select all grades, not just the first ten, when sending grades from NILE to SITS are available at: How do I select all grades when using the ‘Send Grades to SITS’ tool?

An updated video demonstrating the process of sending grades from NILE to SITS is available at: Sending your mapped grades from NILE to SITS

Full details of the grades transfer process, including updated guidance, videos and screenshots, are available at: Transferring grades from NILE to SITS

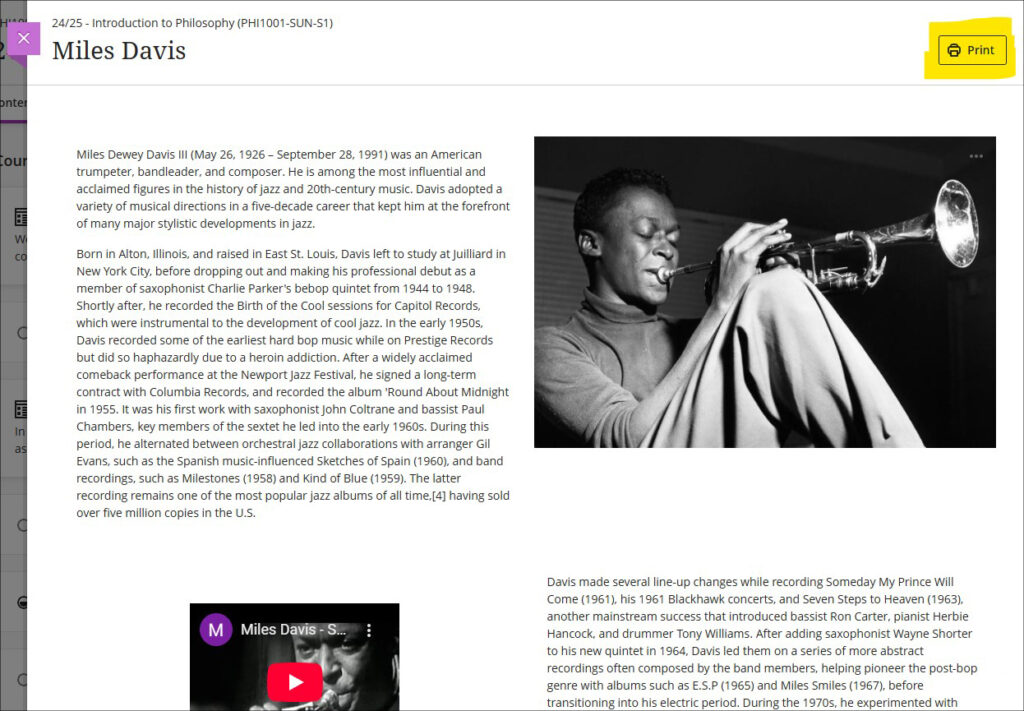

Option to print Ultra documents

Following April’s upgrade, staff and students will have the option to print Ultra documents to PDF or directly to a printer.

Where knowledge check questions are used in an Ultra document the print option works differently for staff and students. When staff print the document both the questions and the answers to knowledge check questions will be given in the printed version, but when students print the document only the questions will be printed.

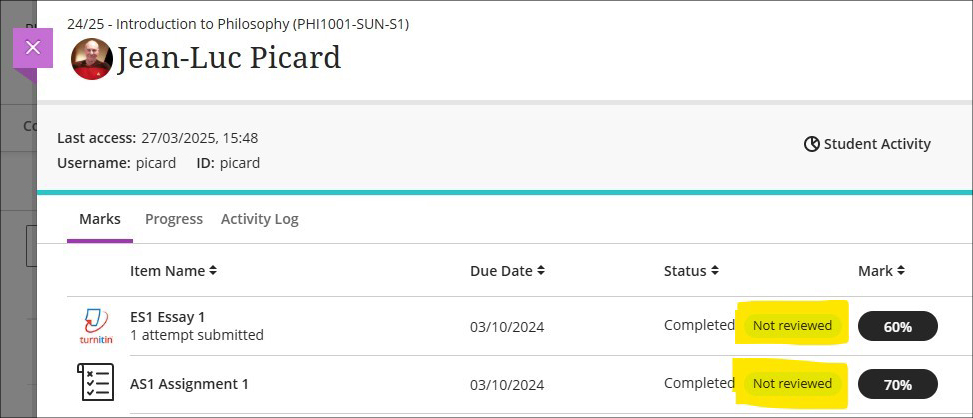

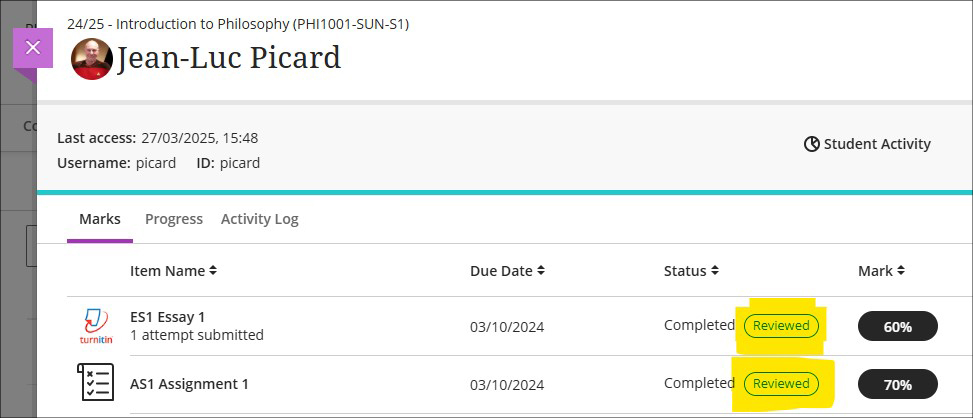

Blackboard assignments indicate if students have accessed feedback

While Turnitin assignments have for many years included an indicator to show whether students have accessed their feedback or not, Blackboard assignments have not had this feature. However, following this month’s upgrade staff will be able to see whether feedback has been accessed when using Blackboard assignments. Additionally, staff will also be able to see in the student overview page in Ultra courses whether students have accessed their feedback for Turnitin assignments too, making it easier for staff to get a quick overview about whether individual students have accessed the feedback for their assignments.

To access the student overview page in an Ultra course, select the student either from their entry in the class register or the gradebook. As well as showing the time and date on which they last accessed the NILE course, the student overview page also displays an overview of their marks, a summary of their progress through the course (via the ‘Progress’ tab) showing which course materials they have accessed, and a more detailed log of their course interactions (via the ‘Activity Log’ tab). The ‘Student Activity’ report, which details time spent in the course, is also available from this page.

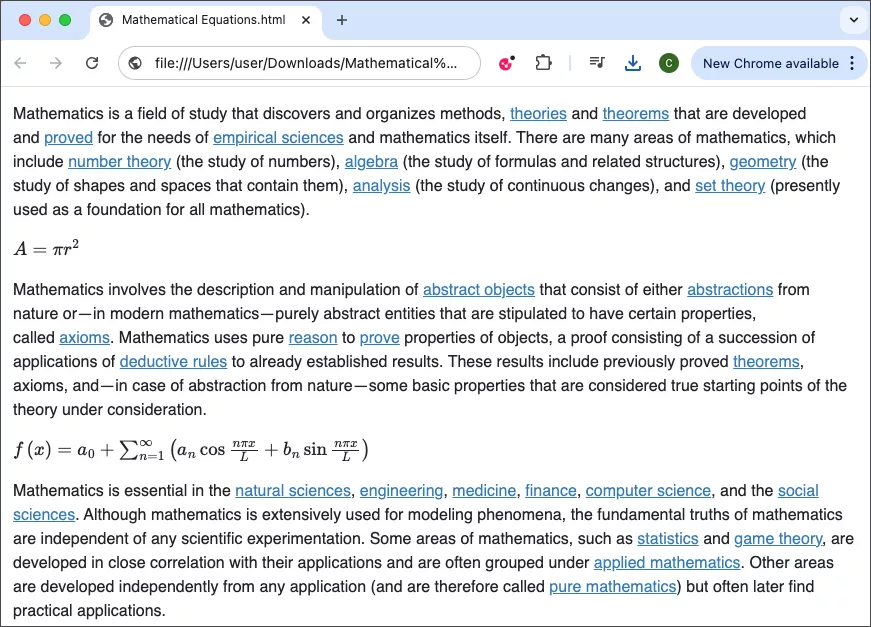

Maths support for Microsoft Word documents available for alternative formats

This month’s upgrade will see Ally’s alternative format conversion process upgraded to include the first version of support for OfficeMath found in Microsoft Word documents. Students downloading material in the HTML and BeeLine Reader alternative formats will now see equations rendered as MathML via MathJax in their browser, meaning that students will no longer be missing important equations when using Ally to download material in their preferred HTML option. This is the first of multiple releases to better support the rendering of equations within Ally’s alternative format options.

More information about what Ally is and how it works in NILE is available from: Blackboard Help – Ally

NILE Ultra Course Awards 2025 – Nominations open until 30th April

Have you put together a great NILE Ultra course for 2024/25? Or do you know someone who did? We’re really keen to highlight and celebrate examples of good practice with Ultra, so if you or someone you know has designed a good Ultra course we’d really like to hear from you. You can nominate yourself, or someone else, or multiple members of staff if the Ultra course has been created by more than one person. In your nomination we just need to know who it is that you’re nominating, which module the nomination is for, and what it is that you think has been done well. And you don’t have to tell us who is making the nomination if you don’t want to.

Nominated courses will be reviewed and Ultra Course Awards will be given according to the following criteria:

- The course follows the NILE Design Standards for Ultra Courses (https://libguides.northampton.ac.uk/learntech/staff/nile-design/nile-design-standards);

- The course is clearly laid out and well-organised at the top level via the use of content containers (i.e., learning modules and/or folders);

- Content items within top-level content containers are clearly named and easily identifiable for students, and, where necessary, sub-folders are used to organise content within the top-level content containers;

- The course contains online activities for students to take part in.

Winners of 2025 Ultra Course Awards will be announced at the University’s summer Learning and Teaching Conference, and you can find out more about last year’s winners here.

- Ultra Course Awards 2025 Nomination Form

Please note that nominations for 2025 Ultra Course Awards close at 23:59 on the 30th of April, 2025

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in this month’s Blackboard upgrade will be available from Friday 7th March. This month’s upgrade includes the following new/improved features to Ultra courses:

- Anonymous discussions – system administrators can view the author’s name if needed

- Pop out rubric for Blackboard assessment items

- Course open/closed settings panel improvements

Anonymous discussions – NILE system administrators can view the author’s name if needed

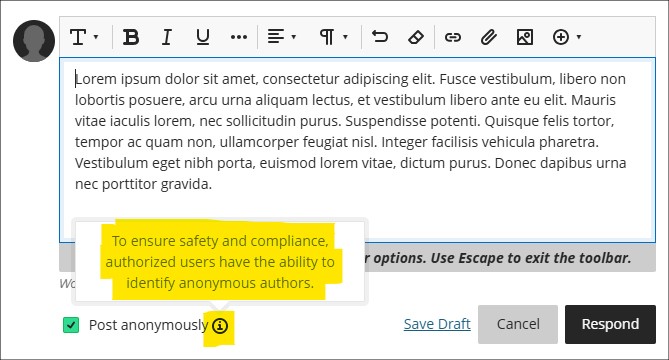

The April 2024 Blackboard upgrade introduced the option to allow students to post anonymously to Ultra discussions. While this was a welcome improvement, because anonymous posts could not be de-anonymised concerns were raised about what would happen if a student posted something that required the author’s name to be disclosed. In response to this, the March 2025 upgrade includes the option for NILE system administrators to view the name of anonymous authors of discussion posts. This will not de-anonymise the post author on the discussion itself, but will simply allow a system administrator to view the name of the post author. Where this is needed, staff can contact the Learning Technology Manager, providing details of the NILE course and discussion forum title, along with a screenshot of the post in question and reason why it is necessary to reveal the post author. As anonymous posts can now be revealed, a new ‘info’ option is available to students explaining that authorised users have the ability to identify anonymous authors.

For more information about setting up and using discussions in NILE, see: Blackboard Help – Create Discussions

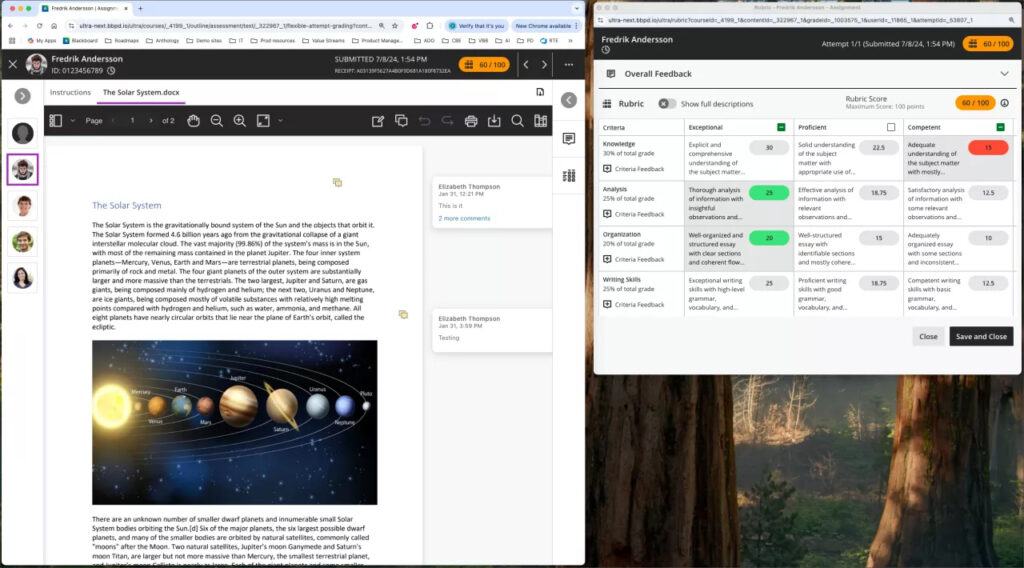

Pop out rubric for Blackboard assessment items

Following the March upgrade, staff will be able to pop out grading rubrics into a separate window when grading assignment submissions. Instead of only being available in the side panel and formatted in a stack, the pop-out rubric is a separate, movable window and formatted in a grid view.

This update makes it easier to navigate and grade student submissions by providing a clearer, more comprehensive view of the rubric. Popping out the rubric lets staff view the student submission and the rubric side-by-side for a more efficient grading experience. Staff can quickly select performance levels and provide feedback in the rubric while viewing the student submission.

More information about creating and managing Blackboard rubrics is available at: Blackboard Help – Create Rubrics

More information about grading with Blackboard rubrics is available at: Blackboard Help – Grade with Rubrics

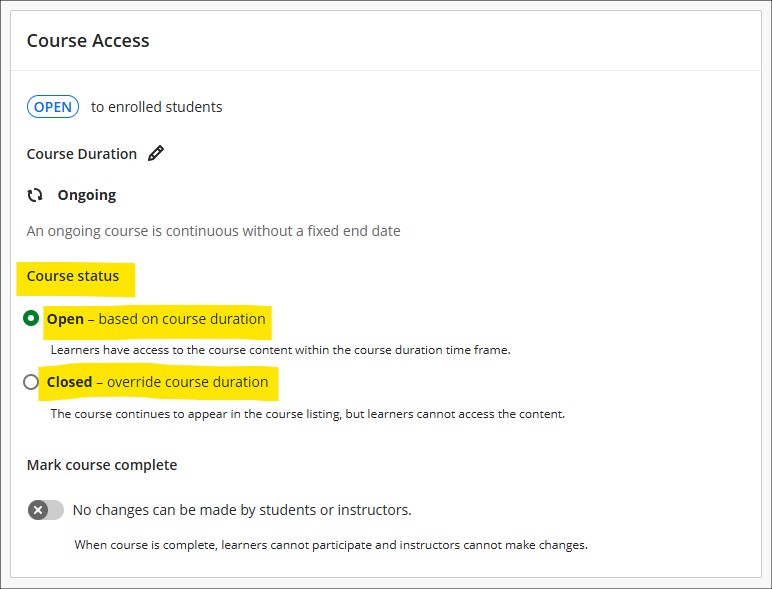

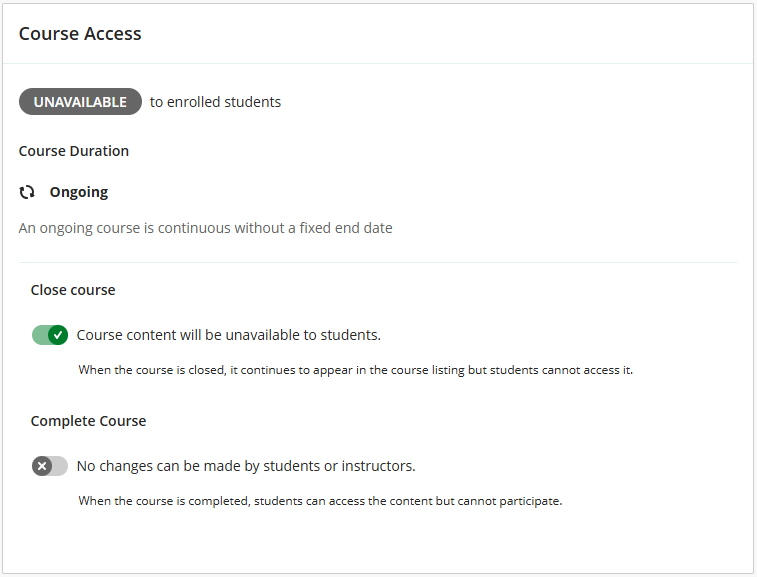

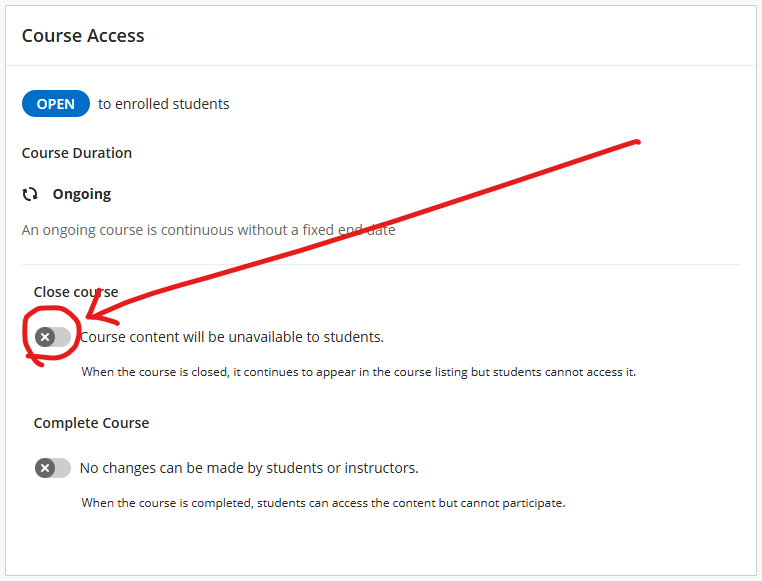

Course open/closed settings panel improvements

Following feedback about the January 2025 upgrade and the change of location for the open/close NILE course settings, the course setting panel interface has been improved for the March 2025 upgrade, making it easier for staff to choose between the open and closed status settings.

Once in the course settings panel, the March upgrade makes selecting between the course open/closed status much simpler.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in this month’s Blackboard upgrade will be available from Friday 7th February. This month’s upgrade includes the following new/improved features to Ultra courses:

- Improvements to AI Design Assistant

- Option to change folders to learning modules or learning modules to folders

- Upload images to Ultra documents using the new image block

- Improved rendering of Office (Word, PowerPoint, Excel) and PDF documents uploaded by staff and students, and improved student file submission process

Improvements to AI Design Assistant

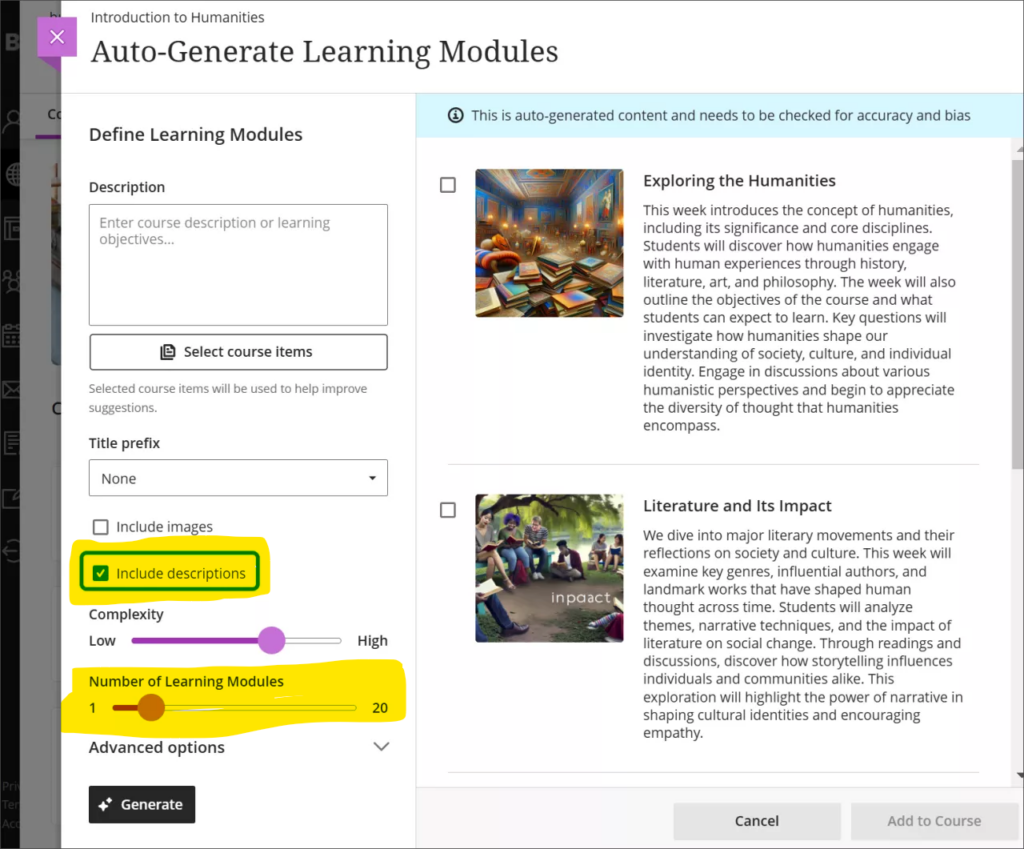

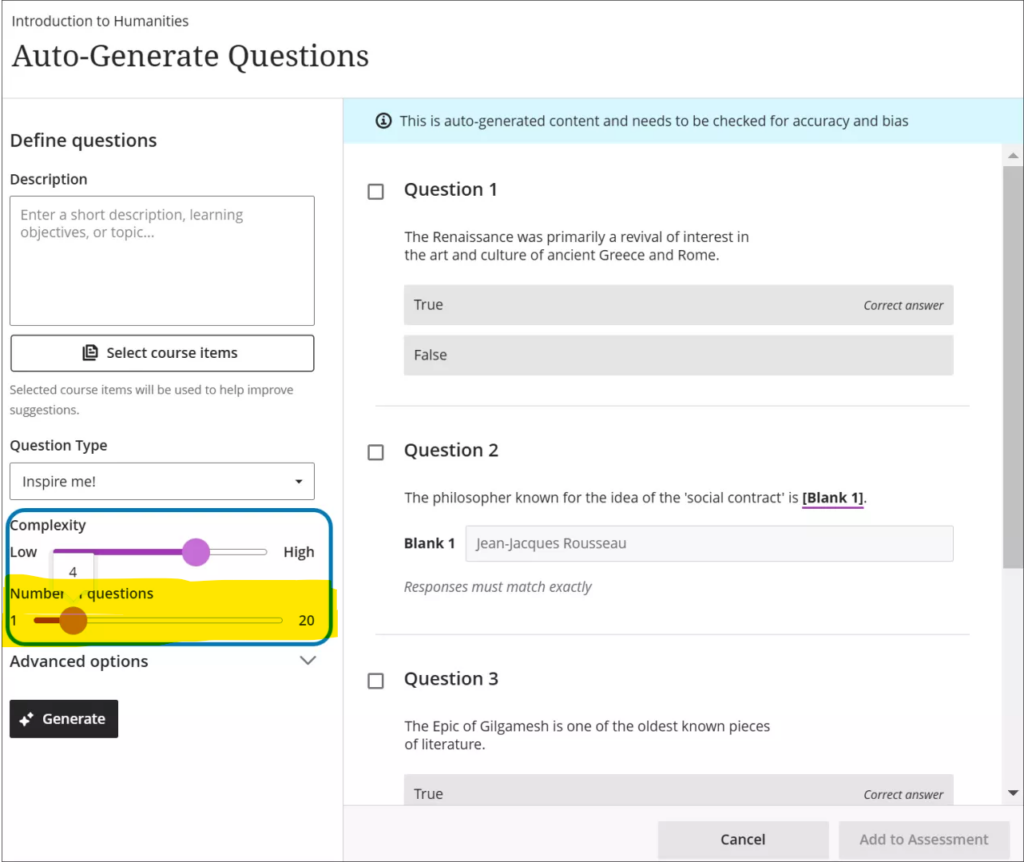

February’s upgrade will see two improvements to NILE’s AI Design Assistant:

- The option to include or exclude descriptions from auto-generated learning modules, plus the ability to auto-generate up to 20 learning modules (the previous maximum was 10)

- The ability to auto-generate up to 20 test questions at a time (the previous maximum was 10)

For more about the AI Design Assistant see: Learning Technology Team – AI Design Assistant

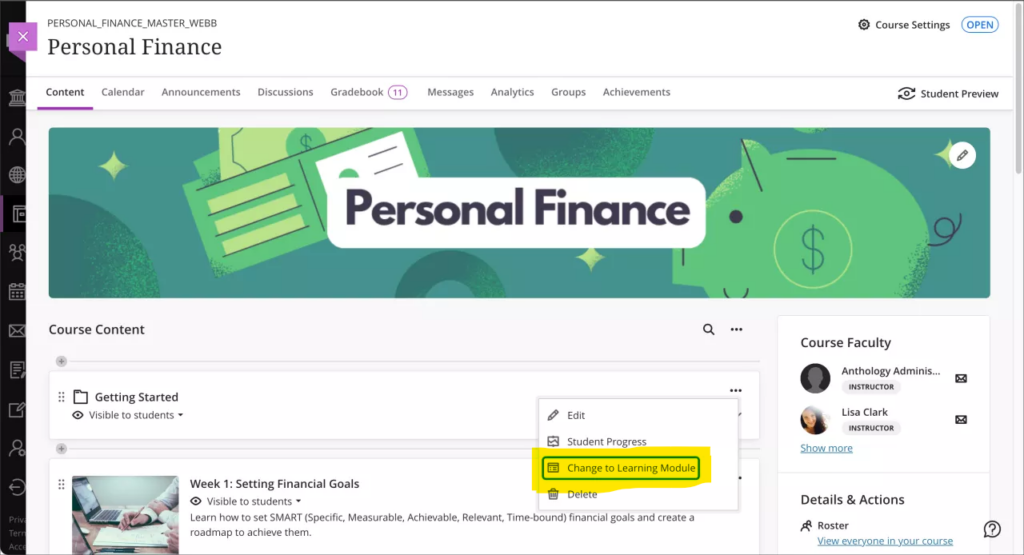

Option to change folders to learning modules or learning modules to folders

Following February’s update, staff will be able to quickly and easily change folders to learning modules, or learning modules to folders.

For more information about creating, customising, and using learning modules see: Blackboard Help – Create Learning Modules

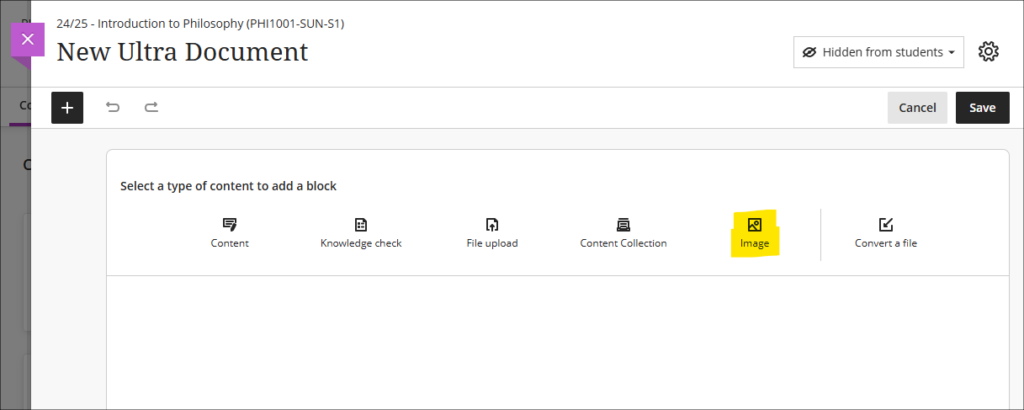

Upload images to Ultra documents using the new image block

Following February’s upgrade, staff will be able to use an image block to add images to Ultra documents. Staff can use image blocks to upload their own images, use the AI Design Assistant to generate images, or select images from Unsplash. Image blocks can be moved throughout a document, just like other block types. Staff also have the option to resize images, set height, and maintain aspect ratios in image blocks. Adding images via the image block also reduces white space around images and provides greater control over content design.

Improved rendering of Office (Word, PowerPoint, Excel) and PDF documents uploaded by staff and students, and improved student file submission process

Already in place is Blackboard’s improved rendering of standard Office (Word, PowerPoint, Excel) and PDF documents. Previously, when viewing Office and PDF documents in Ultra courses a message would appear warning that the file conversion process might have altered the document layout and spacing. While any changes were usually only very slight, Blackboard have upgraded the file conversion tool to eliminate any such changes. Thus, the versions of documents viewed in Ultra courses will be identical to the versions originally uploaded by staff and students. This upgrade will improve the experience of students viewing Office/PDF documents uploaded into the course content area of Ultra courses, and the experience of staff assessing Office/PDF documents submitted by their students using the Blackboard assignment tool.

Please note that no action is needed in order to activate the improved file rendering tool. Files previously uploaded by staff into the course content area will automatically be rendered using the upgraded tool. Files previously uploaded by students via Blackboard assignments will retain their previous formatting (i.e., they will be rendered using the old rendering tool) so as not to make any changes to work that has already been submitted and graded, but new student submissions to Blackboard assignments will use the upgraded rendering tool.

As part of the file submission process for students submitting work to Blackboard assignments, the February upgrade will bring the following additional improvements:

- Timed assessments: When a student uploads a file just before the timer runs out, the upload will complete before the attempt is auto-submitted

- Re-upload workflow: If a student’s file doesn’t upload correctly, they can easily re-upload it using the new workflow

Students can now be confident that their files are successfully attached, received, and stored, and they will be notified immediately if there are any issues with their file submissions.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in Blackboard’s January upgrade will be available from Friday 10th January. This month’s upgrade includes the following new/improved features to Ultra courses:

- Improvements to AI Design Assistant

- Staff option to hide/show columns in the gradebook

- New location for controls to open/close Ultra courses to students

- New location for staff ‘Enrol on a NILE course’ tool

- End of life for Turnitin ETS e-Rater

Improvements to AI Design Assistant

Following the improvement to auto-generated Blackboard rubrics in last month’s upgrade, for the January 2025 upgrade Blackboard have improved the auto-generation features of many of the other AI Design Assistant tools to produce faster and more complex outputs, including:

- Learning modules;

- Assignment, discussion, and journal prompts;

- Auto-generated test questions;

- AI conversation avatars;

- Image generation.

Following the upgrade, the auto-generated outputs for learning modules will be more descriptive and focused on the topic than was previously the case. Assignments, discussions, and journals will also present more depth in relation to prompts and content selected by staff. In addition, images for AI Conversation avatars will be more realistic, and the quality of the images generated by the AI Design Assistant will be improved, making the images look more realistic and better related to the auto-generation prompts.

You can find out more about using the AI Design Assistant at: Learning Technology Team – AI Design Assistant

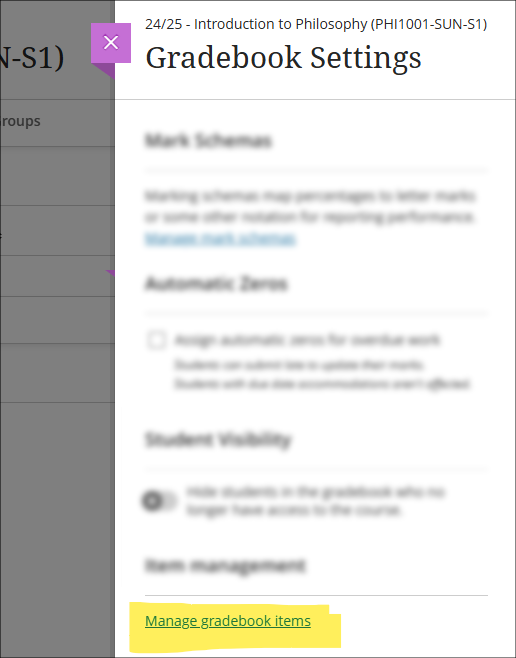

Staff option to hide/show columns in the gradebook

Currently, while staff can set gradebook items to be available or unavailable to students, staff have no way of hiding gradebook items from the staff view of the gradebook. To make the gradebook more manageable, after the January upgrade staff will be able to use the ‘Manage gradebook items’ option in the gradebook settings to hide items from the staff view of the gradebook without affecting the visibility of these items for students. Once hidden, items can be shown again by returning to ‘Manage gradebook items’ and changing their visibility status.

More information about using the Ultra gradebook is available from: Blackboard Help – Ultra Gradebook

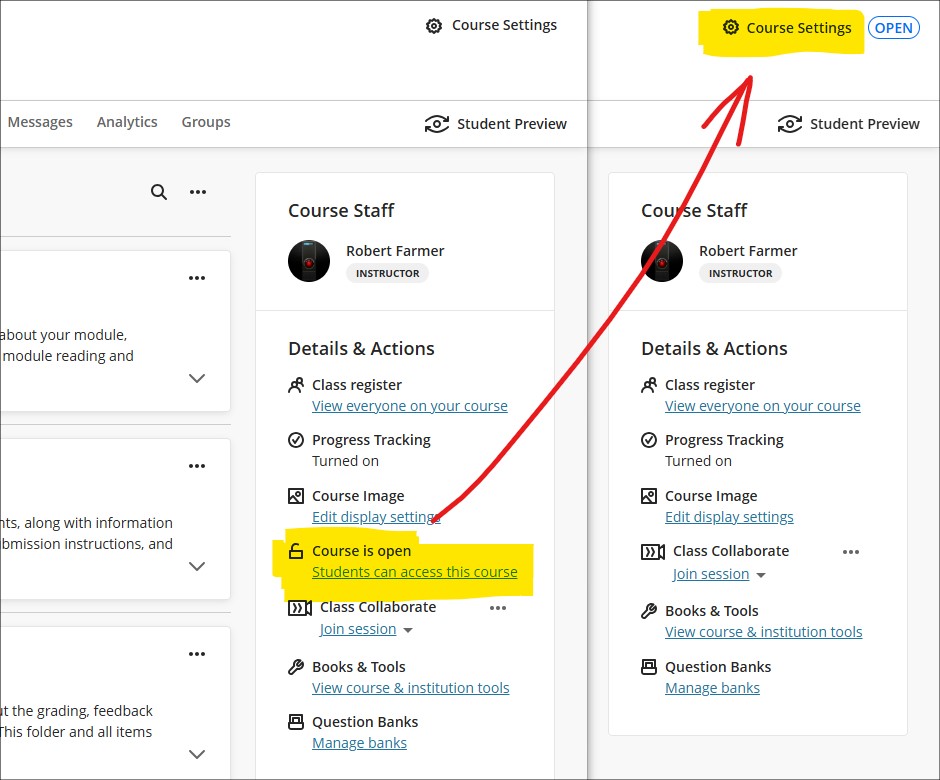

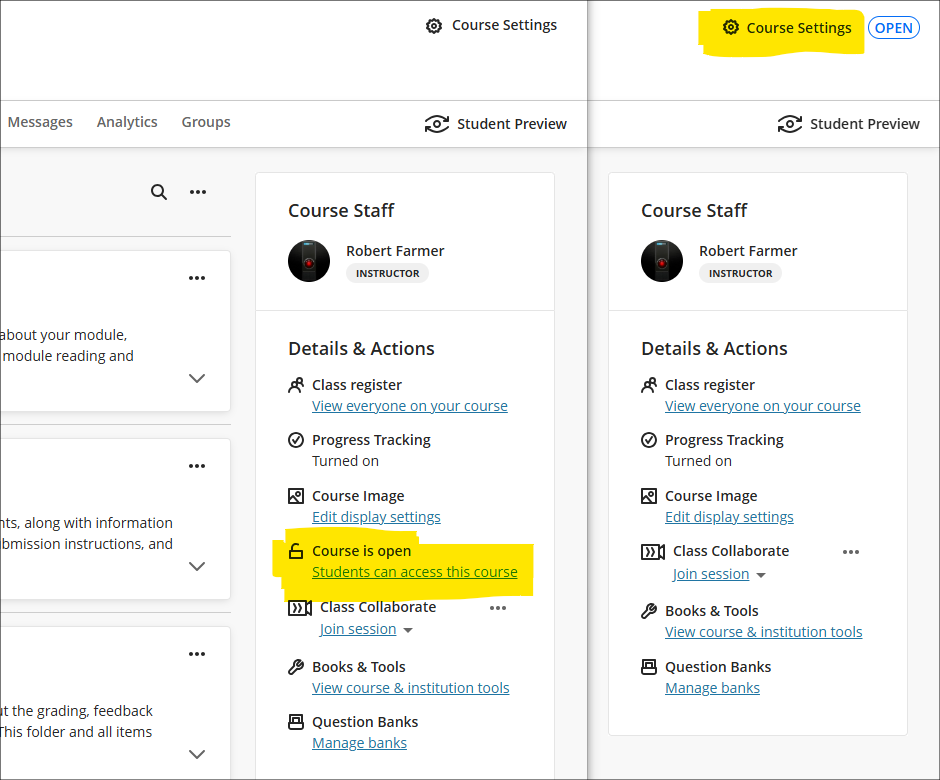

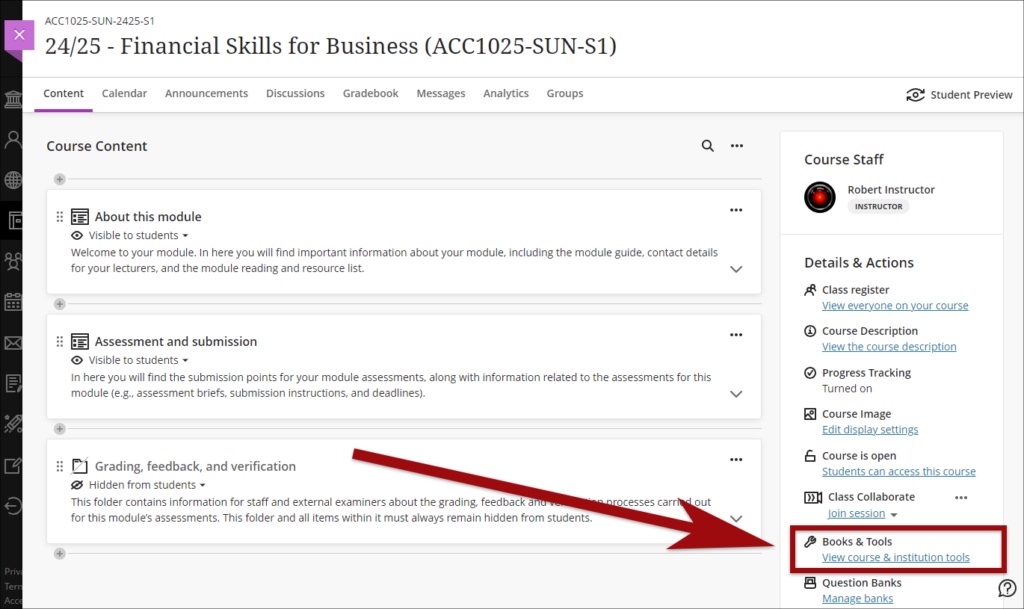

New location for controls to open/close Ultra courses to students

Following January’s upgrade, the setting to open, close, and complete Ultra courses will move from the ‘Details & Actions’ menu to the ‘Course Settings’ panel. Additionally, the current status of the course will be displayed to staff in the upper right-hand corner of the course page.

New NILE courses will continue to be closed (i.e., unavailable) to students so that teaching staff can open them to students when they are ready.

When accessing the course settings panel, a closed course will look like this:

To open the course, switch off the ‘Close course’ button:

More information about the course access status is available from: Blackboard Help – Course Settings, Course Access

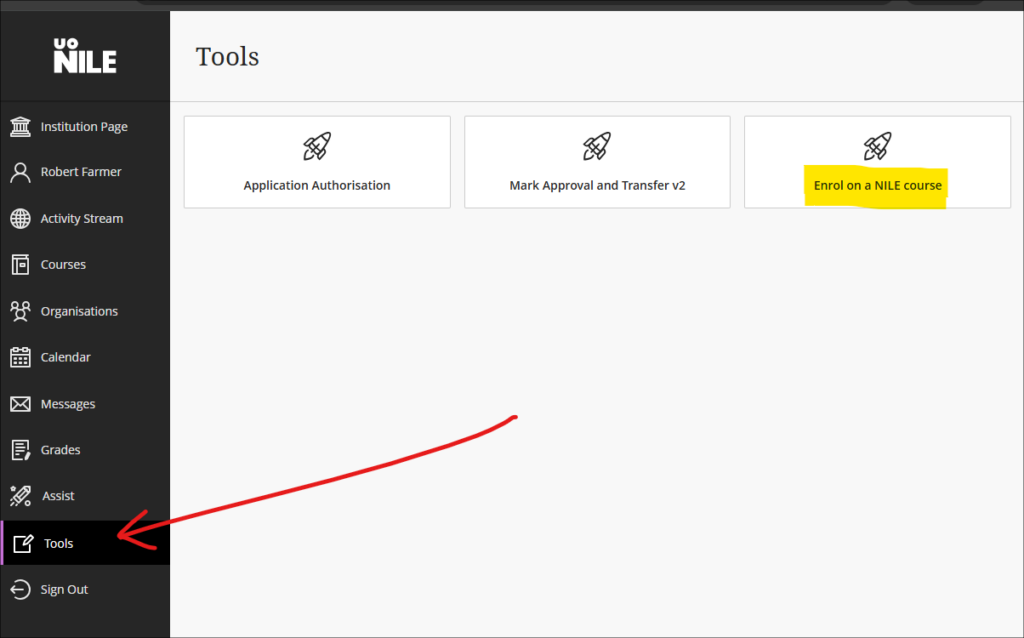

New location for staff ‘Enrol on a NILE course’ tool

Following the January upgrade, an improved version of the ‘Enrol on a NILE course’ tool will be located in the Tools section of the main NILE menu. The new version of this tool allows UON staff to enrol on (and unenrol from) a NILE course as either ‘Instructor’ or ‘Support Staff’.

- Instructor: this role provides full permissions for the NILE course and is intended for academic staff teaching on the course.

- Support Staff: this role provides limited permissions for the NILE course and is intended for professional services staff supporting students on the course (e.g., ASSIST staff).

More information about using the tool is available from: Learning Technology Team – How do I enrol on or remove myself from a NILE course?

End of life for Turnitin ETS e-Rater

Turnitin has been notified by Educational Testing Services (ETS) that they will no longer be supporting their e-rater grammar service in 2025. As a result, Turnitin will be removing the ETS e-rater from all of its products as of December 31, 2024.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in Blackboard’s December upgrade will be available from Friday 6th December, along with two new features which are available already. This month’s upgrade includes the following new/improved features to Ultra courses:

- NILE > SITS grades transfer process (available now)

- Turnitin Paper Lookup Tool (available now)

- AI Design Assistant: Rubric generation improvements

- New post indicator for Ultra discussions

- Important updates to the storage and management of Kaltura media (from 31st December)

- End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

NILE > SITS grades transfer process

The process for sending grades from NILE to SITS is now available. Full guidance on how the process works, along with a list of FAQs, is available at: Learning Technology Team – Transferring grades from NILE to SITS

Turnitin Paper Lookup Tool

The Turnitin Paper Lookup Tool is now available, which means that staff can easily find assessments that were submitted to Turnitin via NILE courses which are no longer available. The tool is accessed from the course content area of a NILE course, in the Details & Actions menu, under ‘Books and Tools: View course and institution tools’.

The Paper Lookup Tool is the same in all NILE courses, so it doesn’t matter which NILE course you access it through. Guidance on how to use the Paper Lookup Tool is available at: Turnitin – The paper lookup tool for Feedback Studio

AI Design Assistant: Rubric generation improvements

Blackboard have upgraded the AI tool powering the auto-generation of rubrics. Following the upgrade, Blackboard say that rubrics “will now have more complex outputs. The AI Design Assistant will also work faster. Instructors can expect better rubrics, even for long, complicated assessments.”

More information about using the AI Design Assistant is available from: Learning Technology Team – AI Design Assistant

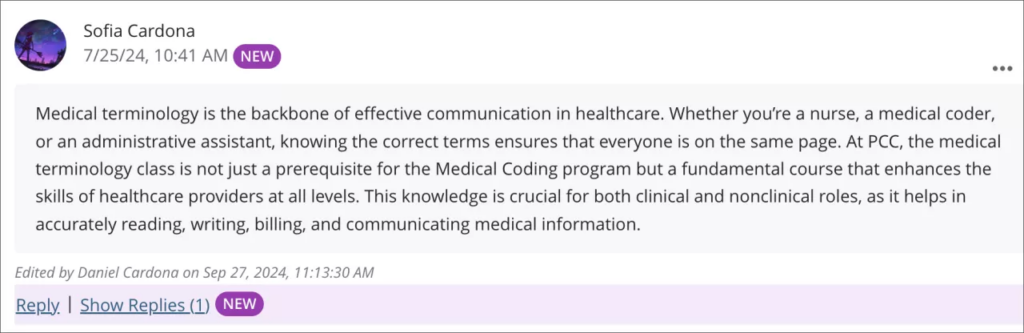

New post indicator for Ultra discussions

Following the December upgrade, a ‘New’ indicator will be displayed next to posts and replies that a user has not yet accessed.

More information about using discussions in Ultra courses is available from: Blackboard Help – Discussions

Important updates to the storage and management of Kaltura media (from 31st December)

As previously announced, in order to make more effective use of our available storage in Kaltura, the audio/video streaming system used in NILE, in October 2024 ITSG (IT Steering Group) approved changes to the storage and management of Kaltura media. Removal of media files from Kaltura will now take place as follows:

- Student Media: Media files with no plays to be permanently deleted after three years. Media files with one or more plays to be permanently deleted eight years after upload date.

- Staff Media: Media files with no plays in the last eight years to be permanently deleted.
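
The retention rules above can be read as a simple decision procedure. The following Python sketch is illustrative only and is not the process that Kaltura or the Learning Technology Team actually runs. The `MediaFile` fields and the function name are hypothetical, a year is approximated as 365 days, and it assumes both that the three-year period for unplayed student media is measured from the upload date and that staff media which has never been played is aged from its upload date.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

YEAR = timedelta(days=365)  # approximation; leap days are ignored


@dataclass
class MediaFile:
    owner_type: str                     # "student" or "staff" (hypothetical labels)
    uploaded: date
    play_count: int = 0
    last_played: Optional[date] = None  # None if the file has never been played


def due_for_deletion(media: MediaFile, today: date) -> bool:
    """Return True if the media file falls under the deletion rules."""
    if media.owner_type == "student":
        # No plays: delete after three years (assumed to run from upload).
        # One or more plays: delete eight years after the upload date.
        limit = 3 * YEAR if media.play_count == 0 else 8 * YEAR
        return today - media.uploaded >= limit
    if media.owner_type == "staff":
        # Delete only if there have been no plays in the last eight years.
        last_activity = media.last_played or media.uploaded
        return today - last_activity >= 8 * YEAR
    raise ValueError(f"unknown owner type: {media.owner_type!r}")
```

For example, under these assumptions a student video uploaded in January 2021 and never played would be due for deletion by January 2025, whereas the same video with at least one play would be retained until eight years after upload.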

End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

As previously announced, in order to implement necessary security measures, from the 1st of January 2025 guest access to NILE will no longer be possible. This means that only logged in users will be able to access NILE. Guest access to Ultra courses has never been possible; however, some old Original courses, including welcome courses, may still be available via guest access and the information they contain may need to be relocated.

Additionally, please note that while most NILE courses are regularly archived and removed from NILE in accordance with the NILE Archiving and Retention Policy, some old Original courses and organisations remain on the system and will continue to be removed from NILE on a rolling ten-year basis. Currently, all Original courses and organisations created before 01/01/2014 are no longer available on NILE, and courses created before 01/01/2015 will no longer be available from 1st January 2025.
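
The rolling ten-year rule amounts to a simple date calculation. As a rough Python sketch (the function names are hypothetical, and it assumes that the cutoff moves forward by one year on 1st January each year, as the two dates above suggest):

```python
from datetime import date


def removal_cutoff(today: date) -> date:
    """Original courses created before this date are assumed to have been removed."""
    return date(today.year - 10, 1, 1)


def is_still_available(created: date, today: date) -> bool:
    """Return True if a course created on `created` has not yet reached the cutoff."""
    return created >= removal_cutoff(today)
```

So an Original course created in September 2014 would still be available throughout 2024, but not from 1st January 2025.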

Staff who are concerned that they may be affected by either of these matters are encouraged to contact Robert Farmer, the Learning Technology Manager, to discuss their requirements. Where information needs to be available to people who do not have a NILE login, it will be necessary to use another platform to provide this. However, where using NILE is still the best option, we will be happy to provide a new Ultra course or organisation to replace the old Original one.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

Blackboard’s AI Design Assistant, launched last December, has quickly proven to be a helpful tool for developing content within NILE courses. Our early data shows that while most users create tests independently, the AI Design Assistant is especially popular for test and quiz creation. Senior Lecturer in Nursing, Julie Holloway, shares her positive experience using AI to support her pharmacology students in a prescribing programme.

“We are using AI to help generate new exam questions, particularly in relation to pharmacology content,” Julie explains. Due to the parameters of professional regulation within independent prescribing, she’s unable to provide students with past exam papers for their revision. Instead, AI has allowed her to create supplemental revision questions directly linked to each pharmacology lecture, providing students with valuable practice material aligned with their coursework.

One notable feature in the AI Design Assistant enables users to select existing course materials for the AI to draw from when generating content. Julie has leveraged this by selecting specific pharmacology materials, allowing her to create questions that closely reflect the lectures and give students an efficient tool for self-assessment. “The process was easier than I originally thought,” she adds.

As well as helping with efficiency, Julie also noted the AI’s capacity to inspire new ways of phrasing questions. “Exam questions can become repetitive,” she says, and the AI’s suggestions help with this and enhance the student experience by supplementing their revision.

In thinking about the limitations of using AI to create test questions, Julie points out that the AI occasionally generates questions that aren’t entirely relevant. However, she highlights, “I think this will improve as we get more experienced with working with AI and search terms, etc.”

Would she recommend this approach to other educators? “Absolutely,” she says, encouraging colleagues to explore the tool themselves. “Just give it a try—it’s not as scary as you think!”

If you would like any support using any of the AI Design Assistant tools in NILE, then please contact your Learning Technologist. If you are unsure who this is, then please select this link: https://libguides.northampton.ac.uk/learntech/staff/nile-help/who-is-my-learning-technologist

For more information on the AI Design Assistant, please select this link: https://libguides.northampton.ac.uk/learntech/staff/nile-guides/ai-design-assistant

The new features in Blackboard’s November upgrade will be available from Friday 8th November. This month’s upgrade includes the following new/improved features to Ultra courses:

- Improvement to the print test option for Ultra tests

- Improved assignment file submission process for students submitting files to Ultra assignments

- Important updates to the storage and management of Kaltura media (from 31st December)

- End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

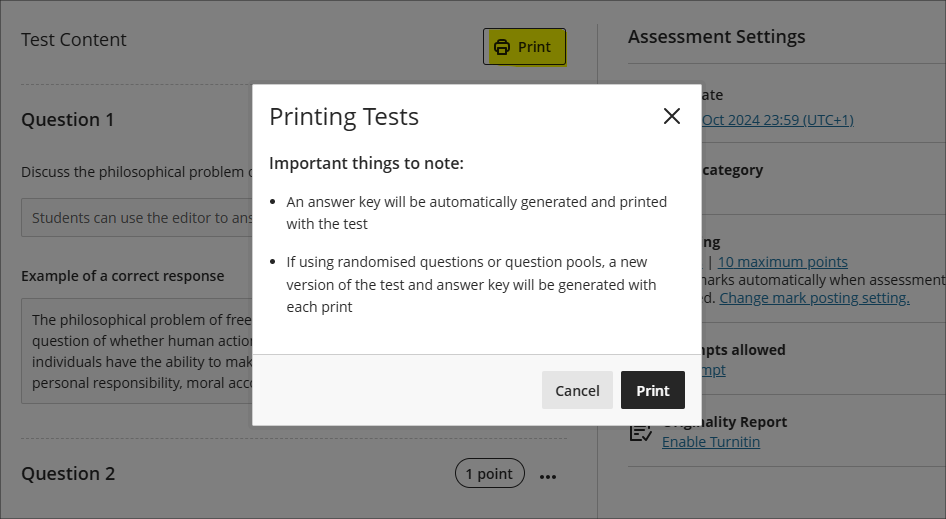

Improvement to the print test option for Ultra tests

Prior to the November upgrade, the print test option in Ultra tests did not include questions drawn from question pools. Following the upgrade, question pool questions will be included when printing tests. In addition, the print test option will now generate a single PDF file containing the test with answers (the answer key) in the first half, and the test as it appears to students, without answers, in the second half. As is currently the case, the print test option will only be available to staff.

Improved assignment file submission process for students submitting files to Ultra assignments

The November upgrade improves the file upload process for students submitting files to Ultra assignments, making students aware of corrupted files, files which exceed the maximum permitted file size limit, audio/video files which must be uploaded via Kaltura, and ensuring all files are correctly attached during multiple file uploads.

- Corrupted File Alerts: If a student attempts to submit a corrupted file, a pop-up will notify them of the issue.

- File Size Limit & File Type Notification: A pop-up will display a message to students if their file exceeds the permitted size limit of 1GB or is an audio/video file that needs to be uploaded to NILE using Kaltura. In both of these instances, students will be directed to view the ‘Why can’t I upload my file to NILE?’ FAQ.

- Managing Multiple File Uploads: To prevent issues when uploading multiple files, students will now be required to wait for each file to complete the upload process before attaching additional files.
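
The first two checks described above could be expressed roughly as follows. This is a hedged Python sketch and not Blackboard’s implementation: the function name, the extension list used to spot audio/video files, the `is_corrupted` flag (standing in for whatever integrity check Blackboard performs), and the reading of 1GB as 1024³ bytes are all assumptions made for illustration.

```python
from pathlib import Path
from typing import Optional

MAX_SIZE_BYTES = 1024 ** 3  # assumed interpretation of the 1GB limit
# Hypothetical list; the post does not say which audio/video types are affected.
MEDIA_EXTENSIONS = {".mp3", ".wav", ".mp4", ".mov", ".avi"}


def upload_problem(filename: str, size_bytes: int, is_corrupted: bool = False) -> Optional[str]:
    """Return a message explaining why the upload is blocked, or None if it is allowed."""
    if is_corrupted:
        return "This file appears to be corrupted."
    if Path(filename).suffix.lower() in MEDIA_EXTENSIONS:
        return "Audio/video files must be uploaded to NILE using Kaltura."
    if size_bytes > MAX_SIZE_BYTES:
        return "This file exceeds the 1GB size limit."
    return None
```

In this sketch, a call such as `upload_problem("essay.docx", 5_000_000)` returns `None`, meaning the file can be attached, while an audio/video file is redirected to Kaltura regardless of its size.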

Important updates to the storage and management of Kaltura media (from 31st December)

In order to make more effective use of our available storage in Kaltura, the audio/video streaming system used in NILE, in October 2024 ITSG (IT Steering Group) approved changes to the storage and management of Kaltura media. Removal of media files from Kaltura will now take place as follows:

- Student Media: Media files with no plays to be permanently deleted after three years. Media files with one or more plays to be permanently deleted eight years after upload date.

- Staff Media: Media files with no plays in the last eight years to be permanently deleted.

End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

As previously announced in our Blackboard Upgrade – July 2024 post, in order to implement necessary security measures, from the 1st of January 2025 guest access to NILE will no longer be possible. This means that only logged in users will be able to access NILE. Guest access to Ultra courses has never been possible; however, some old Original courses, including welcome courses, may still be available via guest access and the information they contain may need to be relocated.

Additionally, please note that while most NILE courses are regularly archived and removed from NILE in accordance with the NILE Archiving and Retention Policy, some old Original courses and organisations remain on the system and will continue to be removed from NILE on a rolling ten-year basis. Currently, all Original courses and organisations created before 01/01/2014 are no longer available on NILE, and courses created before 01/01/2015 will no longer be available from 1st January 2025.

Staff who are concerned that they may be affected by either of these matters are encouraged to contact Robert Farmer, the Learning Technology Manager, to discuss their requirements. Where information needs to be available to people who do not have a NILE login, it will be necessary to use another platform to provide this. However, where using NILE is still the best option, we will be happy to provide a new Ultra course or organisation to replace the old Original one.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?

The new features in Blackboard’s October upgrade are available from Friday 4th October. This month’s upgrade includes the following new/improved features to Ultra courses:

- Students who have completed their studies no longer hidden in NILE courses

- Email notifications for followed discussions

- Auto-generate test questions in question banks

- End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

Students who have completed their studies no longer hidden in NILE courses

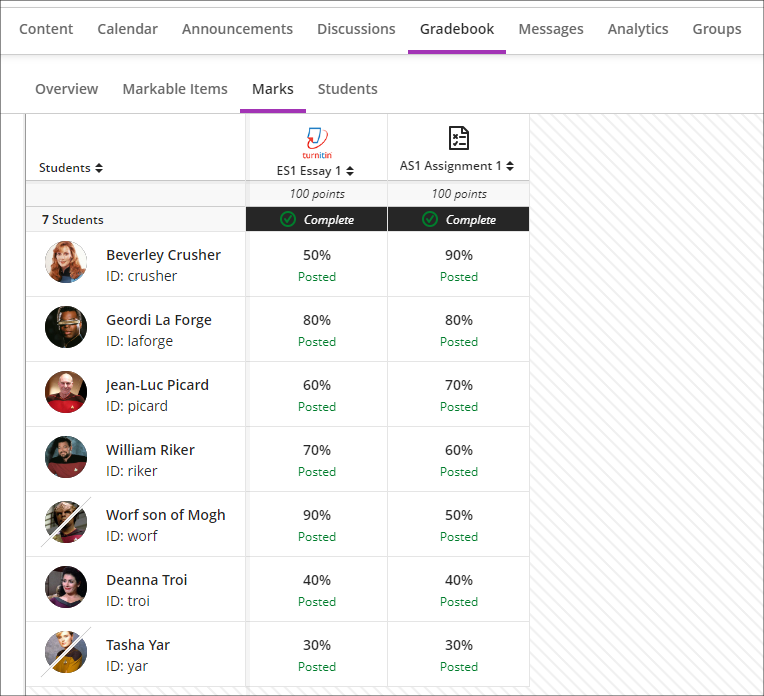

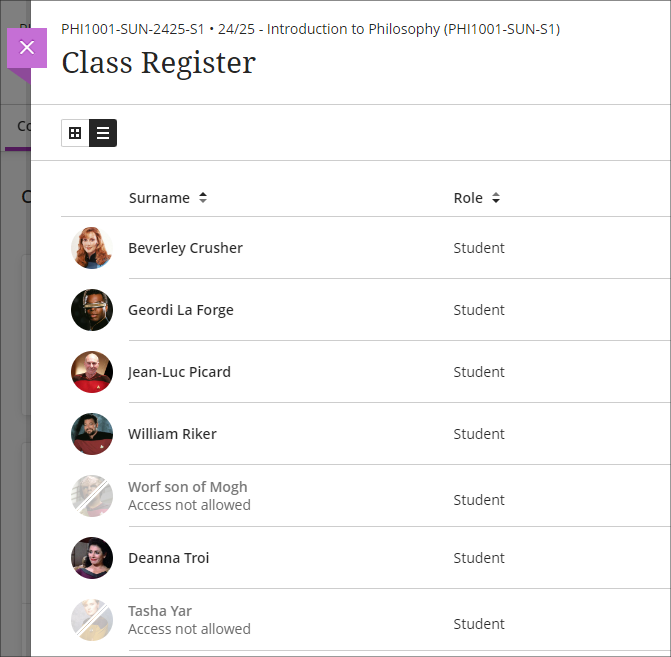

Students’ NILE accounts are automatically made unavailable in NILE approximately one month after their status in the SRS (Student Records System) is updated from ‘Enrolled’ to ‘Award’. This has had the effect of hiding these students in their NILE courses, which has made it difficult for staff to view the assignments, grades and feedback of students who have completed their studies.

Following the October upgrade this will no longer happen. Instead of disappearing from the gradebook and from the class register, students whose NILE accounts have been made unavailable will remain in their NILE courses, with their accounts marked with a strikethrough.

Due to the way that Turnitin functions, unavailable students and their submissions will not be visible in the Turnitin submission inbox; however, the grades of unavailable students’ Turnitin assignments will be visible in the gradebook. Unavailable students’ Blackboard assignments, tests, journals and discussions will be fully visible in NILE.

Please note that the above change to NILE courses only applies to students who have completed their studies and whose NILE accounts have been made unavailable. It will continue to be the case that students who have been withdrawn from a module or who have transferred off a module will not appear in those particular NILE courses.

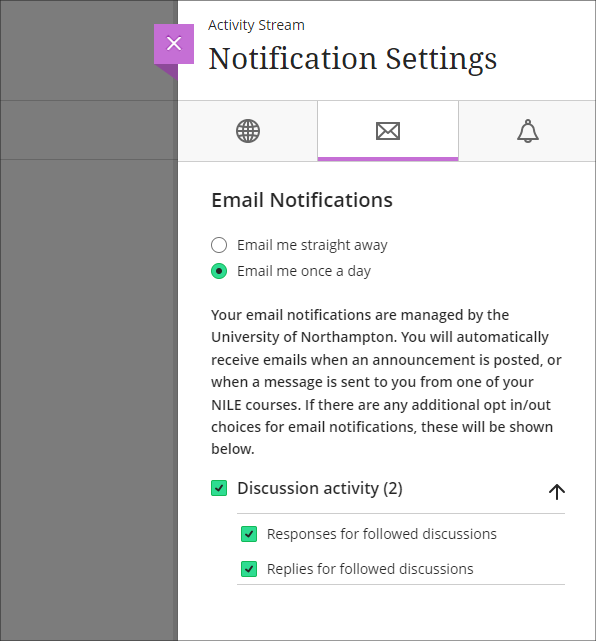

Email notifications for followed discussions

Following on from the August 2024 release, in which a ‘Follow discussion’ option was introduced to Ultra discussions, the October 2024 release will allow staff and students to optionally receive email notifications for followed discussions.

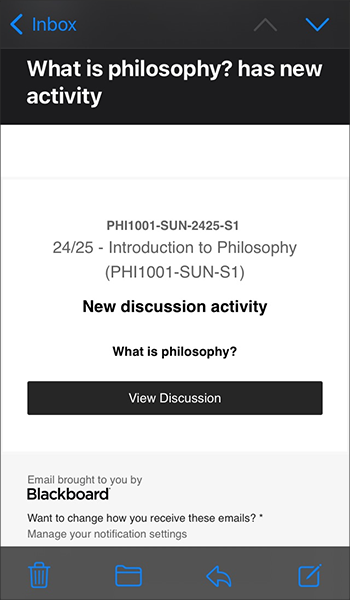

Staff and students who opt to follow discussions will continue to receive notifications in the activity stream when there are responses and replies to the discussions they are following. Additionally, staff and students will be able to receive email notifications when there are responses and replies to followed discussions, and these will be configurable in the email notifications section of the activity stream’s notification settings. Emails for followed discussions will be on by default, and the default frequency for email notifications is ‘Email me once a day’.

Please note that the emails received for followed discussions will not contain the actual content of the discussion response or reply, but will only state that a response or reply has been made to a followed discussion, and will include a link to the discussion.

More information about discussions in Ultra courses is available from: Blackboard Help – Discussions

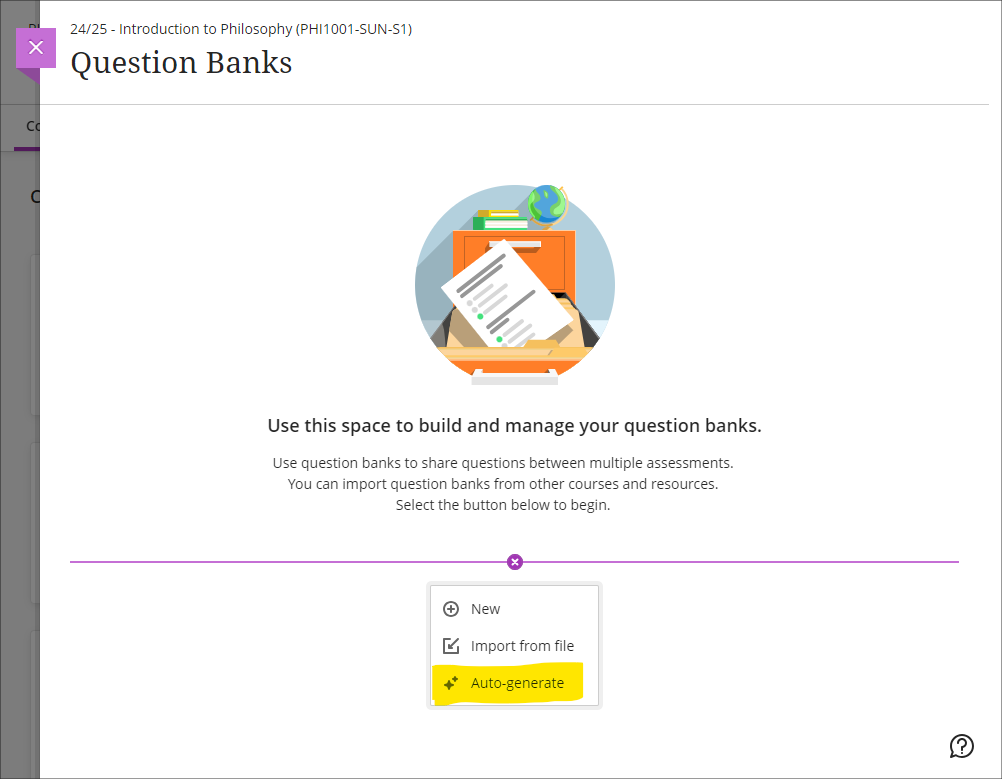

Auto-generate test questions in question banks

Following October’s upgrade, staff will be able to use the AI Design Assistant to auto-generate test questions in question banks. The question banks tool is available from the ‘Details & Actions’ menu in the content area of Ultra courses. Using question banks makes it much easier to reuse test questions in different tests, or to create a large pool of test questions which can be reused in tests which pick a random sample of test questions.

More information about using the AI Design Assistant is available from: Learning Technology Team – AI Design Assistant

More information about using question banks in Blackboard tests is available from: Blackboard Help – Question Banks

End of life for guest access to NILE, including welcome courses and organisations, and removal of old Original courses and organisations (from 31st December)

As previously announced in our Blackboard Upgrade – July 2024 post, in order to implement necessary security measures, from the 1st of January 2025 guest access to NILE will no longer be possible. This means that only logged in users will be able to access NILE. Guest access to Ultra courses has never been possible; however, some old Original courses, including welcome courses, may still be available via guest access and the information they contain may need to be relocated.

Additionally, please note that while most NILE courses are regularly archived and removed from NILE in accordance with the NILE Archiving and Retention Policy, some old Original courses and organisations remain on the system and will continue to be removed from NILE on a rolling ten-year basis. Currently, all Original courses and organisations created before 01/01/2014 are no longer available on NILE, and courses created before 01/01/2015 will no longer be available from 1st January 2025.

Staff who are concerned that they may be affected by either of these matters are encouraged to contact Robert Farmer, the Learning Technology Manager, to discuss their requirements. Where information needs to be available to people who do not have a NILE login, it will be necessary to use another platform to provide this. However, where using NILE is still the best option, we will be happy to provide a new Ultra course or organisation to replace the old Original one.

More information

As ever, please get in touch with your learning technologist if you would like any more information about the new features available in this month’s upgrade: Who is my learning technologist?
